Simple TypeScript type definitions for AMD modules

I wanted to write a TypeScript type definition file for a JavaScript module that I wrote last year, so that I could use it from within TypeScript in a seamless manner - with argument and return type annotations present. I considered porting it to TypeScript but, since all that I really wanted was the type annotations, it seemed like a type definition file would be just the job and would save me from maintaining the code in two languages (well, three, actually, since I originally ported it from C#).

The module in question is the CSS Parser that I previously wrote about porting (see The C# CSS Parser in JavaScript). It's written to be referenced directly in the browser as a script tag or to be loaded asynchronously (which I also wrote about in JavaScript dependencies that work with Brackets, Node and in-browser).

I wanted to write a type definition to work with the AMD module loading that TypeScript supports. And this is where I came a bit unstuck.

I must admit that, writing this now, it seems that nothing that I'm about to cover is particularly complicated or confusing - it's just that when I tried to find out how to do it, I found it really difficult! The DefinitelyTyped GitHub repo seemed like it should be a good start, since surely it would cover any use case I could think of, but it was also difficult to know where to start. I couldn't think of any packages I knew that supported AMD and whose type definitions were small enough for me to understand just by trying to stare them down.

There is an official TypeScript article that is commonly linked to by Stack Overflow answers: Writing Definition (.d.ts) Files, but this seems to take quite a high level view and I couldn't work out how to expose my module's functionality in an AMD fashion.

The short answer

In my case, I basically had a module of code that exposed functions. Nothing needed to be instantiated in order to call these functions, they were just available.

To reduce it to the simplest case, imagine that my module only exposed a single function "GetLength" that took a single parameter of type string and returned a value of type number. The type definition would then be:

declare module SimpleExample {
    export function GetLength(content: string): number;
}

export = SimpleExample;

This allows the module to be used in TypeScript elsewhere with code such as:

import simpleExample = require("simpleExample");

console.log(simpleExample.GetLength("test"));

So easy! So straightforward! And yet it seemed like it took me a long time to get to this point :(

One of the problems I struggled with is that there are multiple ways to express the same thing. The following seemed more natural to me, in a way -

interface SimpleExample {
    GetLength(content: string): number;
}

declare var simpleExampleInstance: SimpleExample;

export = simpleExampleInstance;

It is common for a module to build up an instance to export as the AMD interface and the arrangement above does, in fact, explicitly describe the module as containing an instance that implements a specified interface. This interface is what consuming TypeScript code will work against.

Side note: It doesn't matter what name is given to "simpleExampleInstance" since it is just a variable that is being directly exported.

In this simple case, the TypeScript example still works - the module may be consumed and the "GetLength" method may be called as expected. It is only when things become more complicated (as we shall see below) that this approach becomes troublesome (meaning we will see that the "declare module" approach turns out to be a better way to do things).
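In the simple case, both declarations work because both describe the same runtime shape. The sketch below is a runnable stand-in for the module itself. It makes one assumption: the `content.length` body of "GetLength", since the post doesn't show the real implementation. A namespace and a plain object both expose the same "GetLength" member, so either one satisfies the same interface.

```typescript
// The shape the "declare module" approach describes: a namespace of functions.
namespace SimpleExample {
  // Assumed implementation, for illustration only.
  export function GetLength(content: string): number {
    return content.length;
  }
}

// The shape the "interface + declare var" approach describes.
interface SimpleExampleShape {
  GetLength(content: string): number;
}

// An object instance that implements the interface directly.
const simpleExampleInstance: SimpleExampleShape = {
  GetLength: (content: string) => content.length,
};

// The namespace value is structurally compatible with the interface too,
// which is why consuming code can't tell the two approaches apart here.
const viaNamespace: SimpleExampleShape = SimpleExample;

console.log(viaNamespace.GetLength("test"), simpleExampleInstance.GetLength("test"));
```

The difference only shows up once the module needs to export more than members of a single instance, such as the enum below.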

Implementation details

So that's a simple example, now to get back to the case I was actually working on. The first method that I want to expose is "ParseCss". This takes in a string and returns an array of "categorised character strings" - these are strings of content with a "Value" string, an "IndexInSource" number and a "CharacterCategorisation" number. So the string

body { color: red; }

is broken down into

Value: "body", IndexInSource: 0, CharacterCategorisation: 4

Value: " ", IndexInSource: 4, CharacterCategorisation: 7

Value: "{", IndexInSource: 5, CharacterCategorisation: 2

Value: " ", IndexInSource: 6, CharacterCategorisation: 7

Value: "color", IndexInSource: 7, CharacterCategorisation: 4

Value: ":", IndexInSource: 12, CharacterCategorisation: 5

Value: " ", IndexInSource: 13, CharacterCategorisation: 7

Value: "red", IndexInSource: 14, CharacterCategorisation: 6

Value: ";", IndexInSource: 17, CharacterCategorisation: 3

Value: " ", IndexInSource: 18, CharacterCategorisation: 7

Value: "}", IndexInSource: 19, CharacterCategorisation: 1
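To make that breakdown concrete, here is a deliberately simplified categoriser that reproduces the list above exactly. It is not the library's code. "simplifiedParseCss" is a hypothetical name, and the sketch ignores comments, quoted strings, nested (Less) blocks and everything else the real parser handles. The numeric values match the CharacterCategorisationOptions values described next. A regular enum is used so that the sketch runs on its own.

```typescript
// Numeric values match the library's CharacterCategorisationOptions.
enum CharacterCategorisationOptions {
  Comment = 0,
  CloseBrace = 1,
  OpenBrace = 2,
  SemiColon = 3,
  SelectorOrStyleProperty = 4,
  StylePropertyColon = 5,
  Value = 6,
  Whitespace = 7,
}

interface CategorisedCharacterString {
  Value: string;
  IndexInSource: number;
  CharacterCategorisation: CharacterCategorisationOptions;
}

// Hypothetical, simplified categoriser: no comments, strings or nested blocks.
function simplifiedParseCss(content: string): CategorisedCharacterString[] {
  const Cat = CharacterCategorisationOptions;
  const result: CategorisedCharacterString[] = [];
  let insideBraces = false; // between "{" and "}"
  let afterColon = false; // past a property's ":" but not yet at its ";"
  // Inside a block, the first ":" of each declaration splits property from value.
  const isPropertyColon = (ch: string) => ch === ":" && insideBraces && !afterColon;
  const isWhitespace = (ch: string) => /\s/.test(ch);

  let index = 0;
  while (index < content.length) {
    const start = index;
    const ch = content.charAt(index);
    let category: CharacterCategorisationOptions;
    if (isWhitespace(ch)) {
      // A run of whitespace becomes a single segment.
      while (index < content.length && isWhitespace(content.charAt(index))) index++;
      category = Cat.Whitespace;
    } else if (ch === "{") {
      index++;
      insideBraces = true;
      afterColon = false;
      category = Cat.OpenBrace;
    } else if (ch === "}") {
      index++;
      insideBraces = false;
      afterColon = false;
      category = Cat.CloseBrace;
    } else if (ch === ";") {
      index++;
      afterColon = false;
      category = Cat.SemiColon;
    } else if (isPropertyColon(ch)) {
      index++;
      afterColon = true;
      category = Cat.StylePropertyColon;
    } else {
      // Any other run is a selector, a property name or a value.
      while (
        index < content.length &&
        !/[\s{};]/.test(content.charAt(index)) &&
        !isPropertyColon(content.charAt(index))
      ) {
        index++;
      }
      category = insideBraces && afterColon ? Cat.Value : Cat.SelectorOrStyleProperty;
    }
    result.push({
      Value: content.substring(start, index),
      IndexInSource: start,
      CharacterCategorisation: category,
    });
  }
  return result;
}

for (const segment of simplifiedParseCss("body { color: red; }")) {
  console.log(JSON.stringify(segment.Value), segment.IndexInSource, segment.CharacterCategorisation);
}
```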

The CharacterCategorisation values come from an enum-like type in the library: an object named "CharacterCategorisationOptions" with properties named "Comment", "CloseBrace", "OpenBrace", etc. that are mapped onto numeric values. These values are ideal candidates for representation by the TypeScript "const enum" construct - and since there is a fixed set of values, it's no problem to include them explicitly in the type definition. (Note that "const enum" was introduced with TypeScript 1.4 and is not available in previous versions.)

This leads to the following:

declare module CssParser {
    export function ParseCss(content: string): CategorisedCharacterString[];

    export interface CategorisedCharacterString {
        Value: string;
        IndexInSource: number;
        CharacterCategorisation: CharacterCategorisationOptions;
    }

    export const enum CharacterCategorisationOptions {
        Comment = 0,
        CloseBrace = 1,
        OpenBrace = 2,
        SemiColon = 3,
        SelectorOrStyleProperty = 4,
        StylePropertyColon = 5,
        Value = 6,
        Whitespace = 7
    }
}

export = CssParser;

This is the first point at which the alternate "interface" approach that I mentioned earlier starts to fall apart. It is not possible to nest the enum within the interface (TypeScript will give you compile errors), and if the enum is not nested within the interface then it can't be exported from the module, so calling code can't access it.

To try to make this a bit clearer, what we could do is

interface CssParser {
    ParseCss(content: string): CategorisedCharacterString[];
}

interface CategorisedCharacterString {
    Value: string;
    IndexInSource: number;
    CharacterCategorisation: CharacterCategorisationOptions;
}

declare const enum CharacterCategorisationOptions {
    Comment = 0,
    CloseBrace = 1,
    OpenBrace = 2,
    SemiColon = 3,
    SelectorOrStyleProperty = 4,
    StylePropertyColon = 5,
    Value = 6,
    Whitespace = 7
}

declare var parser: CssParser;

export = parser;

and then we could consume this with

import parser = require("cssparser/CssParserJs");

var content = parser.ParseCss("body { color: red; }");

but we could not do something like

var firstFragmentIsWhitespace =
    (content[0].CharacterCategorisation === parser.CharacterCategorisationOptions.Whitespace);

since the "CharacterCategorisationOptions" type is not exported from the module.

Using the "declare module" approach allows us to nest the enum inside the module, so it is exported along with everything else and can be accessed by the calling code.
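Here is a runnable approximation of the difference. A namespace can hold both the enum and the function, so calling code reaches the enum's values through the same name it uses for "ParseCss". The "ParseCss" body is a hypothetical toy: it only separates whitespace runs from everything else and labels the rest as SelectorOrStyleProperty. It stands in for the real parser so that the comparison from the previous snippet can actually execute.

```typescript
namespace CssParserSketch {
  export enum CharacterCategorisationOptions {
    Comment = 0, CloseBrace = 1, OpenBrace = 2, SemiColon = 3,
    SelectorOrStyleProperty = 4, StylePropertyColon = 5, Value = 6, Whitespace = 7,
  }

  export interface CategorisedCharacterString {
    Value: string;
    IndexInSource: number;
    CharacterCategorisation: CharacterCategorisationOptions;
  }

  // Hypothetical toy stand-in: splits into whitespace / non-whitespace runs only.
  export function ParseCss(content: string): CategorisedCharacterString[] {
    const result: CategorisedCharacterString[] = [];
    const runs = /\s+|\S+/g;
    let match: RegExpExecArray | null;
    while ((match = runs.exec(content)) !== null) {
      result.push({
        Value: match[0],
        IndexInSource: match.index,
        CharacterCategorisation: /^\s/.test(match[0])
          ? CharacterCategorisationOptions.Whitespace
          : CharacterCategorisationOptions.SelectorOrStyleProperty,
      });
    }
    return result;
  }
}

// Calling code reaches the enum through the same name as the function.
const sketchParser = CssParserSketch;
const sketchFragments = sketchParser.ParseCss(" body { color: red; }");
const sketchFirstIsWhitespace =
  sketchFragments[0].CharacterCategorisation === sketchParser.CharacterCategorisationOptions.Whitespace;
console.log(sketchFirstIsWhitespace);
```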

The same applies to exporting nested classes. Which leads me on to the next part of the parser interface - if the parsing method encounters content that it cannot parse then it will throw a "ParseError". This error class has "name" and "message" properties like any other JavaScript Error, but it has an additional "indexInSource" property to indicate where the troublesome character(s) occurred.

The type definition now looks like

declare module CssParser {
    export function ParseCss(content: string): CategorisedCharacterString[];

    export interface CategorisedCharacterString {
        Value: string;
        IndexInSource: number;
        CharacterCategorisation: CharacterCategorisationOptions;
    }

    export const enum CharacterCategorisationOptions {
        Comment = 0,
        CloseBrace = 1,
        OpenBrace = 2,
        SemiColon = 3,
        SelectorOrStyleProperty = 4,
        StylePropertyColon = 5,
        Value = 6,
        Whitespace = 7
    }

    export class ParseError implements Error {
        constructor(message: string, indexInSource: number);
        name: string;
        message: string;
        indexInSource: number;
    }
}

export = CssParser;

There are complications around extending the Error object in both JavaScript and TypeScript, but I don't need to worry about them here since the library deals with them; all I need to do is describe the library's interface.

This type definition now supports the following consuming code -

import parser = require("cssparser/CssParserJs");

try {
    var content = parser.ParseCss("body { color: red; }");
    console.log("Parsed into " + content.length + " segment(s)");
}
catch (e) {
    if (e instanceof parser.ParseError) {
        var parseError = <parser.ParseError>e;
        console.log("ParseError at index " + parseError.indexInSource + ": " + parseError.message);
    }
    else {
        console.log(e.message);
    }
}
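The library deals with the awkward parts of subclassing Error itself. For anyone writing a similar class directly in TypeScript, here is one common approach. It is an assumption, not necessarily how the library does it. When compiling to ES5, a class that extends Error can fail the `instanceof` check used above unless its prototype is restored explicitly, and the `Object.setPrototypeOf` call does that. "describeFailure" is a hypothetical helper that mirrors the catch block above.

```typescript
class ParseError extends Error {
  indexInSource: number;

  constructor(message: string, indexInSource: number) {
    super(message);
    // Restore the prototype chain; needed for instanceof when targeting ES5.
    Object.setPrototypeOf(this, ParseError.prototype);
    this.name = "ParseError";
    this.indexInSource = indexInSource;
  }
}

// Hypothetical helper reporting a failure the way the consuming code above does.
function describeFailure(e: unknown): string {
  if (e instanceof ParseError) {
    // Narrowed: indexInSource is known to exist here.
    return "ParseError at index " + e.indexInSource + ": " + e.message;
  }
  return e instanceof Error ? e.message : String(e);
}

try {
  throw new ParseError("Unexpected closing brace", 5);
} catch (e) {
  console.log(describeFailure(e));
}
```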

The library has two other methods to expose yet. As well as "ParseCss" there is a "ParseLess" function - this applies slightly different rules, largely around the handling of comments (Less supports single line comments that start with "//" whereas CSS only allows the "/* .. */" format).

And then there is the "ExtendedLessParser.ParseIntoStructuredData" method. "ParseCss" and "ParseLess" do a very cheap pass through style content to try to break it down and categorise sections while "ParseIntoStructuredData" takes that data, processes it more thoroughly and returns a hierarchical representation of the styles.

The final type definition becomes

declare module CssParser {
    export function ParseCss(content: string): CategorisedCharacterString[];
    export function ParseLess(content: string): CategorisedCharacterString[];

    export module ExtendedLessParser {
        export function ParseIntoStructuredData(
            content: string | CategorisedCharacterString[],
            optionallyExcludeComments?: boolean
        ): CssFragment[];

        export interface CssFragment {
            FragmentCategorisation: FragmentCategorisationOptions;
            Selectors: string[];
            ParentSelectors: string[][];
            ChildFragments: CssFragment[];
            SourceLineIndex: number;
        }

        export const enum FragmentCategorisationOptions {
            Comment = 0,
            Import = 1,
            MediaQuery = 2,
            Selector = 3,
            StylePropertyName = 4,
            StylePropertyValue = 5
        }
    }

    export interface CategorisedCharacterString {
        Value: string;
        IndexInSource: number;
        CharacterCategorisation: CharacterCategorisationOptions;
    }

    export const enum CharacterCategorisationOptions {
        Comment = 0,
        CloseBrace = 1,
        OpenBrace = 2,
        SemiColon = 3,
        SelectorOrStyleProperty = 4,
        StylePropertyColon = 5,
        Value = 6,
        Whitespace = 7
    }

    export class ParseError implements Error {
        constructor(message: string, indexInSource: number);
        name: string;
        message: string;
        indexInSource: number;
    }
}

export = CssParser;

The "ExtendedLessParser.ParseIntoStructuredData" nested method is exposed as a function within a nested module. Similarly, the interface and enum for its return type are both nested in there. The method signature is somewhat interesting in that the library will accept either a string being passed into "ParseIntoStructuredData" or the result of a "ParseLess" call. TypeScript has support for this and the method signature indicates that it will accept either "string" or "CategorisedCharacterString[]" (this relies upon "union type" support that became available in TypeScript 1.4). There is also an optional argument to indicate that comments should be excluded from the return content, this is also easy to express in TypeScript (by including the question mark after the argument name).
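The sketch below shows how the union type and the optional argument behave on the implementing side. "toSourceText" is a hypothetical helper, not part of the library, that takes the same parameter list as "ParseIntoStructuredData". A `typeof` check narrows the union, and an omitted optional argument arrives as `undefined`. Comments can only be removed from the already-categorised form here, because turning a raw string into segments would need the real parser.

```typescript
enum CharacterCategorisationOptions {
  Comment = 0, CloseBrace = 1, OpenBrace = 2, SemiColon = 3,
  SelectorOrStyleProperty = 4, StylePropertyColon = 5, Value = 6, Whitespace = 7,
}

interface CategorisedCharacterString {
  Value: string;
  IndexInSource: number;
  CharacterCategorisation: CharacterCategorisationOptions;
}

// Hypothetical helper with the same parameters as ParseIntoStructuredData.
function toSourceText(
  content: string | CategorisedCharacterString[],
  optionallyExcludeComments?: boolean
): string {
  if (typeof content === "string") {
    // Narrowed to string: returned unchanged (excluding comments would need a parse).
    return content;
  }
  // Narrowed to CategorisedCharacterString[]; an omitted flag is undefined (falsy).
  return content
    .filter(
      (s) =>
        !(optionallyExcludeComments && s.CharacterCategorisation === CharacterCategorisationOptions.Comment)
    )
    .map((s) => s.Value)
    .join("");
}

const sampleSegments: CategorisedCharacterString[] = [
  { Value: "/* note */", IndexInSource: 0, CharacterCategorisation: CharacterCategorisationOptions.Comment },
  { Value: "body", IndexInSource: 10, CharacterCategorisation: CharacterCategorisationOptions.SelectorOrStyleProperty },
];

// Both argument forms compile against the same signature.
console.log(toSourceText("body { }"));
console.log(toSourceText(sampleSegments));
console.log(toSourceText(sampleSegments, true));
```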

Limitations

For the module at hand, this covers everything that I needed to do!

However, while reading up further on type definitions, I did come across one limitation that I think is unfortunate: there is no support for get-only properties on either interfaces or classes. For my CSS Parser, that isn't an issue because I didn't write it in a manner that enforced immutability. But if the CssFragment type (for example) were written with properties that only supported "get" then I might have wanted to write the interface as

interface CssFragment {
    get FragmentCategorisation(): FragmentCategorisationOptions;
    get Selectors(): string[];
    get ParentSelectors(): string[][];
    get ChildFragments(): CssFragment[];
    get SourceLineIndex(): number;
}

But this is not supported. You will get compile errors.

In fairness, this shouldn't be a surprise, since TypeScript does not support property accessors (get/set declarations) in interfaces in its regular code either. So it's not only within type definitions that it throws its toys out of the pram when you try to do this.

So, instead, you might try to represent that data with a class, since classes do support get-only properties in regular TypeScript. However, if you attempt to write

export class CssFragment {
    get FragmentCategorisation(): FragmentCategorisationOptions;
    get Selectors(): string[];
    get ParentSelectors(): string[][];
    get ChildFragments(): CssFragment[];
    get SourceLineIndex(): number;
}

then you would still receive a compile error

An accessor cannot be declared in an ambient context

Interestingly, this should also not be too surprising (although it surprised me until I looked into it!) since the following code is legal:

class ClassWithGetOnlyName {
    get name(): string {
        return "Jim";
    }
}

var example = new ClassWithGetOnlyName();
example.name = "Bob"; // Setting a property that only has a getter!
alert(example.name);

Here, the alert will show "Jim" since that is what the property getter returns. But it is not illegal to try to set the property. In non-strict code the "setting" action is effectively ignored, while strict-mode JavaScript throws a TypeError at runtime instead. So TypeScript doesn't support the notion of a "get-only" (or "readonly") property.

I think this is unfortunate, considering there are more and more libraries being released that incorporate immutability (Facebook released a library dedicated to immutable collections: immutable-js). There are issues in TypeScript's GitHub repo about this already, albeit with no ready solution available: see Compiler allows assignments to read-only properties and Suggestion: read-only modifier.

If you're writing a library from scratch that has immutable types, you can work around this by returning data from functions instead of properties, e.g.

class ClassWithGetOnlyName {
    getName(): string {
        return "Jim";
    }
}

var example = new ClassWithGetOnlyName();
alert(example.getName());

However, if you wanted to write a type definition for an existing library that was intended to return immutable types (that exposed the data through properties) then you would be unable to represent this in TypeScript. Which is a pity.

Which leaves me ending on a bum note when, otherwise, this exercise has been a success! So let's forget the downsides for now and celebrate the achievements instead! The CSS Parser JavaScript port is now available with a TypeScript definition file - hurrah! Everyone should now scurry off and download it from either npm (npmjs.com/package/cssparserjs) or bitbucket.org/DanRoberts/cssparserjs and get parsing!! :)

Posted at 19:19

TypeScript / ES6 classes for React components - without the hacks!

React 0.13 has just been released into beta, a release I've been eagerly anticipating! This is the release in which they finally support ES6 classes for creating React components. Fully supported, no messing about and jumping through hoops and hoping that breaking API changes don't drop in and catch you off guard.

Back in September, I wrote about Writing React components in TypeScript and realised, before I had actually posted it, that the version of React I was using was out of date. I would have to either re-work it all again or wait until ES6 classes were natively supported (which was on the horizon back then, it's just that there were no firm dates). I took the lazy option and have been sticking to React 0.10.. until now!

Update (16th March 2015): React 0.13 was officially released last week, so it's no longer in beta - this is excellent news! Very little appears to have changed since the beta, so everything here is still applicable.

Getting the new code

I've got my head around npm, which is the recommended way to get the source. I had a few teething problems a few months ago with first getting going (I need python?? Oh, not that version..) but now everything's rosy. So off I went:

npm install react@0.13.0-beta.1

I saw that the "lib" folder had the source code for the files, with the dependencies all nicely broken up. Then I had a small meltdown and stressed about how to build from source - did I need to run browserify or something?? I got that working, with some light hacking about, and got to playing around with it. It was only later that I realised there's also a "dist" folder with built versions - both production (i.e. minified) and development. Silly boy.

To start with, I stuck to vanilla JavaScript to play around with it (I didn't want to start getting confused as to whether any problems were with React or with TypeScript with React). The online JSX Compiler can perform ES6 translations as well as JSX, which meant that I could take the example

class HelloMessage extends React.Component {
    render() {
        return <div>Hello {this.props.name}</div>;
    }
}

React.render(<HelloMessage name="Sebastian" />, mountNode);

and translate it into JavaScript. The output creates a class, derives it from "React.Component" and shows what the JSX syntax hides, particularly the "React.createElement" call:

var ____Class1 = React.Component;
for (var ____Class1____Key in ____Class1) {
    if (____Class1.hasOwnProperty(____Class1____Key)) {
        HelloMessage[____Class1____Key] = ____Class1[____Class1____Key];
    }
}
var ____SuperProtoOf____Class1 = ____Class1 === null ? null : ____Class1.prototype;
HelloMessage.prototype = Object.create(____SuperProtoOf____Class1);
HelloMessage.prototype.constructor = HelloMessage;
HelloMessage.__superConstructor__ = ____Class1;

function HelloMessage() {
    "use strict";
    if (____Class1 !== null) {
        ____Class1.apply(this, arguments);
    }
}

HelloMessage.prototype.render = function() {
    "use strict";
    return React.createElement("div", null, "Hello ", this.props.name);
};

React.render(
    React.createElement(HelloMessage, { name: "Sebastian" }),
    mountNode
);

I put this into a test page and it worked! ("mountNode" just needs to be a container element - any div that you want to render your content inside).

The derive-class code isn't identical to what you see in TypeScript's output. If you've looked at what TypeScript emits, this might be familiar:

var __extends = this.__extends || function (d, b) {
    for (var p in b) if (b.hasOwnProperty(p)) d[p] = b[p];
    function __() { this.constructor = d; }
    __.prototype = b.prototype;
    d.prototype = new __();
};

I tried hacking this in, in place of the inheritance approach from the JSX Compiler and it still worked. I presumed it would, but it's always best to take baby steps if you don't understand it all perfectly - and I must admit that I've been a bit hazy on some of React's terminology around components, classes, elements, factories, whatever.. (despite having read "Introducing React Elements" what feels like a hundred times).
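If you're hazy on what that helper actually does, here is a typed transcription of it, run against a stand-in base class. "FakeComponent" is hypothetical and is not React's Component. The helper copies static members across, then points the derived prototype at an object whose own prototype is the base's prototype. As a result, instances pass `instanceof` checks for both constructors and inherit the base's members.

```typescript
// Typed transcription of TypeScript's ES5 __extends helper.
function extendsHelper(d: any, b: any): void {
  // Copy "static" members from the base constructor to the derived one.
  for (const p in b) if (b.hasOwnProperty(p)) d[p] = b[p];
  // An intermediate constructor whose prototype is the base prototype...
  function __(this: any) { this.constructor = d; }
  __.prototype = b.prototype;
  // ...so the derived prototype inherits from the base without calling it.
  d.prototype = new (__ as any)();
}

// Hypothetical base class standing in for React.Component.
function FakeComponent(this: any, props: any) { this.props = props; }
(FakeComponent as any).displayKind = "component"; // a "static" member
FakeComponent.prototype.isComponent = true;

function HelloMessage(this: any, props: any) {
  FakeComponent.call(this, props); // the equivalent of super(props)
}
extendsHelper(HelloMessage, FakeComponent);
HelloMessage.prototype.render = function (this: any) {
  return "Hello " + this.props.name;
};

const hello = new (HelloMessage as any)({ name: "Sebastian" });
console.log(hello.render());
```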

Another wrong turn

In the code above, the arrangement of the line

React.render(
    React.createElement(HelloMessage, { name: "Sebastian" }),
    mountNode
);

is very important. I must have spent hours earlier struggling with getting it working in TypeScript because I thought it was

React.render(
    React.createElement(new HelloMessage({ name: "Sebastian" })),
    mountNode
);

It's not.

It is not a new instance that is passed to "createElement"; it's a type and a properties object. I'm not sure where I got the idea that it was the other way around - perhaps because I got all excited about it working with classes and then presumed that you worked directly with instances of those classes. Doh.
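Here is a toy model of why "createElement" takes a type and a props object. It is not React's implementation, and every name in it is hypothetical. An element is only a description of what to render, and the renderer decides when to construct the component. Because "createToyElement" is typed to accept a constructor, passing it an instance would not even compile.

```typescript
// Toy component base class standing in for React.Component.
abstract class ToyComponent<P> {
  props: P;
  constructor(props: P) {
    this.props = props;
  }
  abstract render(): string;
}

// Anything that can be "new"-ed with props to produce a component.
type ToyComponentClass<P> = new (props: P) => ToyComponent<P>;

// An element is just a description: which class, and which props.
interface ToyElement<P> {
  type: ToyComponentClass<P>;
  props: P;
}

function createToyElement<P>(type: ToyComponentClass<P>, props: P): ToyElement<P> {
  return { type, props };
}

// The renderer, not the caller, creates the instance.
function renderToyElement<P>(element: ToyElement<P>): string {
  const instance = new element.type(element.props);
  return instance.render();
}

interface HelloProps { name: string; }

class ToyHelloMessage extends ToyComponent<HelloProps> {
  render() {
    return "Hello " + this.props.name;
  }
}

console.log(renderToyElement(createToyElement(ToyHelloMessage, { name: "Sebastian" })));
```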

Time for TypeScript!

Like I said, I've been clinging to my hacked-about way of getting TypeScript working with React until now (waiting until I could throw it away entirely, rather than replace it with something else.. which I would then have to throw away entirely when this release turned up). I took a lot of inspiration from code in the react-typescript repo. But that repo hasn't been kept up to date, I believe for the same reason as mine: the author knew that it would only be needed until ES6 classes were supported. There is a link there to typed-react, which seems to have been maintained for 0.12. This seemed like the best place to start.

Update (16th March 2015): With React 0.13's official release, the DefinitelyTyped repo has been updated and now does work with 0.13, I'm leaving the below section untouched for posterity but you might want to skip to the next section "Writing a TypeScript React component" if you're using the DefinitelyTyped definition.

In fact, after some investigation, very little needs changing. Starting with their React type definitions (from the file typings/react/react.d.ts), we need to expose the "React.Component" class but currently that is described by an interface. So the following must be changed -

interface Component<P> {
    getDOMNode<TElement extends Element>(): TElement;
    getDOMNode(): Element;
    isMounted(): boolean;
    props: P;
    setProps(nextProps: P, callback?: () => void): void;
    replaceProps(nextProps: P, callback?: () => void): void;
}

for this -

export class Component<P> {
    constructor(props: P);
    protected props: P;
}

I've removed isMounted and setProps because they've been deprecated in React. I've also removed the getDOMNode methods, since I think they expose more information than is necessary, and replaceProps, since I've not seen a use for it in the way that I've been using React. I think it makes more sense to request a full re-render* rather than poke things around. You may not agree with me on these, so feel free to leave them in! Similarly, I've changed the access level of "props" to protected, since I don't think that it should be public information. This requires TypeScript 1.3 (which introduced the "protected" modifier), which might be why the typed-react version doesn't specify it.

* When I say "re-render", I mean that when some action changes the state of the application, I call React.render again and let the Virtual DOM do its magic around making this efficient. Plus I'm experimenting at the moment with making the most of immutable data structures and returning false from shouldComponentUpdate where it's clear that the data can't have changed - so the Virtual DOM has less work to do. But that's straying from the point of this post a bit..
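The shouldComponentUpdate trick in that footnote works because immutability makes change detection cheap. If data is never mutated in place, you can test whether it is "unchanged" by comparing references instead of doing a deep comparison. Below is a minimal, framework-free sketch of such a check. The helper name is hypothetical. A shouldComponentUpdate implementation could return its negation.

```typescript
// Hypothetical helper: true if both props objects have the same keys and
// each value is the very same reference (no deep comparison).
function shallowEqual(a: Record<string, unknown>, b: Record<string, unknown>): boolean {
  const aKeys = Object.keys(a);
  const bKeys = Object.keys(b);
  if (aKeys.length !== bKeys.length) return false;
  return aKeys.every((key) => Object.prototype.hasOwnProperty.call(b, key) && a[key] === b[key]);
}

// With immutable data, an update produces a new object instead of mutating.
const listItems = Object.freeze(["a", "b"]);
const propsBefore = { title: "List", items: listItems };
const propsUnchanged = { title: "List", items: listItems };
const propsUpdated = { title: "List", items: Object.freeze([...listItems, "c"]) };

console.log(shallowEqual(propsBefore, propsUnchanged)); // same references: the update can be skipped
console.log(shallowEqual(propsBefore, propsUpdated)); // new array reference: re-render
```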

Then the external interface needs changing from

interface Exports extends TopLevelAPI {
    DOM: ReactDOM;
    PropTypes: ReactPropTypes;
    Children: ReactChildren;
}

to

interface Exports extends TopLevelAPI {
    DOM: ReactDOM;
    PropTypes: ReactPropTypes;
    Children: ReactChildren;
    Component: Component<any>;
}

Quite frankly, I'm not 100% sure why specifying "Component

Writing a TypeScript React component

So now we can write this:

import React = require('react');

interface Props { name: string; role: string; }

class PersonDetailsComponent extends React.Component<Props> {
    constructor(props: Props) {
        super(props);
    }

    public render() {
        return React.DOM.div(null, this.props.name + " is a " + this.props.role);
    }
}

function Factory(props: Props) {
    return React.createElement(PersonDetailsComponent, props);
}

export = Factory;

Note that we are able to specify a type param for "React.Component" and, when you edit this in TypeScript, "this.props" is correctly identified as being of that type.
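Here is a runnable sketch of what the modified declaration gives us. It uses a hypothetical local "SketchComponent" in place of React.Component, since React itself isn't loaded here. The type parameter flows through to "this.props", and marking "props" as protected keeps it readable from subclasses only. The last function is never called. It exists so that a type-checker can confirm, via `@ts-expect-error`, that access from outside the class is rejected.

```typescript
// Hypothetical stand-in for the modified React.Component declaration.
class SketchComponent<P> {
  protected props: P;
  constructor(props: P) {
    this.props = props;
  }
}

interface PersonProps { name: string; role: string; }

class PersonDetailsSketch extends SketchComponent<PersonProps> {
  describe(): string {
    // Allowed: subclasses can read the protected, correctly-typed props.
    return this.props.name + " is a " + this.props.role;
  }
}

// Never called; exists only so a type-checker can confirm outside access is rejected.
function readPropsFromOutside(component: PersonDetailsSketch): string {
  // @ts-expect-error "props" is protected, so code outside the class hierarchy can't read it
  return component.props.name;
}

console.log(new PersonDetailsSketch({ name: "Bob", role: "Mouse catcher" }).describe());
```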

Update (16th March 2015): If you are using the DefinitelyTyped definitions then you need to specify both "Props" and "State" type params (I recommend that Component State never be used and that it always be specified as "{}", but that's out of the scope of this post) - ie.

class PersonDetailsComponent extends React.Component<Props, {}> {

The pattern I've used is to declare a class that is not exported. Rather, a "Factory" function is made available to the world. This is to prevent the problem that I described earlier - originally I was exporting the class and was trying to call

React.render(
    React.createElement(new PersonDetailsComponent({
        name: "Bob",
        role: "Mouse catcher"
    })),
    mountNode
);

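but this does not work. The correct approach is to export a Factory method and then to consume the component thusly (here "PersonDetailsComponent" is the name under which the module's exported Factory function has been imported):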

React.render(
    PersonDetailsComponent({
        name: "Bob",
        role: "Mouse catcher"
    }),
    this._renderContainer
);

Thankfully, the render method is specified in the type definition as

render<P>(
    element: ReactElement<P>,
    container: Element,
    callback?: () => void
): Component<P>;

so if you forget to apply the non-exported-class / exported-Factory-method structure, and instead export the class, new one up and pass it to "React.render" directly, you get a compile error such as

Argument of type 'PersonDetailsComponent' is not assignable to parameter of type 'ReactElement<Props>'

I do love it when the compiler can pick up on your silly mistakes!

Update (16th March 2015): Again, there is a slight difference between the typed-react definition that I was originally using and the now-updated DefinitelyTyped repo version. With DefinitelyTyped, the render method is specified as:

render<P, S>(
    element: ReactElement<P>,
    container: Element,
    callback?: () => any
): Component<P, S>

but the meaning is much the same.

Migration plan

The hacky way I've been working until now did allow instances of component classes to be used, so migrating over is going to require some boring mechanical work to change them - and to add Factory methods to each component. But, since they all shared a common base class (the "ReactComponentBridge"), it also shouldn't be too much work to change those base classes to "React.Component" in one search-and-replace. And there aren't too many other breaking changes to worry about. I was using "setProps" earlier on in development but I've already gotten rid of all those - so I'm optimistic that moving over to 0.13 isn't going to be too big of a deal.

It's worth bearing in mind that 0.13 is still in beta at the moment, but it seems like the changes that I'm interested in here are unlikely to vary too much between now and the official release. So if I get cracking, maybe I can finish migrating not long after it's officially here - instead of being stuck a few releases behind!

Posted at 01:02

TypeScript classes for (React) Flux actions

I've been playing with React over the last few months and I'm still a fan. I've followed Facebook's advice and gone with the "Flux" architecture (there are so many good articles about this out there that I couldn't even decide which one to link to), but I've been writing the code using TypeScript. So far, most of my qualms with this approach have been with TypeScript rather than React. I don't like the closing-brace formatting that Visual Studio applies and doesn't let you change. TypeScript's generics system is really good but not quite as good as I'd like (not as good as C#'s, for example; I sometimes wish generic type params were available at runtime for testing, but I do understand why they're not). And I wish the "Allow implicit 'any' types" option defaulted to unchecked rather than checked (I presume this is to encourage "gradual typing", but if I'm using TypeScript I'd rather go whole-hog).

But what I thought were going to be the big problems with it haven't been, really - type definitions and writing the components (though I am using a bit of a hack that relies upon an older version of React - I'm hoping to change this when 0.13 comes out and introduces better support for ES6 classes).

Writing the components in "pure" TypeScript results in more code than jsx.. it's not the end of the world, but something that combined the benefits of both (strong typing and the succinct jsx format) would be wonderful. There are various possibilities that I believe people are looking into, from modifying the TypeScript compiler to support jsx to the work that Facebook themselves are doing around "Flow", which "Adds static typing to JavaScript to improve developer productivity and code quality". Neither of these is ready for me to integrate into Visual Studio, which I'm still using since I like it so much for my other development work.

What I want to talk about today, though, is one of the ways that TypeScript's capabilities can make a nice tweak to how the Flux architecture may be realised. Hopefully the following isn't blindingly obvious and well-known; I failed to find any other posts out there explaining it, so I'm going to try to take credit for it! :)

As recommended and apparently done by everyone..

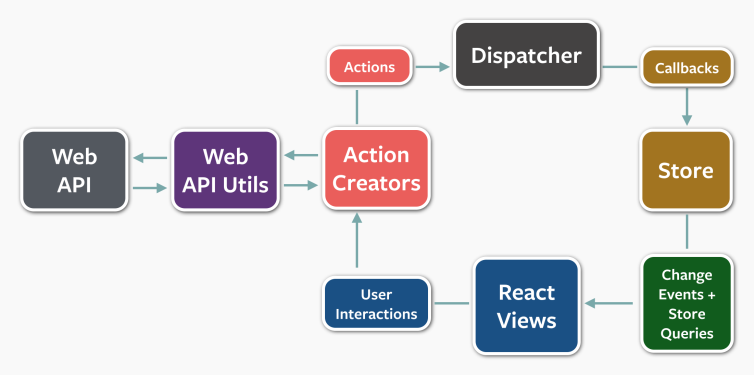

Here's the diagram that everyone who's looked into Flux will have seen many times before (since I've nicked it straight from the React blog's post about it) -

In the middle are the "Action Creators", which create objects that represent actions (and any associated data) so that the Dispatcher has something to send out. Stores listen for these actions - checking whether a given action is one that they're interested in and extracting the information from it as required.

As a concrete example, here is how actions are created in Facebook's "TODO" example (from their repo on GitHub):

/*
 * Copyright (c) 2014, Facebook, Inc.
 * All rights reserved.
 *
 * This source code is licensed under the BSD-style license found in the
 * LICENSE file in the root directory of this source tree. An additional grant
 * of patent rights can be found in the PATENTS file in the same directory.
 *
 * TodoActions
 */

var AppDispatcher = require('../dispatcher/AppDispatcher');
var TodoConstants = require('../constants/TodoConstants');

var TodoActions = {

  /**
   * @param {string} text
   */
  create: function(text) {
    AppDispatcher.dispatch({
      actionType: TodoConstants.TODO_CREATE,
      text: text
    });
  },

  /**
   * @param {string} id The ID of the ToDo item
   * @param {string} text
   */
  updateText: function(id, text) {
    AppDispatcher.dispatch({
      actionType: TodoConstants.TODO_UPDATE_TEXT,
      id: id,
      text: text
    });
  },

  /**
   * Toggle whether a single ToDo is complete
   * @param {object} todo
   */
  toggleComplete: function(todo) {
    var id = todo.id;
    if (todo.complete) {
      AppDispatcher.dispatch({
        actionType: TodoConstants.TODO_UNDO_COMPLETE,
        id: id
      });
    } else {
      AppDispatcher.dispatch({
        actionType: TodoConstants.TODO_COMPLETE,
        id: id
      });
    }
  },

  /**
   * Mark all ToDos as complete
   */
  toggleCompleteAll: function() {
    AppDispatcher.dispatch({
      actionType: TodoConstants.TODO_TOGGLE_COMPLETE_ALL
    });
  },

  /**
   * @param {string} id
   */
  destroy: function(id) {
    AppDispatcher.dispatch({
      actionType: TodoConstants.TODO_DESTROY,
      id: id
    });
  },

  /**
   * Delete all the completed ToDos
   */
  destroyCompleted: function() {
    AppDispatcher.dispatch({
      actionType: TodoConstants.TODO_DESTROY_COMPLETED
    });
  }

};

module.exports = TodoActions;

Every action has an "actionType" property. Some have an "id" property, some have a "text" property, some have both, some have neither. Other examples I've seen follow a similar pattern where the ActionCreator (or ActionCreators, since sometimes there are multiple - as in the chat example in that same Facebook repo) is what is responsible for knowing how data is represented by each action. Stores assume that if the "actionType" is what they expect then all of the other properties they expect to be associated with that action will be present.

Here's a snippet I've taken from another post:

var action = payload.action;
switch(action.actionType){
  case AppConstants.ADD_ITEM:
    _addItem(payload.action.item);
    break;
  case AppConstants.REMOVE_ITEM:
    _removeItem(payload.action.index);
    break;
  case AppConstants.INCREASE_ITEM:
    _increaseItem(payload.action.index);
    break;
  case AppConstants.DECREASE_ITEM:
    _decreaseItem(payload.action.index);
    break;
}

Some actions have an "item" property, some have an "index". The ActionCreator was responsible for correctly populating data appropriate to the "actionType".

Types, types, types

When I first started writing code like this for my own projects, it felt wrong. Wasn't I using TypeScript so that I had a nice reassuring type safety net to protect me against my own mistakes?

Side note: For me, this is one of the best advantages of "strong typing": the fact that the compiler can tell me if I've mistyped a property or argument, or, if I want to change the name of one of them, the tooling can change all references rather than it being a manual process. The other biggie for me is how beneficial it can be in helping document APIs (both internal and external) - for other people using my code.. or just me when it's been long enough that I can't remember all of the ins and outs of what I've written! These are more important to me than getting worried about whether "static languages" can definitely perform better than "dynamic" ones (let's not open that can of worms).

Surely, I asked myself, if these objects have properties that vary based upon an "actionType" magic string, these would be better expressed as actual types? Like classes?

Working from the example above, there would be classes such as:

```typescript
class AddItemAction {
    constructor(private _index: number) { }
    get index() {
        return this._index;
    }
}

export = AddItemAction;
```

I'm a fan of the AMD pattern so I would have a separate file per action class and then explicitly "import" (in TypeScript terms) them into Stores that reference them. The main reason I'm leaning towards the AMD pattern is that you can use require.js to load in the script required to render the first "page" and then dynamically load in additional script as more functionality of the application is used. This should avoid the risk of the dreaded multi-megabyte initial download (and the associated delays). I'm still proving this to myself - it's looking very promising so far but I haven't written any multi-megabyte applications yet!

I also like things to be immutable, otherwise the above could have been shortened even further to:

```typescript
class AddItemAction {
    constructor(public index: number) { }
}

export = AddItemAction;
```

But, technically, this could lead to one Store changing data in an action, which could affect what another Store does with the data. An effect that would only happen if that first Store received the action before the second one. Yuck. I don't imagine anyone would want to do something like that but immutability means that it's not even possible, even by accident (especially by accident).
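To make the immutability point concrete, here is a small sketch (the `tryToMutate` helper is hypothetical, standing in for a badly-behaved Store). With a getter-only property, TypeScript rejects `action.index = 99` at compile time, and even if that check is bypassed with an `any` cast, the value does not change at runtime. One caveat worth stating: `private` is only enforced by the compiler, so code that casts to `any` could still overwrite `_index` directly; the getter protects the public surface, not the raw field.

```typescript
// A getter-only property, in the style of the AddItemAction class above.
class AddItemAction {
    constructor(private _index: number) { }

    get index(): number {
        return this._index;
    }
}

// Hypothetical helper simulating a Store that tries to change an action's data.
// The cast to any sidesteps the compile-time "read-only property" error so the
// runtime behaviour can be observed as well.
function tryToMutate(action: AddItemAction): void {
    try {
        (action as any).index = 99;
    } catch {
        // In strict-mode code, assigning to a getter-only accessor throws a
        // TypeError; in sloppy mode the assignment is silently ignored.
    }
}

const action = new AddItemAction(3);
tryToMutate(action);
console.log(action.index); // 3
```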

So if there were classes for each action then the listening code would look more like this:

```typescript
if (action instanceof AddItemAction) {
    this._addItem(action);
}
if (action instanceof RemoveItemAction) {
    this._removeItem(action);
}
if (action instanceof IncreaseItemAction) {
    this._increaseItem(action);
}
if (action instanceof DecreaseItemAction) {
    this._decreaseItem(action);
}
```

I prefer to have the functions receive the actual action. The AddItemAction instance is passed to the "_addItem" function, for example, rather than just the "index" property value - eg.

```typescript
private _addItem(action: AddItemAction) {
    // Do whatever..
}
```

This is at least partly because it makes the type comparing code more succinct - the "action" reference will be of type "any" (as will be seen further on in this post) and so TypeScript lets us pass it straight in to methods such as _addItem since it presumes that if it's "any" then it can be used anywhere, even as a function argument that has a specific type annotation. The type check that is made before _addItem is called gives us the confidence that the data is appropriate to pass to _addItem, and the TypeScript compiler will then happily take our word for it.
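A minimal sketch of that type flow, with hypothetical store functions that just return strings so the behaviour is visible. In current TypeScript versions, as far as I know, an `instanceof` check also narrows an `any` value to the class type inside the branch, so the call into the typed function is genuinely checked rather than merely trusted. A plain object with the same shape is not an instance, so it falls through.

```typescript
class AddItemAction {
    constructor(public readonly index: number) { }
}

class RemoveItemAction {
    constructor(public readonly index: number) { }
}

// Hypothetical store methods; only the types matter here.
function _addItem(action: AddItemAction): string {
    return "add " + action.index;
}

function _removeItem(action: RemoveItemAction): string {
    return "remove " + action.index;
}

// "action" is typed as any, as it is on the dispatcher message. Each instanceof
// check decides which typed handler receives it.
function handle(action: any): string {
    if (action instanceof AddItemAction) {
        return _addItem(action);
    }
    if (action instanceof RemoveItemAction) {
        return _removeItem(action);
    }
    return "ignored";
}

console.log(handle(new AddItemAction(2)));    // add 2
console.log(handle(new RemoveItemAction(5))); // remove 5
console.log(handle({ index: 2 }));            // ignored
```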

Update (25th February 2015): A couple of people in the comments suggested that the action property on the payload should implement an interface to "mark" it as an action. This is something I considered originally but I dismissed it and I think I'm going to continue to dismiss it for the following reason: the interface would be "empty" since there is no property or method that all actions would need to share. If this were C# then every action class would have to explicitly implement this "empty interface" and so we could do things like search for all implementations of IAction within a given project or binary. In TypeScript, however, interfaces may be implemented implicitly ("TypeScript is structural"). This means that any object may be considered to have (implicitly) implemented IAction, if IAction is an empty interface. And this means that there would be no reliable way to search for implementations of IAction in a code base. You could search for classes that explicitly implement it, but if you have to rely upon people to follow the convention of decorating all action classes with a particular interface then you might as well rely on a simpler convention such as keeping all actions within files under an "action" folder.
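The structural-typing point is easy to demonstrate. In this sketch, `IAction` is the hypothetical empty marker interface from the comments and `describeAction` is an illustrative function that accepts one. Every call below compiles, because any value other than `null` or `undefined` satisfies an interface with no members, so the "marker" marks nothing.

```typescript
// The empty "marker" interface suggested in the comments.
interface IAction { }

class AddItemAction implements IAction {
    constructor(public readonly index: number) { }
}

// Accepts anything the compiler considers to be an IAction.
function describeAction(action: IAction): string {
    return typeof action;
}

const notReallyAnAction = { anything: "at all" };

// All of these type-check: none of them needs to declare "implements IAction".
const results = [
    describeAction(new AddItemAction(1)),
    describeAction(notReallyAnAction),
    describeAction("a plain string"),
    describeAction(42),
];

console.log(results.join(",")); // object,object,string,number
```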

Server vs User actions

Another concept that this works well with is one that I think I first read about on Atlassian's blog: Flux Step By Step - the idea of identifying a given action as originating from a view (from a user interaction, generally) or from the server (such as an ajax callback).

They suggested the use of an AppDispatcher with two distinct methods, each wrapping an action up with an appropriate "source" value -

```javascript
var AppDispatcher = copyProperties(new Dispatcher(), {

  /**
   * @param {object} action The details of the action, including the action's
   * type and additional data coming from the server.
   */
  handleServerAction: function(action) {
    var payload = {
      source: 'SERVER_ACTION',
      action: action
    };
    this.dispatch(payload);
  },

  /**
   * @param {object} action The details of the action, including the action's
   * type and additional data coming from the view.
   */
  handleViewAction: function(action) {
    var payload = {
      source: 'VIEW_ACTION',
      action: action
    };
    this.dispatch(payload);
  }

});
```

Again, these are "magic string" values. I like the idea, but TypeScript has the tools to do better.

I have a module with an enum for this:

```typescript
enum PayloadSources {
    Server,
    View
}

export = PayloadSources;
```

and then an AppDispatcher of my own -

```typescript
import Dispatcher = require('third_party/Dispatcher/Dispatcher');
import PayloadSources = require('constants/PayloadSources');
import IDispatcherMessage = require('dispatcher/IDispatcherMessage');

var appDispatcher = (function () {
    var _dispatcher = new Dispatcher();
    return {
        handleServerAction: function (action: any): void {
            _dispatcher.dispatch({
                source: PayloadSources.Server,
                action: action
            });
        },

        handleViewAction: function (action: any): void {
            _dispatcher.dispatch({
                source: PayloadSources.View,
                action: action
            });
        },

        register: function (callback: (message: IDispatcherMessage) => void): string {
            return _dispatcher.register(callback);
        },

        unregister: function (id: string): void {
            return _dispatcher.unregister(id);
        },

        waitFor: function (ids: string[]): void {
            _dispatcher.waitFor(ids);
        }
    };
} ());

// This is effectively a singleton reference, as seems to be the standard pattern for Flux
export = appDispatcher;
```

The IDispatcherMessage is very simple:

```typescript
import PayloadSources = require('constants/PayloadSources');

interface IDispatcherMessage {
    source: PayloadSources;
    action: any;
}

export = IDispatcherMessage;
```

This allows me to listen for actions with code thusly -

```typescript
AppDispatcher.register(message => {
    var action = message.action;
    if (action instanceof AddItemAction) {
        this._addItem(action);
    }
    if (action instanceof RemoveItemAction) {
        this._removeItem(action);
    }
    // etc..
});
```

Now, if I come across a good reason to rename the "index" property on the AddItemAction class, I can perform a refactor action that will fix it everywhere. If I don't use the IDE to perform the refactor, and just change the property name in one place, then I'll get TypeScript compiler errors about an "index" property that no longer exists.

The mysterious Dispatcher

One thing I skimmed over in the above is what the "third_party/Dispatcher/Dispatcher" component is. The simple answer is that I took the Dispatcher.js file from the Flux repo and messed about with it a tiny bit to get it to compile as TypeScript with my preferred disabling of the option "Allow implicit 'any' types". In case this is a helpful place for anyone to start, I've put the result up on pastebin as TypeScript Flux Dispatcher, along with the required support class TypeScript Flux Dispatcher - invariant support class.
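For anyone who hasn't looked inside the Flux Dispatcher, the part of its contract that the AppDispatcher wrapper relies on can be sketched in a few lines. `MiniDispatcher` is a hypothetical, deliberately tiny stand-in, not the Flux code: register a callback and get an ID back, unregister by ID, and every dispatch goes to every registered callback. The real Dispatcher also implements `waitFor` and, as far as I know, refuses to start a dispatch while another one is in progress; none of that is modelled here.

```typescript
// Hypothetical minimal dispatcher illustrating the register/dispatch contract.
class MiniDispatcher<TPayload> {
    private _callbacks: { [id: string]: (payload: TPayload) => void } = {};
    private _lastId = 1;

    // Returns an ID that can later be passed to unregister.
    register(callback: (payload: TPayload) => void): string {
        const id = "ID_" + this._lastId++;
        this._callbacks[id] = callback;
        return id;
    }

    unregister(id: string): void {
        delete this._callbacks[id];
    }

    // Every registered callback receives every payload, in registration order.
    dispatch(payload: TPayload): void {
        for (const id of Object.keys(this._callbacks)) {
            this._callbacks[id](payload);
        }
    }
}

// Usage: two listening "stores", one of which stops listening part way through.
const dispatcher = new MiniDispatcher<string>();
const received: string[] = [];
const firstId = dispatcher.register(p => { received.push("a:" + p); });
dispatcher.register(p => { received.push("b:" + p); });
dispatcher.dispatch("first");
dispatcher.unregister(firstId);
dispatcher.dispatch("second");
console.log(received.join(",")); // a:first,b:first,b:second
```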

Final notes

I'm still experimenting with React and Flux but this is one of the areas that I've definitely been happy with. I like the Flux architecture and the very clear way in which interactions are handled (and the clear direction of flow of information). Describing the actions with TypeScript classes feels very natural to me. It might be that I start grouping multiple actions into a single module as my applications get bigger, but for now I'm fine with one per file.

The one thing I'm only mostly happy with is my bold declaration in the AppDispatcher class; "This is effectively a singleton reference, as seems to be the standard pattern for Flux". It's not the class that's exported from that module, it's an instance of the AppDispatcher which is used by everything in the app. This makes sense in a lot of ways, since it needs to be used in so many places; there will be various Stores that register to listen to it but there are likely to be many, many React components, any one of which could accept some sort of interaction that requires an action be created (and so be sent to the AppDispatcher). One alternative approach would be to use dependency injection to pass an AppDispatcher through every component that might need it. In fact, I did try that in one early experiment but found it extremely cumbersome, so I'm happy to settle for what I've got here.

However, the reason (one of, at least!) that singletons got such a bad name is that they can make unit testing very awkward. I'm still in the early phases of investigating what I think is the best way to test a React / Flux application (there are a lot of articles out there explaining good ways to tackle it and I'm trying to work my way through some of their ideas). One thing that I'm contemplating, particularly for testing simple React components, is to take advantage of the fact that I'm using AMD everywhere and to try changing the require.js configuration for tests - for any given test, when an AppDispatcher is requested, some sort of mock object could be provided in its place.

This would have two main benefits: it could expose convenient methods to confirm that a particular action was raised following a given interaction (which may be the main point of that particular test), and there would be no shared state that needs resetting between tests, since each test would provide its own AppDispatcher stand-in. I've not properly explored this yet, it's still in the idea phase, but I think it also has promise. And - if it all goes to plan - it's another way for me to convince myself that AMD loading within TypeScript is the way to go!
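As a rough sketch of what such a stand-in might look like: `MockAppDispatcher` and its `wasRaised` method are hypothetical names, and the enum and interface are repeated inline so the block is self-contained. It mirrors the `handleServerAction` / `handleViewAction` surface of the appDispatcher above, but records messages instead of dispatching them. Wiring it in through the require.js configuration (for example via its `map` option) is left out here.

```typescript
// Inline copies of the shapes from the modules above.
enum PayloadSources {
    Server,
    View
}

interface IDispatcherMessage {
    source: PayloadSources;
    action: any;
}

// Hypothetical test stand-in: records every message so a test can inspect it.
// Each test creates its own instance, so nothing needs resetting between tests.
class MockAppDispatcher {
    readonly messages: IDispatcherMessage[] = [];

    handleServerAction(action: any): void {
        this.messages.push({ source: PayloadSources.Server, action: action });
    }

    handleViewAction(action: any): void {
        this.messages.push({ source: PayloadSources.View, action: action });
    }

    // Convenience check: was an action of the given class raised at all?
    wasRaised(actionClass: Function): boolean {
        return this.messages.some(m => m.action instanceof actionClass);
    }
}

// Example: a test would hand the mock to the component under test, simulate an
// interaction, and then make assertions against the mock.
class AddItemAction {
    constructor(public readonly index: number) { }
}

const mockDispatcher = new MockAppDispatcher();
mockDispatcher.handleViewAction(new AddItemAction(0));
console.log(mockDispatcher.wasRaised(AddItemAction)); // true
```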

Posted at 22:24

Writing React components in TypeScript

Whoops.. I started writing this post a while ago and have only just got round to finishing it off. Now I realise that it applies to React 0.10 but the code here doesn't work in the now-current 0.11. On top of this, hopefully this will become unnecessary when 0.12 is released. I talk about this in the last part of the post. But until 0.12 is out (and confirmed to address the problem), I'm going to stick to 0.10 and use the solution that I talk about here.

Update (29th January 2015): React 0.13 beta has been released and none of this workaround is required any more - I've written about it here: TypeScript / ES6 classes for React components - without the hacks!

I've been playing around with React recently, putting together some prototypes to try to identify any pitfalls in what I think is an excellent idea and framework, with a view to convincing everyone else at work that we should consider it for new products. I'm no JavaScript hater but I do strongly believe in strongly typed code being easier to maintain in the long run for projects of significant size. Let's not get into an argument about whether strong or "weak" typing is best - before we know it we could end up worrying about what strongly typed even means! If you don't agree with me then you probably don't see any merit to TypeScript and you probably already guessed that this post will not be of interest to you! :)

So I wanted to try bringing together the benefits of React with the benefits of TypeScript.. I'm clearly not the only one since there is already a type definition available in NuGet: React.TypeScript.DefinitelyTyped. This seems to be the recommended definition and appears to be in active development. I'd love it even more if there was an official definition from Facebook themselves (they have one for their immutable-js library) but having one here is a great start. This allows us to call methods in the React library and know what types the arguments should be and what they will return (and the compiler will tell us if we break these contracts by passing the wrong types or trying to mistreat the return values).

However, there are a few problems. Allow me to venture briefly back to square one..

Back to basics: A React component

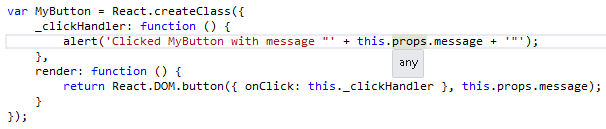

This is a very simple component in React -

```jsx
var MyButton = React.createClass({
    _clickHandler: function() {
        alert('Clicked MyButton with message "' + this.props.message + '"');
    },
    render: function() {
        return <button onClick={this._clickHandler}>{this.props.message}</button>;
    }
});
```

It's pretty boring but it illustrates a few principles. Firstly, it's written in "jsx" - a format like JavaScript but that needs some processing to actually become JavaScript. The <button> declaration looks like html, for example, and needs altering to become real JavaScript. If we're going to write components in TypeScript then we can't use this format since Visual Studio doesn't understand it (granted I'm making a bit of a leap assuming that you're using Visual Studio for this - it's not necessary, but I suspect most people writing TypeScript will use it since the TypeScript support is so good).

The good news is that the translation from "jsx" to JavaScript is not a complex one*. It results in slightly longer code but it's still easily readable (and writable). So the above would be, written in native JavaScript -

```javascript
var MyButton = React.createClass({
    _clickHandler: function() {
        alert('Clicked MyButton with message "' + this.props.message + '"');
    },
    render: function() {
        return React.DOM.button({ onClick: this._clickHandler }, this.props.message);
    }
});
```

* (It can do other clever stuff like translate "fat arrow" functions into JavaScript that is compatible with older browsers, but let's not get bogged down with that here - since I want to use TypeScript rather than jsx, it's not that relevant right now).

This simple example is illustrating something useful that can be taken for granted since React 0.4: autobinding. When "_clickHandler" is called, the "this" reference is bound to the component instance, so "this.props.message" is accessible. Before 0.4, you had to use the "React.autoBind" method - eg.

```javascript
var MyButton = React.createClass({
    _clickHandler: React.autoBind(function() {
        alert('Clicked MyButton with message "' + this.props.message + '"');
    }),
    render: function() {
        return React.DOM.button({ onClick: this._clickHandler }, this.props.message);
    }
});
```

but these days it just works as you would expect (or as you would hope, perhaps). This happened back in July 2013 - see New in React v0.4: Autobind by Default.
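To show what "autobinding" amounts to, here is a sketch using a hypothetical `bindAllMethods` helper (it is not React's implementation). It copies each method from the object's immediate prototype onto the instance, bound to that instance, so a method keeps its "this" when it is handed around as a bare callback, which is exactly what happens when it is passed as an `onClick` handler. It uses `Object.getOwnPropertyNames` rather than `for..in` because ES2015 class methods are non-enumerable.

```typescript
// Hypothetical helper: bind every method on the instance's immediate prototype
// to the instance itself (inherited methods further up the chain are skipped).
function bindAllMethods(instance: any): void {
    const proto = Object.getPrototypeOf(instance);
    for (const name of Object.getOwnPropertyNames(proto)) {
        const descriptor = Object.getOwnPropertyDescriptor(proto, name);
        // Only plain methods; skip the constructor and any getters/setters.
        if (name !== "constructor" && descriptor && typeof descriptor.value === "function") {
            instance[name] = descriptor.value.bind(instance);
        }
    }
}

class MyButton {
    constructor(public props: { message: string }) {
        bindAllMethods(this);
    }

    clickHandler(): string {
        return 'Clicked MyButton with message "' + this.props.message + '"';
    }
}

const button = new MyButton({ message: "Hello" });
const handler = button.clickHandler; // detached, as when passed as onClick
console.log(handler()); // Clicked MyButton with message "Hello"
```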

A TypeScript React component: Take 1

If we naively try to write TypeScript code that starts off with the JavaScript above then we find we have no intellisense. The editor has no idea about "this.props" - no idea that it is defined, certainly no idea that it has a property "message" that should be a string. This shouldn't really be a surprise since the "this" in this case is just an anonymous object that we're passing to "React.createClass"; no information about the type has been specified, so it is considered to be of type "any".

If we continue like this then we're going to miss out on the prime driver for using TypeScript in the first place - we might as well just write the components in JavaScript or "jsx"! (In fairness, this is something that I considered.. with React, and particularly the recommended Flux architecture, the "view components" are a relatively thin layer over components that could easily be written in TypeScript and so benefit from being strongly typed.. the view components could remain "more dynamic" and be covered by the kind of unit tests that are often written to catch the mistakes that strong typing would otherwise rule out).

The obvious thing to try was to have a TypeScript class along the lines of

```typescript
class MyButton {
    props: { message: string };

    private _clickHandler() {
        alert('Clicked MyButton with message "' + this.props.message + '"');
    }

    public render() {
        return React.DOM.button({ onClick: this._clickHandler }, this.props.message);
    }
}

var MyButtonReactComponent = React.createClass(new MyButton());
```

This would solve the internal type specification issue (where "this" is "any"). However, when the "React.createClass" function is called at runtime, an error is thrown..

Error: Invariant Violation: createClass(...): Class specification must implement a `render` method.

I'm not completely sure, but I suspect that the React framework code is expecting an object with a property that is a function named "render" while the class instance passed to it has a function "render" on its prototype rather than a property on the reference itself.
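The difference being suspected here is easy to see in isolation. In this sketch (a cut-down copy of the "Take 1" class, not React code), `render` is reachable through the prototype chain but is not an own property of the instance, so any code that only inspects an object's own keys will not find it. Whether React 0.10's `createClass` inspects the specification in exactly this way is the author's suspicion, not something this sketch verifies.

```typescript
// A cut-down version of the "Take 1" class: render is declared as a method.
class MyButton {
    props: { message: string } = { message: "Click Me" };

    render(): string {
        return this.props.message;
    }
}

const instance = new MyButton();

// Reachable through the prototype chain...
console.log(typeof instance.render); // function
// ...but not an own property of the instance.
console.log(instance.hasOwnProperty("render")); // false
// Object.keys lists own enumerable properties only: "props" but not "render".
console.log(Object.keys(instance).indexOf("render")); // -1
console.log(MyButton.prototype.hasOwnProperty("render")); // true
```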

Looking for help elsewhere

When I got to this point, I figured that someone else must have encountered the same problem - particularly since there exists this TypeScript definition for React in the first place! I came across a GitHub project React TypeScript which describes itself as a

React wrapper to make it play nicely with typescript.

An example in the README shows

```typescript
import React = require('react');
import ReactTypescript = require('react-typescript');

class HelloMessage extends ReactTypescript.ReactComponentBase<{ name: string; }, {}> {
    render() {
        return React.DOM.div(null, 'Hello ' + this.props.name);
    }
}

React.renderComponent(new HelloMessage({ name: 'Jhon' }), mountNode);
```

which looks like exactly what I want!

The problems are that it clearly states..

warning: ReactTypescript can actually only be used with commonjs modules and browserify, if someone does want AMD I'll gladly accept any PR that would packages it for another format.

.. and I'm very interested in using AMD and require.js to load modules "on demand" (so that if I develop a large app then I have a way to prevent the "megabyte-plus initial JavaScript download").

Also, I'm concerned that the maintained TypeScript definition that I referenced earlier claims to be

Based on TodoMVC sample by @fdecampredon, improved by @wizzard0, MIT licensed.

fdecampredon is the author of this "React TypeScript" repo.. which hasn't been updated in seven months. So I'm concerned that the definitions might not be getting updated here - there are already a lot of differences between the react.d.ts in this project and that in the maintained NuGet package's react.d.ts.

In addition to this, the README states that

In react, methods are automatically bound to a component, this is not the case when using ReactTypeScript, to activate this behaviour you can use the autoBindMethods function of ReactTypeScript

This refers to what I talked about earlier; the "auto-binding" convenience to make writing components more natural. There are two examples of ways around this. You can use the ReactTypeScript library's "autoBindMethods" function -

```typescript
class MyButton extends ReactTypeScript.ReactComponentBase<{ message: string}, any> {
    clickHandler(event: React.MouseEvent) {
        alert(this.props.message);
    }
    render() {
        return React.DOM.button({ onClick: this.clickHandler }, 'Click Me');
    }
}

// If this isn't called then "this.props.message" will error in clickHandler as "this" is not
// bound to the instance of the class
ReactTypeScript.autoBindMethods(MyButton);
```

or you can use the TypeScript "fat arrow" to bind the function to the "this" reference that you would expect:

```typescript
class MyButton extends ReactTypeScript.ReactComponentBase<{ message: string}, any> {
    // If the fat arrow isn't used for the clickHandler definition then "this.props.message" will
    // error in clickHandler as "this" is not bound to the instance of the class
    clickHandler = (event: React.MouseEvent) => {
        alert(this.props.message);
    }
    render() {
        return React.DOM.button({ onClick: this.clickHandler }, 'Click Me');
    }
}
```

The first approach feels a bit clumsy, since you must always remember to call this method for every component class. The second approach doesn't feel too bad, it's just a case of being vigilant and always using fat arrows - but if you forget, you won't find out until runtime. Considering that I want to use TypeScript to catch more errors at compile time, this still doesn't feel ideal.
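The "you won't find out until runtime" problem can be reproduced without React at all. In this sketch, `invoke` is a hypothetical stand-in for React calling an `onClick` handler as a bare function. Both classes compile without complaint; only the fat-arrow version still has the right "this" when the handler is detached from its instance.

```typescript
class WithMethod {
    constructor(public props: { message: string }) { }

    // Lives on the prototype; "this" depends on how the function is called.
    clickHandler(): string {
        return this.props.message;
    }
}

class WithArrow {
    constructor(public props: { message: string }) { }

    // An instance property holding an arrow function; "this" is captured
    // lexically when the instance is constructed.
    clickHandler = (): string => {
        return this.props.message;
    };
}

// Hypothetical stand-in for React invoking an event handler as a bare function.
function invoke(handler: () => string): string {
    return handler();
}

const arrowResult = invoke(new WithArrow({ message: "arrow" }).clickHandler);

let methodError = "";
try {
    invoke(new WithMethod({ message: "method" }).clickHandler);
} catch (e) {
    // "this" is not the instance, so reading this.props.message throws a TypeError.
    methodError = (e as Error).name;
}

console.log(arrowResult); // arrow
console.log(methodError); // TypeError
```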

The final concern I have is that the library includes a large-ish react-internal.js file. What I'm going to suggest further down does unfortunately dip its toe into React's (undocumented) internals but I've tried to keep it to the bare minimum. This "react-internal.js" worries me as it might be relying on a range of implementation details, any of which (as far as I know) could potentially change and break my code.

In case I'm sounding down on this library, I don't mean to be - I've tried it out and it does actually work, and there are not a lot of successful alternatives out there. So I've got plenty of respect for this guy, getting his hands dirty and inspiring me to follow in his footsteps!

Stealing Taking inspiration - A TypeScript React component: Take 2

So I want a way to

- Write a TypeScript class that can be used as a React component

- Use the seemingly-maintained NuGet-delivered type definition and limit access to the "internals" as much as possible

- Have the component's methods always be auto-bound

I'd better say this up-front, though: I'm willing to sacrifice the support for mixins here.

fdecampredon's "React TypeScript" library does support mixins so it's technically possible but I'm not convinced at this time that they're worth the complexity required by the implementation since I don't think they fit well with the model of a TypeScript component.

The basic premise is that you can name mixin objects which are "merged into" the component code, adding properties such as functions that may be called by the component's code. Since TypeScript wouldn't be aware of the properties added by mixins, it would think that there were missing methods / properties and flag them as errors if they were used within the component.

On top of this, I've not been convinced by the use cases for mixins that I've seen so far. In the official React docs section about mixins, it uses the example of a timer that is automatically cleared when the component is unmounted. There's a question on Stack Overflow "Using mixins vs components for code reuse in Facebook React" where the top answer talks about using mixins to perform common form validation work to display errors or enable or disable inputs by directly altering internal state on the component. As I understand the Flux architecture, the one-way message passing should result in validation being done in the store rather than the view / component. This allows the validation to exist in a central (and easily-testable) location and to not exist in the components. This also goes for the timer example, the logic-handling around whatever events are being raised on a timer should not exist within the components.

What I have ended up with is this:

```typescript
import React = require('react');

// The props and state references may be passed in through the constructor
export class ReactComponentBase<P, S> {
    constructor(props?: P, state?: S) {
        // Auto-bind methods on the derived type so that the "this" reference is as expected
        // - Only do this the first time an instance of the derived class is created
        var autoBoundTypeScriptMethodsFlagName = '__autoBoundTypeScriptMethods';
        var autoBindMapPropertyName = '__reactAutoBindMap'; // This is an internal React value
        var cp = this['constructor'].prototype;
        var alreadyBoundTypeScriptMethods = (cp[autoBoundTypeScriptMethodsFlagName] === true)
            && cp.hasOwnProperty(autoBoundTypeScriptMethodsFlagName);
        if (!alreadyBoundTypeScriptMethods) {
            var autoBindMap = {};
            var parentAutoBindMap = cp[autoBindMapPropertyName];
            var functionName;
            if (parentAutoBindMap) {
                // Maintain any binding from an inherited class (if the current class being dealt
                // with doesn't directly inherit from ReactComponentBase)
                for (functionName in parentAutoBindMap) {
                    autoBindMap[functionName] = parentAutoBindMap[functionName];
                }
            }
            for (functionName in cp) {
                if (!cp.hasOwnProperty(functionName) || (functionName === "constructor")) {
                    continue;
                }
                var fnc = cp[functionName];
                if (typeof (fnc) !== 'function') {
                    continue;
                }
                autoBindMap[functionName] = fnc;
            }
            cp[autoBindMapPropertyName] = autoBindMap;
            cp[autoBoundTypeScriptMethodsFlagName] = true;
        }
        this['construct'].apply(this, arguments); // This is an internal React method
    }

    props: P;
    state: S;
}

ReactComponentBase.prototype = React.createClass({
    // The component must share the "componentConstructor" that is present on the prototype of
    // the return values from React.createClass
    render: function () {
        return null;
    }
})['componentConstructor'].prototype; // Also an internal React method

// This must be used to mount component instances to avoid errors due to the type definition
// expecting a React.ReactComponent rather than a ReactComponentBase (though the latter is
// able to masquerade as the former and when the TypeScript compiles down to JavaScript,
// no-one will be any the wiser)
export function renderComponent<P, S>(
    component: ReactComponentBase<P, S>,
    container: Element,
    callback?: () => void) {

    var mountableComponent = <React.ReactComponent<any, any>><any>component;
    React.renderComponent(
        mountableComponent,
        container,
        callback
    );
}
```

This allows the following component to be written:

```typescript
import React = require('react');
import ReactComponentBridge = require('components/ReactComponentBridge');

class MyButton extends ReactComponentBridge.ReactComponentBase<{ message: string }, any> {
    myButtonClickHandler(event: React.MouseEvent) {
        alert('Clicked MyButton with message "' + this.props.message + '"');
    }
    render() {
        return React.DOM.button({ onClick: this.myButtonClickHandler }, 'Click Me');
    }
}

export = MyButton;
```

which may be rendered with:

```typescript
import ReactComponentBridge = require('components/ReactComponentBridge');
import MyButton = require('components/MyButton');

ReactComponentBridge.renderComponent(
    new MyButton({ message: 'Click Me' }),
    document.getElementById('container')
);
```

Hurrah! Success! All is well with the world! I've got the benefits of TypeScript and the benefits of React and the Flux architecture (ok, the last one doesn't need any of this or even require React - it could really be used with whatever framework you choose). There's just one thing..

I'm out of date

Like I said at the start of this post, as I got to rounding it out to publish, I realised that I wasn't on the latest version of React (currently 0.11.2, while I was still using 0.10) and that this code didn't actually work on that version. Sigh.

However, the good news is that it sounds like 0.12 (still in alpha at the moment) is going to make things a lot easier. The changes in 0.11 appear to be paving the way for 0.12 to shake things up a bit. Changes are documented at New React Descriptor Factories and JSX which talks about how the problem they're trying to solve with the new code is a

Simpler API for ES6 classes

.. and there is a note in the react-future GitHub repo ("Specs & docs for potential future and experimental React APIs and JavaScript syntax") that

A React component module will no longer export a helper function to create virtual elements. Instead it's the responsibility of the consumer to efficiently create the virtual element.

Languages that compile to JS can choose to implement the element signature in whatever way is idiomatic for that language:

TypeScript implements some ES6 features (such as classes, which are how I want to represent React components) so (hopefully) this means that soon-to-hit versions of React are going to make ES6-classes-for-components much easier (and negate the need for a workaround such as is documented here).

The articles that I've linked to (I'm not quite sure how official that all is, btw!) are talking about a future version since they refer to the method "React.createFactory", which isn't available in 0.11.2. I have cloned the in-progress master repo from github.com/facebook/react and built the 0.12-alpha code* and that does have that method. However, I haven't yet managed to get it working as I was hoping. I only built it a couple of hours ago, though, and I want to get this post rounded out rather than let it drag on any longer! And when this mechanism for creating React components is made available, I'm sure a lot of information will be released about it!

* (npm is a great tool but it still can't make everything easy.. first I didn't realise that the version of node.js I was using was out of date and it prevented some dependencies from being installed. Then I had to install Python - but 2.7 was required, I found out, after I'd installed 3.4. Then I didn't have Git installed on the computer I was trying to build React from. Then I had to mess about with setting environment variables for the Python and Git locations. But it did work, and when I think about how difficult it would have been without a decent package manager I stop feeling the need to complain about it too much :)

Posted at 23:12

The C# CSS Parser in JavaScript

I was talking last month (in JavaScript dependencies that work with Brackets, Node and in-browser) about Adobe Brackets and how much I'd been enjoying giving it a try - and how its extensions are written in JavaScript.

Well, this made me ambitious and set me wondering whether I could write an extension that would lint LESS stylesheets according to the rules I proposed last year in "Non-cascading CSS: A revolution!" - rules which have now been put into use on some major UK tourism destination websites through my subtle influence at work (and, granted, the Web Team Leader's enthusiasm.. but it's my blog so I'm going to try to take all the credit I can :) We have a LESS processor that applies these rules, the only problem is that it's written in C# and so can't easily be used by the Brackets editor.

But in the past I've rewritten my own full text-indexer into JavaScript so translating my C# CSSParser shouldn't be too big of a thing. The main processing is described by a state machine - I published a slightly rambling explanation in my post Parsing CSS which I followed up with C# State Machines, that talks about the same topic but in a more focused manner. This made things really straightforward for translation.

When parsing content and categorising a sequence of characters as a Comment or a StylePropertyValue or whatever else, there is a class that represents the current state and knows what character(s) may result in a state change. For example, a single-line-comment processor only has to look out for a line return, at which point it hands control back to whichever processor was active before the comment started. A multi-line comment will be looking out for the characters "*/". A StylePropertyValue will be looking out for a semi-colon or a closing brace, but it also needs to look for quote characters that indicate the start of a quoted section - within this quoted content, semi-colons and closing braces do not indicate the end of the content; only a matching end quote does. When this closing quote is encountered, the logic reverts to looking for a semi-colon or closing brace.
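To make the quoted-section behaviour concrete, here is a minimal JavaScript sketch of just the StylePropertyValue part of that state machine. It is not the CSS Parser's code: the function name findEndOfPropertyValue, and the approach of representing each state as a function that returns the next state, are assumptions for illustration.

```javascript
// Hypothetical sketch: find the end of a style property value using two states.
// Outside quotes, ";" or "}" ends the value; inside quotes, only the matching
// closing quote changes state. Escaped quotes are NOT handled.
function findEndOfPropertyValue(content, startIndex) {
    var quoteCharacter = null;
    var state;
    var index;

    // Unquoted state: look for a terminator or the start of a quoted section
    function unquoted(character) {
        if ((character === ";") || (character === "}")) {
            return null; // null signals that the value has ended
        }
        if ((character === "\"") || (character === "'")) {
            quoteCharacter = character;
            return quoted;
        }
        return unquoted;
    }

    // Quoted state: ";" and "}" are just content until the matching quote
    function quoted(character) {
        if (character === quoteCharacter) {
            quoteCharacter = null;
            return unquoted;
        }
        return quoted;
    }

    state = unquoted;
    for (index = startIndex; index < content.length; index += 1) {
        state = state(content.charAt(index));
        if (state === null) {
            return index; // position of the terminating ";" or "}"
        }
    }
    return -1; // content ended before a terminator was found
}
```

For example, findEndOfPropertyValue("url('a;b}c'); color: red;", 0) returns 12, the position of the semi-colon after the closing parenthesis, because the ";" and "}" inside the quotes are treated as content. A real processor would also have to deal with escaped quotes, which this sketch ignores.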

Each processor is self-contained and most of them contain very little logic, so it was possible to translate them by just taking the C# code, pasting it into a JavaScript file, altering the structure to be JavaScript-esque and removing the types. As an example, this C# class

public class SingleLineCommentSegment : IProcessCharacters
{
    private readonly IGenerateCharacterProcessors _processorFactory;
    private readonly IProcessCharacters _characterProcessorToReturnTo;
    public SingleLineCommentSegment(
        IProcessCharacters characterProcessorToReturnTo,
        IGenerateCharacterProcessors processorFactory)
    {
        if (processorFactory == null)
            throw new ArgumentNullException("processorFactory");
        if (characterProcessorToReturnTo == null)
            throw new ArgumentNullException("characterProcessorToReturnTo");

        _processorFactory = processorFactory;
        _characterProcessorToReturnTo = characterProcessorToReturnTo;
    }

    public CharacterProcessorResult Process(IWalkThroughStrings stringNavigator)
    {
        if (stringNavigator == null)
            throw new ArgumentNullException("stringNavigator");

        // For single line comments, the line return should be considered part of the comment content
        // (in the same way that the "/*" and "*/" sequences are considered part of the content for
        // multi-line comments)
        var currentCharacter = stringNavigator.CurrentCharacter;
        // Peek at the following character (assumes IWalkThroughStrings exposes a "Next" navigator);
        // reading CurrentCharacter twice would mean a "\r\n" pair could never be detected
        var nextCharacter = stringNavigator.Next.CurrentCharacter;
        if ((currentCharacter == '\r') && (nextCharacter == '\n'))
        {
            return new CharacterProcessorResult(
                CharacterCategorisationOptions.Comment,
                _processorFactory.Get<SkipCharactersSegment>(
                    CharacterCategorisationOptions.Comment,
                    1,
                    _characterProcessorToReturnTo
                )
            );
        }
        else if ((currentCharacter == '\r') || (currentCharacter == '\n'))
        {
            return new CharacterProcessorResult(
                CharacterCategorisationOptions.Comment,
                _characterProcessorToReturnTo
            );
        }
        return new CharacterProcessorResult(CharacterCategorisationOptions.Comment, this);
    }
}

becomes

var getSingleLineCommentSegment = function (characterProcessorToReturnTo) {
    var processor = {
        Process: function (stringNavigator) {
            // For single line comments, the line return should be considered part of the comment content
            // (in the same way that the "/*" and "*/" sequences are considered part of the content for
            // multi-line comments)
            if (stringNavigator.DoesCurrentContentMatch("\r\n")) {
                return getCharacterProcessorResult(
                    CharacterCategorisationOptions.Comment,
                    getSkipNextCharacterSegment(
                        CharacterCategorisationOptions.Comment,
                        characterProcessorToReturnTo
                    )
                );
            } else if ((stringNavigator.CurrentCharacter === "\r")
                    || (stringNavigator.CurrentCharacter === "\n")) {
                return getCharacterProcessorResult(
                    CharacterCategorisationOptions.Comment,
                    characterProcessorToReturnTo
                );
            }
            return getCharacterProcessorResult(
                CharacterCategorisationOptions.Comment,
                processor
            );
        }
    };
    return processor;
};

I made a few concessions in the translation. Firstly, I tend to be very strict with input validation in C# (I long for a world where I can replace it all with code contracts, but the last time I looked into the .NET work done on that front it didn't feel quite ready) and try to rely on rich types to make the compiler work for me as much as possible (in both documenting intent and catching silly mistakes I may make). But in JavaScript we have no types to rely on, and it seems like the level of input validation that I would perform in C# would be very difficult to replicate as reliably without them. Maybe I'm rationalising, but while searching for a precedent for this sort of thing, I came across the article Error Handling in Node.js, which distinguishes between "operational" and "programmer" errors and states that

Programmer errors are bugs in the program. These are things that can always be avoided by changing the code. They can never be handled properly (since by definition the code in question is broken).

One example in the article is

passed a "string" where an object was expected

Since the "getSingleLineCommentSegment" function shown above is private to the CSS Parser class that I wrote, it holds true that any invalid arguments passed to it would be programmer error. So in the JavaScript version, I've been relaxed about this kind of validation. Not, mind you, that this means I intend to start doing the same thing in my C# code - I still think that, where static analysis is possible, every effort should be made to document in the code what is right and what is wrong. And while argument validation exceptions can't contribute to static analysis (without relying on some of the clever stuff that I believe is in the code contracts work that Microsoft has done), I do still see them as documentation for pre-compile-time.

Another concession I made was that, in the C# version, I went to some effort to ensure that processors could be re-used if their configuration was identical - so there wouldn't have to be a new instance of a SingleLineCommentSegment processor for every single-line comment encountered. A "processorFactory" would only new up an instance if there wasn't already an existing instance that could be used. This was really an optimisation intended for parsing huge amounts of content, as were some of the other decisions made in the C# version - such as the strict use of IEnumerable with only very limited read-ahead (so if the input was being read from a stream, for example, only a very small part of the stream's data need be in memory at any one time). For the JavaScript version, I only imagine it being used to validate a single file, and if that entire file can't be held as an array of characters by the editor then I think there are bigger problems afoot!
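The reuse idea can be sketched in a few lines of JavaScript. This is a hypothetical illustration rather than a translation of the C# processorFactory: createProcessorFactory, its get method and the string configuration keys are all invented names.

```javascript
// Hypothetical sketch of the instance-reuse idea behind a "processorFactory":
// a processor is created the first time a configuration is requested and the
// same instance is handed back for later requests with that configuration.
// This is only safe because the processors hold no mutable state.
function createProcessorFactory() {
    var cache = {};

    return {
        // configurationKey must uniquely describe everything the processor depends on
        get: function (configurationKey, createProcessor) {
            if (!Object.prototype.hasOwnProperty.call(cache, configurationKey)) {
                cache[configurationKey] = createProcessor();
            }
            return cache[configurationKey];
        }
    };
}
```

The catch is that the key has to capture every piece of configuration (for a single-line-comment processor, that includes which processor to return to), which is exactly the sort of bookkeeping that only pays off when parsing very large inputs.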

So the complications around the "processorFactory" were skipped and the content was internally represented by a string that was the entire content. (Since the processor format expects a "string navigator" that reads a single character at a time, the JavaScript version has an equivalent object but internally this has a reference to the whole string, whereas the C# version did lots of work to deal with streams or any other enumerable source*).

* (If you have time to kill, I wrote a post last year.. well, more of an essay.. about how the C# code could access a TextReader through an immutable interface wrapper - internally an event was required on the implementation and if you've ever wanted to know the deep ins and outs of C#'s event system, how it can appear to cause memory leaks and what crazy hoops can be jumped through or avoided then you might enjoy it! See Auto-releasing Event Listeners).

Fast-forward a bit..

The actual details of translating the code aren't that interesting; it really was largely by rote, with the biggest problem being concentrating hard enough that I didn't make silly mistakes. The optional second stage of processing - which takes categorised strings (Comment, StylePropertyName, etc..) and translates them into the hierarchical data that a LESS stylesheet describes - used bigger functions with messier logic, rather than the state machine of the first parsing phase, but it still wasn't particularly complicated and so the same approach to translation was used.
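As a rough idea of what that second stage involves, the sketch below nests flat segments into blocks using a stack, producing "Selectors" and "ParentSelectors" arrays in the same spirit as the structured data the parser produces. The segment format ({ Type, Value }) and the name buildHierarchy are assumptions for illustration; the real code also tracks fragment categorisations and source line indexes, which are left out here.

```javascript
// Hypothetical sketch: turn a flat list of categorised segments into nested
// selector blocks using a stack. The { Type, Value } segment shape is assumed.
function buildHierarchy(segments) {
    var root = { Selectors: null, ChildFragments: [] };
    var stack = [root];
    var pendingSelectors = [];

    segments.forEach(function (segment) {
        var current = stack[stack.length - 1];
        var block;

        if (segment.Type === "Selector") {
            // "div.w1, div.w2" -> [ "div.w1", "div.w2" ]
            pendingSelectors = segment.Value.split(",").map(function (selector) {
                return selector.trim();
            });
        } else if (segment.Type === "OpenBrace") {
            block = {
                Selectors: pendingSelectors,
                // selectors of every enclosing block, outermost first (root excluded)
                ParentSelectors: stack.slice(1).map(function (parent) {
                    return parent.Selectors;
                }),
                ChildFragments: []
            };
            current.ChildFragments.push(block);
            stack.push(block);
            pendingSelectors = [];
        } else if (segment.Type === "CloseBrace") {
            if (stack.length > 1) {
                stack.pop(); // unbalanced closing braces are ignored
            }
        } else {
            // anything else (property names, values, comments) becomes a leaf
            current.ChildFragments.push({ Type: segment.Type, Value: segment.Value });
        }
    });

    return root.ChildFragments;
}
```

The stack is what lets each block know its ancestors' selectors without any back-references, which is the information a nested LESS selector needs in order to be resolved.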

One thing I did get quite into was making sure that I followed all of JSLint's recommendations, since Brackets highlights every rule that you break by default. I touched on JSLint last time (in JavaScript dependencies that work with Brackets, Node and in-browser) - I really like what Google did with Go in having a formatter that dictates how the code should be laid out, and so having JSLint shout at me for having braces on the wrong line meant that I stuck to a standard and didn't have to worry about it. I didn't inherently like having an "else" start on the same line as the closing brace of the previous condition, but if that's the way that everyone using JSLint does it (such as everyone following the Brackets Coding Conventions when writing extensions), then fair enough, I'll just get on with it!

Some of the rules I found quite odd, such as its hatred of "++", but then I've always found that one strange. According to the official site,

The ++ (increment) and -- (decrement) operators have been known to contribute to bad code by encouraging excessive trickiness

I presume that this refers to confusion between "i++" and "++i", but the longer forms of "i++" can be used instead: "i = i + 1" or "i += 1". Alternatively, mutation of a loop counter can be avoided entirely with the use of "forEach" -

[1, 2, 3].forEach(function (element, index) {
    // Work with "element" and "index" here - there is no loop counter to increment
});

This relies on ES5 support when running in the browser (though ancient browsers can have this worked around with polyfills), but since I had a Brackets extension as the intended target, "forEach" seemed like the best way forward. It also meant that I could avoid the warning

Avoid use of the continue statement. It tends to obscure the control flow of the function.

by returning early from the enumeration function rather than continuing the loop (for cases where I wanted to use "continue" within a loop).

I think it's hard to argue that returning early from an inline function obscures the control flow any more or less than a "continue" in a loop does, but using "forEach" consistently avoided two warnings and reduced mutation of local variables, which I think is a good thing since it reduces (even if only slightly) the mental overhead when reading code.
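To show the two alternatives side by side, here is a small example that sums only the even values in an array, first with a JSLint-friendly counting loop (using "i += 1" but still needing "continue") and then with "forEach" and an early return. The function names are purely illustrative.

```javascript
// Counting loop: "+= 1" keeps JSLint happy about "++", but "continue" still warns
function sumEvensWithLoop(values) {
    var total = 0;
    var i;
    for (i = 0; i < values.length; i += 1) {
        if (values[i] % 2 !== 0) {
            continue; // JSLint: "Avoid use of the continue statement"
        }
        total += values[i];
    }
    return total;
}

// forEach: no loop counter to mutate, and an early return skips the element
function sumEvensWithForEach(values) {
    var total = 0;
    values.forEach(function (value) {
        if (value % 2 !== 0) {
            return; // moves on to the next element, like "continue"
        }
        total += value;
    });
    return total;
}
```

Both return 6 for [1, 2, 3, 4]; the only difference is how the skip is expressed and whether there is a counter to keep track of.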

At this point, I had code that would take style content such as

div.w1, div.w2 {
    p {
        strong, em { font-weight: bold; }
    }
}

and parse it with

var result = CssParserJs.ExtendedLessParser.ParseIntoStructuredData(content);

into a structure

[{
    "FragmentCategorisation": 3,
    "Selectors": [ "div.w1", "div.w2" ],
    "ParentSelectors": [],
    "SourceLineIndex": 0,
    "ChildFragments": [{
        "FragmentCategorisation": 3,
        "Selectors": [ "p" ],
        "ParentSelectors": [ [ "div.w1", "div.w2" ] ],
        "SourceLineIndex": 1,
        "ChildFragments": [{
            "FragmentCategorisation": 3,
            "Selectors": [ "strong", "em" ],
            "ParentSelectors": [ [ "div.w1", "div.w2" ], [ "p" ] ],
            "ChildFragments": [{
                "FragmentCategorisation": 4,
                "Value": "font-weight",
                "SourceLineIndex": 2
            }, {
                "FragmentCategorisation": 5,
                "Property": {
                    "FragmentCategorisation": 4,
                    "Value": "font-weight",
                    "SourceLineIndex": 2
                },
                "Values": [ "bold" ],
                "SourceLineIndex": 2
            }],