A static type system is a wonderful message to the present and future - Supplementary

This is an extension of my post "A static type system is a wonderful message to the present and future". I initially rolled this all together into a single article but then decided to break it into two to make the first part easier to consume.

So, what else did I want to say? Rather than just saying "static typing is better", I want to express some more detailed "for" and "against" arguments. Spoiler alert: despite the negatives, I still believe that static typing is worth the effort.

FTW

I find that the more I take advantage of the type system, the more reliable my code becomes - not only in terms of how well it lasts over the years, but in how likely it is to work the first time that it compiles. Going back to some code that I wrote a few years ago, there are various parts of a particular project that deal with internationalisation - some parts want to know what language particular content is in, while other parts are more specific and want to know what language culture it's in; the difference between "English" (the language) and "English UK" / "en-GB" (the language culture). I wish now that, for that project, I'd created a type (in C#, a struct would have been the natural choice) to represent a LanguageKey and another for a LanguageCultureKey, as I encountered several places where it was confusing which was required - some parts of the code had method arguments named "language" that wanted a language key while others had arguments named "language" that wanted a language culture key. The two parts of the project were written by different people at different times and, in both cases, it seemed natural to them to presume that "language" could mean a language key (since nothing more specific was required) or could mean a language culture key (since they presumed that nothing less specific would ever be required). This is an example of a place where better argument naming would have helped, because it would have been easier to spot if a language culture key was being passed where a language key was required. However, it would have been better still if the compiler could spot the wrong key type being passed - a human might miss it if a naming convention is relied upon, but the compiler will never miss an invalid type.
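A minimal sketch of the kind of types I mean, assuming nothing more than a wrapped string for each. The names LanguageKey and LanguageCultureKey come from the paragraph above; the ContentLookup class and its methods are hypothetical, there only to show that each method now states precisely which kind of key it needs, so passing a LanguageCultureKey where a LanguageKey is expected fails to compile.

```csharp
using System;

// A language, such as "en" (English)
public readonly struct LanguageKey
{
    public LanguageKey(string value)
    {
        if (string.IsNullOrWhiteSpace(value))
            throw new ArgumentException("A language key value is required", nameof(value));
        Value = value;
    }

    public string Value { get; }

    public override string ToString() => Value;
}

// A language culture, such as "en-GB" (English UK)
public readonly struct LanguageCultureKey
{
    public LanguageCultureKey(string value)
    {
        if (string.IsNullOrWhiteSpace(value))
            throw new ArgumentException("A language culture key value is required", nameof(value));
        Value = value;
    }

    public string Value { get; }

    public override string ToString() => Value;
}

// Hypothetical consumers: the argument types, not just the argument names,
// now say which kind of key is required
public static class ContentLookup
{
    public static string DescribeLanguage(LanguageKey language) => "Language: " + language;

    // DescribeLanguage(new LanguageCultureKey("en-GB")) would not compile
    public static string DescribeLanguageCulture(LanguageCultureKey languageCulture) =>
        "Language culture: " + languageCulture;
}
```

One caveat of using structs is that default(LanguageKey) bypasses the constructor and leaves Value null, so a class may be the better choice where that matters.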

Another example that I've used in the past is that of React "props" validation. When creating React components (which are used to render DOM elements.. or OS components, if you're using React Native), you must provide specific information for the component; if it's a Label, for example, then you must provide a text string and maybe a class name string. If you're using JavaScript with React then you will probably be providing the props reference using simple object notation, so you will have to be careful to remember that the text string is named "text" and not "labelText". The React library includes support for a "propTypes" object to be defined for a component - this performs validation at runtime, ensuring that required properties have values and that they are of the correct type. If a strongly-typed language (such as C#) were used to create and consume React components, then this additional runtime validation would not be required, as the component's "props" would be declared as a class and all properties would have the appropriate types specified there. These would be validated at compile time, rather than having to wait until runtime. Returning to the "Sharp Knives" quote, this may be construed as being validation written for "other programmers" - as in, "I don't want other programmers to try to use my component incorrectly" - but, again, I'm very happy to be one of the "other programmers" in this case; it allows the type system to work as very-welcome documentation.

While we're talking about React and component props, the React library always treats the props reference for a component as being immutable. If the props data needs to change then the component needs to be re-rendered with a new props reference. If you are writing your application in JavaScript then you need to respect this convention. However, if you choose to write your React application in a strongly-typed language then you may have your props classes represented by immutable types. This enforces the convention through the type system - you (and anyone reviewing your code) don't have to keep a constant vigil against accidental mutations, because the compiler will tell you if one is attempted (by refusing to build and pointing out where the mistake was made).
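As an illustration in plain C#, without assuming any particular React binding, a props class for the Label example might look like this. LabelProps and WithText are hypothetical names. The property names are checked by the compiler, so a misspelled "labelText" is a build error rather than a silently-ignored value. Because there are no setters, the props cannot be changed after construction.

```csharp
using System;

// Hypothetical props for a Label component: both values are set once, in the
// constructor, and there are no setters, so any attempt to mutate them fails to compile
public sealed class LabelProps
{
    public LabelProps(string text, string className)
    {
        Text = text ?? throw new ArgumentNullException(nameof(text));
        ClassName = className ?? throw new ArgumentNullException(nameof(className));
    }

    public string Text { get; }

    public string ClassName { get; }

    // "Changing" the props means creating a new instance to re-render with
    public LabelProps WithText(string text) => new LabelProps(text, ClassName);
}
```

Writing `props.Text = "Goodbye";` against this class is rejected by the compiler (CS0200, the property is read only), which is exactly the mistake that would otherwise rely on convention and review to catch.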

The common thread, for me, in all of the reasons why static typing is a good thing is that it enforces things that I want (or that I require) to be enforced, while providing invaluable information and documentation through the types. This makes code easier to reason about and code that is easier to reason about is easier to maintain and extend.

What static typing can't solve

It's not a silver bullet. But, then, nothing is. Static typing is a valuable tool that should be used alongside automated tests in order to create a reliable product.

To take a simple example that will illustrate a variety of principles, the following is a LINQ call made in C# to take a set of EmployeeDetails instances and determine the average age (we'll assume that EmployeeDetails is a class with an integer Age property) -

var averageAge = employees.Average(employee => employee.Age);

If we were implementing the "Average" function ourselves, then we would need to populate the following -

public static int Average<T>(this IEnumerable<T> source, Func<T, int> selector)
{
}

Static typing gives us a lot of clues here. It ensures that anyone calling "Average" has to provide a set of values that may be enumerated and they have to provide a lambda that extracts an integer from each of those values. If the caller tried to provide a lambda that extracted a string (eg. the employee's name) from the values then it wouldn't compile. The type signature documents many of the requirements of the method.

However, the type system does not ensure that the implementation of "Average" is correct. It would be entirely possible to write an "Average" function that returned the highest value, rather than the mean value.

This is what unit tests are for. Unit tests ensure that the logic within a method is correct; for example, that 30 is returned from "Average" if a set of employees with ages 20, 30 and 40 is provided.
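A minimal sketch of both halves follows, assuming the int-returning signature above. EnumerableStats and AverageTests are hypothetical names, and the test is hand-rolled rather than using a framework such as xUnit or NUnit. The type system would be perfectly happy with an implementation that returned the maximum instead; only the test catches that.

```csharp
using System;
using System.Collections.Generic;

public static class EnumerableStats
{
    // Integer mean of the selected values (any remainder is truncated).
    // No argument validation yet: that is the gap the type system cannot fill.
    public static int Average<T>(this IEnumerable<T> source, Func<T, int> selector)
    {
        long total = 0;
        var count = 0;
        foreach (var item in source)
        {
            total += selector(item);
            count++;
        }
        return (int)(total / count);
    }
}

public static class AverageTests
{
    // The example from the text: ages 20, 30 and 40 should average to 30.
    // An implementation that returned the highest value (40) would fail here.
    public static void AgesTwentyThirtyFortyAverageToThirty()
    {
        var ages = new[] { 20, 30, 40 };
        var result = EnumerableStats.Average(ages, age => age);
        if (result != 30)
            throw new Exception($"Expected 30 but got {result}");
    }
}
```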

The type system ensures that the code is not called with inappropriate data. If you didn't have a static type system then it would still be possible to write more unit tests around the code that called "Average" to ensure that it was always dealing with appropriate data - but this is an entire class of tests that are not required if you leverage static analysis*.

Unfortunately, there are limitations to what may be expressed in the type system. In the "Average" example above, there is no way (in C#) to express that it's invalid to pass a null "source" or "selector" reference, a "source" that has zero elements (since there is no such thing as an average value if there are zero values) or a "source" where one or more of the items is null. Any of these cases of bad data will result in a runtime error.

However, I believe that the solution to this is not to run away screaming from static typing because it's not perfect - in fact, I think that the answer is more static analysis. Code Contracts is a way to add these additional requirements alongside the type system; to say that "source and selector may not be null" and "source may not be empty" and "source may not contain any null references". Again, this will be a way for someone consuming the code to have greater visibility of its requirements and for tooling to enforce them. I will be able to write stricter code to stop other people from making mistakes with it, and other people will be able to write stricter code to make it clearer to me how it should be used and to prevent me from making mistakes or trying to use it in ways that are not supported. I don't want the power to try to use code incorrectly.
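Without Code Contracts, those requirements can only be checked at runtime. Below is a sketch of what that looks like for the same hypothetical int-returning "Average" (CheckedStats is a made-up name). With the Code Contracts tools installed, the same conditions could instead be declared as preconditions, along the lines of `Contract.Requires(source != null)` and `Contract.Requires(Contract.ForAll(source, item => item != null))`. That would let the static checker flag offending call sites rather than leaving them to fail when run.

```csharp
using System;
using System.Collections.Generic;

public static class CheckedStats
{
    // The same integer mean, but with the requirements that the signature cannot
    // express checked explicitly; violations surface only when this code runs
    public static int Average<T>(this IEnumerable<T> source, Func<T, int> selector)
    {
        if (source == null)
            throw new ArgumentNullException(nameof(source));
        if (selector == null)
            throw new ArgumentNullException(nameof(selector));

        long total = 0;
        var count = 0;
        foreach (var item in source)
        {
            if (item == null)
                throw new ArgumentException("source may not contain any null references", nameof(source));
            total += selector(item);
            count++;
        }

        // There is no such thing as the average of zero values
        if (count == 0)
            throw new ArgumentException("source may not be empty", nameof(source));
        return (int)(total / count);
    }
}
```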

I think that there are two other obvious ways that static typing can't help and protect you..

Firstly, when dealing with an external system there may be additional rules that you cannot (and would not want to, for the sake of avoiding duplication) describe in your code. Perhaps you have a data store that you pass updates to in order to persist changes made by a user - say the user wants to change the name of an employee in the application, so an UpdateEmployeeName action must be sent to the data service. This action will have an integer "Key" property and a string "Name" property. This class structure ensures that data of the appropriate form is provided but it cannot ensure that the Key itself is valid - only the data store will know that. The type system is not an all-seeing, all-knowing magician, so it will allow some invalid states to be represented (such as an update action for an entity key that doesn't exist). But the more invalid states that cannot be represented (such as a key, which the data service requires to be an integer, being the string "abc"), the fewer error states there are to test against and the more reliable the code is (making it harder to write incorrect code makes the code more correct overall and hence more reliable).
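A sketch of that action as a simple immutable class; the constructor shape is my assumption. The types rule out a non-integer key at compile time, but a key for an employee that doesn't exist is still perfectly representable, and only the data store can reject that.

```csharp
using System;

// The UpdateEmployeeName action from the text, as an immutable class
public sealed class UpdateEmployeeName
{
    public UpdateEmployeeName(int key, string name)
    {
        Key = key;
        Name = name ?? throw new ArgumentNullException(nameof(name));
    }

    // Guaranteed to be an integer; new UpdateEmployeeName("abc", ...) will not compile.
    // Whether an employee with this key exists is something only the data store knows.
    public int Key { get; }

    public string Name { get; }
}
```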

Secondly, if the type system is not taken advantage of to the fullest extent then it can't help you to the fullest extent. I have worked on code in the past where a single class was used in many places to represent variations on the same data. Sometimes a "Hotel" instance would describe only the entity key, the name and the description. Sometimes the "Hotel" instance would contain detailed information about the rooms in the hotel; sometimes the "Rooms" property would be null. Sometimes it would have its "Address" value populated, other times it would be null. It would depend upon the type of request that the "Hotel" instance was returned for. This is a poor use of the type system - different response types should have been used, so that it was clear from the returned type what data would be present. The more often we're in a "sometimes this, sometimes that" situation, the less reliable the code will be, as it becomes easier to forget one of the "sometimes" cases (again, I'm talking from personal experience and not just worrying about how this may or may not affect "other programmers"). Unfortunately, not even the potential for a strong type system can make shitty code good.
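One way that the "Hotel" data could have been split is sketched below, with hypothetical type and property names. Each response type states what it contains, so there is no "Rooms" property to find null when rooms weren't requested. An address-bearing variant could follow the same pattern.

```csharp
using System;
using System.Collections.Generic;

// Returned when only the basic details were requested
public sealed class HotelSummary
{
    public HotelSummary(int key, string name, string description)
    {
        Key = key;
        Name = name ?? throw new ArgumentNullException(nameof(name));
        Description = description ?? throw new ArgumentNullException(nameof(description));
    }

    public int Key { get; }
    public string Name { get; }
    public string Description { get; }
}

public sealed class HotelRoom
{
    public HotelRoom(string name, int sleeps)
    {
        Name = name ?? throw new ArgumentNullException(nameof(name));
        Sleeps = sleeps;
    }

    public string Name { get; }
    public int Sleeps { get; }
}

// Returned when room details were requested: Rooms is always present (if possibly empty)
public sealed class HotelWithRooms
{
    public HotelWithRooms(HotelSummary summary, IEnumerable<HotelRoom> rooms)
    {
        Summary = summary ?? throw new ArgumentNullException(nameof(summary));
        Rooms = new List<HotelRoom>(rooms ?? throw new ArgumentNullException(nameof(rooms))).AsReadOnly();
    }

    public HotelSummary Summary { get; }
    public IReadOnlyList<HotelRoom> Rooms { get; }
}
```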

* (It's probably worth stating that a static type system is one way that tooling can automatically identify mistakes for you but it's not the only way - code contracts are a way to go beyond what C# can support "out of the box" but there are other approaches, such as what John Carmack has written about static analysis of C++ or how Facebook is analysing JavaScript without even requiring types to be explicitly declared)

Code Reviews

Another quote that stuck out for me in the "Sharp Knives" post was that

We enforce such good senses by convention, by nudges, and through education

This is very sensible advice. I think that one of the best ways for code quality to remain high is through developers working together - learning from each other and supporting each other. This is something that I've found code reviews to be very effective for. If all code is reviewed, then all code is guaranteed to have been read by at least two people; the author and the reviewer. If the code is perfect, then that's where the review ends - on a high note. If the code needs work then any mistakes or improvements can be highlighted and addressed. As the recipient of a review that identifies a mistake that I've made, I'm happy! Well.. I'm generally a bit annoyed with myself for making the mistake but I'm glad that a colleague has identified it rather than it getting to an end user.

As a code reviewer, I'm happiest with code that I think requires no changes, or with code that needs to be rejected only once. I've found that code that is rejected and then fixed up is much harder to re-review, and that bugs more often slip through the re-review process. It's similar to the way in which you can more easily become blind to bugs in code that you've just written than to bugs in someone else's code - you have a familiarity with the code that you are reviewing for a second time and someone has just told you that they have fixed it; I've found that there is something psychological about that which makes it just that little bit harder to pick up on any subsequent mistakes. So I would prefer to limit the number of times that reviews bounce back and forth.

I have found that a static type system encourages a stricter structure on the code and that conventions are clearer, not to mention the fact that the compiler can identify more issues - meaning that there should be fewer types of mistake that can get through to a review. There is, of course, a limit to what the type system can contribute on this front but any mechanical checks that a computer could perform leave the reviewer more time (and mental capacity) to provide deeper insight; to offer guidance to a more junior developer or to suggest implementation tweaks to a peer.

A "wonderful message"

It's a theme that has picked up more and more weight for me over the years, that the computer should be able to help me tell it what to do - I should be able to leverage its strengths in order to multiply mine. As a developer, there is a lot of creativity required but also a huge quantity of seemingly banal details. The strength of a good abstraction comes from being able to "hide away" details that don't matter, leaving you with larger and more useful shapes to deal with, allowing you to think closer to the big picture. The more details that may be automatically verified, the less you need to worry about them, freeing up more valuable mental space. Leaning on static analysis aids this; it allows the computer to do what it's good at and concentrate on the simple-and-boring rules, allowing you to become more effective. It's an incredibly powerful tool: the ability to actually prevent certain things from being done allows you to do more of what you should be doing.

It can also be an invaluable form of documentation for people using your code (including you, in six months, when you've forgotten the finer details). Good use of the type system allows for the requirements and the intent of code to be clearer. It's not just a way of communicating with the compiler, it's also a very helpful way to communicate with human consumers of your code.

On a personal note, this marks my 100th post on this blog. The first ("I love Immutable Data") was written about five years ago and was also (basically) about leveraging the type system - by defining immutable types and the benefits that they could have. I find it reassuring that, with all that I've seen since then (and thinking back over the time since I first started writing code.. over 25 years ago), this still feels like a good thing. In a time where it seems like everyone's crying about JavaScript fatigue (and the frequent off-the-cuff comments about React being "so hot right now"*), I'm glad that there are still plenty of principles that stand the test of time.

* (Since I'm feeling so brave and self-assured, I'm going to say that I think that React *will* still be important five years from now - maybe I'll look back in 2021 and see how this statement has fared!)

Posted at 21:34

A static type system is a wonderful message to the present and future

Last week, I read the article "My time with Rails is up" (by Piotr Solnica) which resulted in me reading some of DHH's latest posts and re-reading some of his older ones.

Some people write articles that I enjoy reading because they have similar ideas and feelings about development that I do, that they manage to express in a new or particularly articulate way that helps me clarify it in my own head or that helps me think about whether I really do still agree with the principle. Some people write articles that come from a completely different point of view and experience to me and these also can have a lot of benefit, in that they make me reconsider where I stand on things or inspire me to try something different to see how it feels. DHH is, almost without fail, interesting to read and I like his passion and conviction.. but he's definitely not in that first category of author. There was one thing in particular, though, that really stuck out for me in his post "Provide sharp knives" -

Ruby includes a lot of sharp knives in its drawer of features.. The most famous is monkey patching: The power to change existing classes and methods. .. it offered a different and radical perspective on the role of the programmer: That they could be trusted with sharp knives. .. That’s an incredibly aspirational idea, and one that runs counter to a lot of programmer’s intuition about other programmers.

Because it’s always about other programmers when the value of sharp knives is contested. I’ve yet to hear a single programmer put up their hand and say "I can’t trust myself with this power, please take it away from me!". It’s always "I think other programmers would abuse this"

The highlighted section of the quote is what I disagree with most - because I absolutely do want to be able to write code in a way that limits how I (as well as others) may use it.

And I strongly disagree with it because..

The harsh reality is that all code is created according to a particular set of limitations and compromises that are present at the time of writing. The more complex the task that the code must perform, the more likely it is that there will be important assumptions "baked into" the code that someone using the code would benefit from being aware of. A good type system can be an excellent way to communicate some of these assumptions. And, unlike documentation, convention or code review, a good type system can allow these assumptions to be enforced by the computer, rather than allowing a principle that should be treated as unbreakable to be ignored. Computers are excellent at verifying simple sets of rules, which allows them to help identify common mistakes (or misunderstandings).

At the very simplest level, specifying types for a method's arguments makes it much less likely that I'll refactor my code by swapping two of the arguments and then miss one of the call sites and not find out that something now fails until runtime (the example sounds contrived but, unfortunately, it is something that I've done from time to time). Another simple example is that having descriptive classes reminds me precisely what the minimum requirements are for a method without having to poke around inside the method - if there is an argument named "employeeSummaries" in a language without type annotations, I can presume that it's some sort of collection type.. but should each value in the collection include just the key and name of each employee or should it be key, name and some other information that the method requires such as, say, a list of reporting employees that the employee is responsible for managing? With a type system, if the argument is IEnumerable<EmployeeSummary> then I can see what information I have to provide by looking at the EmployeeSummary class.
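A sketch of that last point follows, with hypothetical members: the EmployeeSummary class spells out exactly what each item must provide. A caller can therefore see that reporting employees are needed without reading the method body.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public sealed class EmployeeSummary
{
    public EmployeeSummary(int key, string name, IEnumerable<int> reportingEmployeeKeys)
    {
        Key = key;
        Name = name ?? throw new ArgumentNullException(nameof(name));
        ReportingEmployeeKeys =
            (reportingEmployeeKeys ?? throw new ArgumentNullException(nameof(reportingEmployeeKeys)))
            .ToList()
            .AsReadOnly();
    }

    public int Key { get; }
    public string Name { get; }

    // The keys of the employees that this employee is responsible for managing
    public IReadOnlyList<int> ReportingEmployeeKeys { get; }
}

public static class Reporting
{
    // Hypothetical method: the signature alone says what each summary must include
    public static IEnumerable<string> GetManagerNames(IEnumerable<EmployeeSummary> employeeSummaries) =>
        employeeSummaries
            .Where(summary => summary.ReportingEmployeeKeys.Count > 0)
            .Select(summary => summary.Name);
}
```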

A more complex example might involve data that is shared across multiple threads, whether for parallel processing or just for caching. The simplest way to write this sort of code reliably is to prevent mutation of the data from occurring on multiple threads and one way to achieve that is for the data to be represented by immutable data types. If the multi-threaded code requires that the data passed in be immutable then it's hugely beneficial for the type system to be able to specify that immutable types be used, so that the internal code may be written in the simplest way - based on the requirement that the data not be mutable.
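The sketch below assumes System.Collections.Immutable, which ships with modern .NET, and uses hypothetical names. C# has no constraint that says "this argument must be immutable", so the guarantee comes from the parameter type being one that is known to be immutable. Given that, the parallel code can read the data freely without locks.

```csharp
using System;
using System.Collections.Immutable;
using System.Threading.Tasks;

// Immutable: getter-only properties set in the constructor, and an ImmutableArray
// that cannot be modified once created
public sealed class PriceList
{
    public PriceList(string currency, ImmutableArray<decimal> prices)
    {
        Currency = currency ?? throw new ArgumentNullException(nameof(currency));
        if (prices.IsDefault)
            throw new ArgumentException("prices must be initialised", nameof(prices));
        Prices = prices;
    }

    public string Currency { get; }
    public ImmutableArray<decimal> Prices { get; }
}

public static class PriceCalculator
{
    // Every worker reads the same PriceList instance; because it cannot change,
    // no locking is needed. Each worker writes only to its own slot in the results.
    public static decimal[] ApplyMultiplier(PriceList priceList, decimal multiplier)
    {
        var results = new decimal[priceList.Prices.Length];
        Parallel.For(0, priceList.Prices.Length, i =>
        {
            results[i] = priceList.Prices[i] * multiplier;
        });
        return results;
    }
}
```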

I want to reinforce here that this is not just about me trying to stop other people from messing up when they use my code, this is just as much about me. Being able to represent these sorts of key decisions in the type system means that I can actually be a little bit less obsessive with how much I worry about them, easing the mental burden. This, in turn, leaves me more mental space to concentrate on solving the real problem at hand. I won't be able to forget to pass data in an immutable form to methods that require it in an immutable form, because the compiler won't let me do so.

Isn't this what automated tests are for?

An obvious rebuttal is that these sorts of errors (particularly the mixing-up-the-method-arguments example) can (and should) be caught by unit tests.

In my opinion: no.

I believe that unit tests are required to test the logic in an application, and it is possible to write unit tests that show how methods work when given correct data and how they fail when given invalid data. But it's difficult (and arduous) to prove, using automated tests, that the guarantees a type system could enforce are not being broken anywhere in your code. The only-allow-immutable-data-types-to-be-passed-into-this-thread-safe-method example is a good one here, since multi-threaded code will often appear to work fine when executed within a single thread, meaning that errors will only surface when multiple threads are working with it simultaneously. Writing unit tests to try to detect race conditions is not fun. You could have 100% code coverage and still not pick up on all of the horrible things that can happen when multiple threads deal with mutable data. If the data passed around within those code paths is immutable, though (which may be enforced through the types passed around), then these potential races are prevented.

Good use of static typing means that an entire class of unit tests is not required.

The fact that static typing is not enough to confirm that your code is correct, and that unit tests should be written as well, does not mean that only unit tests should be used.

I've kept this post deliberately short because I would love for it to have some impact, and experience has taught me that it's much more difficult for that to be the case with a long-format post. I've expanded on this further at "A static type system is a wonderful message to the present and future - Supplementary". There's more about the benefits, more about the limitations, more examples of me saying "I don't want the power to try to do something that this code has been explicitly written not to have to deal with" and no more mentions of multi-threading, because static typing's benefits are not restricted to especially complicated problem domains; applications may benefit regardless of their complexity.

Posted at 21:33

Using Roslyn code fixes to make the "Friction-less immutable objects in Bridge" even easier

This is going to be a short post about a Roslyn (or "The .NET Compiler Platform", if you're from Microsoft) analyser and code fix that I've added to a library. I'm not going to try to take you through the steps required to create an analyser nor how the Roslyn object model describes the code that you've written in the IDE* but I want to talk about the analyser itself because it's going to be very useful if you're one of the few people using my ProductiveRage.Immutable library. Also, I feel like the inclusion of analysers with libraries is something that's going to become increasingly common (and I want to be able to have something to refer back to if I get the chance to say "told you!" in the future).

* (This is largely because I'm still struggling with it a bit myself; my current process is to start with the article "Use Roslyn to Write a Live Code Analyzer for Your API" and the "Analyzer with Code Fix (NuGet + VSIX)" Visual Studio template. I then tinker around a bit and try running what I've got so far, so that I can use the "Syntax Visualizer" in the Visual Studio instance that is being debugged. Then I tend to do a lot of Google searches when I feel like I'm getting close to something useful.. how do I tell if a FieldDeclarationSyntax is for a readonly field or not? Oh, good, someone else has already written some code doing something like what I want to do - I look at the "Modifiers" property on the FieldDeclarationSyntax instance).

As new .NET libraries get written, some of them will have guidelines and rules that can't easily be described through the type system. In the past, the only option for such rules would be to try to ensure that they were covered by the documentation (whether this be the project README and / or more in-depth online docs and / or the xml summary comment documentation for the types, methods, properties and fields that intellisense can bring to your attention in the IDE). The support that Visual Studio 2015 introduced for custom analysers* allows these rules to be communicated in a different manner.

* (I'm being English and stubborn, hence my use of "analysers" rather than "analyzers")

In short, they allow these library-specific guidelines and rules to be highlighted in the Visual Studio Error List, just like any error or warning raised by Visual Studio itself (even refusing to allow the project to be built, if an error-level message is recorded).

An excellent example that I've seen recently was encountered when I was writing some of my own analyser code. To do this, you can start with the "Analyzer with Code Fix (NuGet + VSIX)" template, which pulls in a range of NuGet packages and includes some template code of its own. You then need to write a class that is derived from DiagnosticAnalyzer. Your class will declare one or more DiagnosticDescriptor instances - each will be a particular rule that is checked. You then override an "Initialize" method, which allows your code to register actions that are called as the code is analysed and to report any rules that have been broken. You must also override a "SupportedDiagnostics" property and return the set of DiagnosticDescriptor instances (ie. rules) that your analyser will cover. If the code that the "Initialize" method hooks up tries to report a rule that "SupportedDiagnostics" did not declare, the rule will be ignored by the analysis engine. This would be a kind of (silent) runtime failure and, while it is documented, it's still a very easy mistake to make; you might create a new DiagnosticDescriptor instance and report it from your "Initialize" method but forget to add it to the "SupportedDiagnostics" set.. whoops. In the past, you might not have realised until runtime that you'd made a mistake and, as it's a silent failure, you might end up getting very frustrated and be stuck wondering what had gone wrong. But, mercifully (and I say this as I made this very mistake), there is an analyser in the "Microsoft.CodeAnalysis.CSharp" NuGet package that brings this error immediately to your attention with the message:
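A minimal sketch of that structure follows, assuming the Microsoft.CodeAnalysis.CSharp NuGet package is referenced. The rule itself (flagging fields that aren't readonly, echoing the "Modifiers" lookup mentioned earlier) and its "DEMO001" ID are hypothetical. The point to notice is that the descriptor passed to ReportDiagnostic is also returned from SupportedDiagnostics. Leaving it out of that set is the mistake that RS1005 catches.

```csharp
using System.Collections.Immutable;
using Microsoft.CodeAnalysis;
using Microsoft.CodeAnalysis.CSharp;
using Microsoft.CodeAnalysis.CSharp.Syntax;
using Microsoft.CodeAnalysis.Diagnostics;

[DiagnosticAnalyzer(LanguageNames.CSharp)]
public sealed class NonReadOnlyFieldAnalyzer : DiagnosticAnalyzer
{
    // Hypothetical rule, for illustration only
    public static readonly DiagnosticDescriptor Rule = new DiagnosticDescriptor(
        id: "DEMO001",
        title: "Field should be readonly",
        messageFormat: "Field '{0}' should be readonly",
        category: "Design",
        defaultSeverity: DiagnosticSeverity.Warning,
        isEnabledByDefault: true);

    // Every descriptor passed to ReportDiagnostic must also be listed here
    public override ImmutableArray<DiagnosticDescriptor> SupportedDiagnostics =>
        ImmutableArray.Create(Rule);

    public override void Initialize(AnalysisContext context)
    {
        context.ConfigureGeneratedCodeAnalysis(GeneratedCodeAnalysisFlags.None);
        context.EnableConcurrentExecution();

        // Called for every field declaration in the code being analysed
        context.RegisterSyntaxNodeAction(AnalyzeField, SyntaxKind.FieldDeclaration);
    }

    private static void AnalyzeField(SyntaxNodeAnalysisContext context)
    {
        var field = (FieldDeclarationSyntax)context.Node;
        if (field.Modifiers.Any(SyntaxKind.ReadOnlyKeyword) || field.Modifiers.Any(SyntaxKind.ConstKeyword))
            return;

        // A declaration such as "int a, b;" declares more than one field
        foreach (var variable in field.Declaration.Variables)
        {
            context.ReportDiagnostic(
                Diagnostic.Create(Rule, variable.GetLocation(), variable.Identifier.Text));
        }
    }
}
```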

RS1005 ReportDiagnostic invoked with an unsupported DiagnosticDescriptor

The entry in the Error List links straight to the code that called "context.ReportDiagnostic" with the unexpected rule. This is fantastic - instead of suffering a runtime failure, you are informed at compile time precisely what the problem is. Compile time is always better than runtime (for many reasons - it's more immediate, so you don't have to wait until the code is running, and it's more thorough; a runtime failure may only happen if a particular code path is followed, but static analysis such as this is like having every possible code path tested).

The analysers already in ProductiveRage.Immutable

The ProductiveRage uber-fans (who surely exist.. yes? ..no? :D) may be thinking "doesn't the ProductiveRage.Immutable library already have some analysers built into it?"

And they would be correct; for some time now, it has included a few analysers that try to prevent some simple mistakes. As a quick reminder, the premise of the library is that it will make creating immutable types in Bridge.NET easier.

Instead of writing something like this:

public sealed class EmployeeDetails
{
    public EmployeeDetails(PersonId id, NameDetails name)
    {
        if (id == null)
            throw new ArgumentNullException("id");
        if (name == null)
            throw new ArgumentNullException("name");
        Id = id;
        Name = name;
    }

    /// <summary>
    /// This will never be null
    /// </summary>
    public PersonId Id { get; }

    /// <summary>
    /// This will never be null
    /// </summary>
    public NameDetails Name { get; }

    public EmployeeDetails WithId(PersonId id)
    {
        // Only create a new instance if the value has actually changed
        return Id.Equals(id) ? this : new EmployeeDetails(id, Name);
    }

    public EmployeeDetails WithName(NameDetails name)
    {
        return Name.Equals(name) ? this : new EmployeeDetails(Id, name);
    }
}

.. you can express it just as:

public sealed class EmployeeDetails : IAmImmutable
{
    public EmployeeDetails(PersonId id, NameDetails name)
    {
        this.CtorSet(_ => _.Id, id);
        this.CtorSet(_ => _.Name, name);
    }

    public PersonId Id { get; private set; }

    public NameDetails Name { get; private set; }
}

The if-null-then-throw validation is encapsulated in the CtorSet call (since the library takes the view that no value should ever be null - it introduces an Optional struct so that you can identify properties that may be without a value). And it saves you from having to write "With" methods for the updates as IAmImmutable implementations may use the "With" extension method whenever you want to create a new instance with an altered property - eg.

var updatedEmployee = employee.With(_ => _.Name, newName);

The library can only work if certain conditions are met. For example, every property must have a getter and a setter - otherwise, the "CtorSet" extension method won't know how to actually set the value "under the hood" when populating the initial instance (nor would the "With" method know how to set the value on the new instance that it would create).

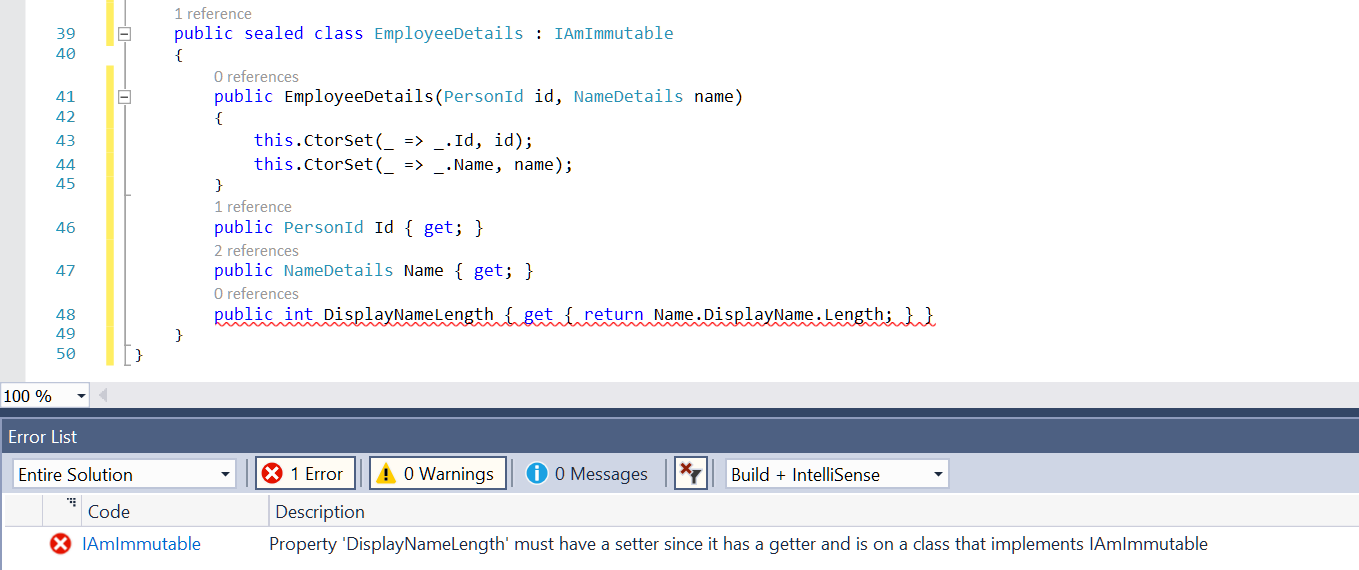

If you forgot this and wrote the following (note the "DisplayNameLength" property that is now effectively a computed value and there would be no way for us to directly set it via a "With" call) -

public sealed class EmployeeDetails : IAmImmutable
{
    public EmployeeDetails(PersonId id, NameDetails name)
    {
        this.CtorSet(_ => _.Id, id);
        this.CtorSet(_ => _.Name, name);
    }

    public PersonId Id { get; private set; }

    public NameDetails Name { get; private set; }

    public int DisplayNameLength { get { return Name.DisplayName.Length; } }
}

.. then you would see the following errors reported by Visual Studio (presuming you are using 2015 or later) -

.. which is one of the "common IAmImmutable mistakes" analysers identifying the problem for you.

Getting Visual Studio to write code for you, using code fixes

I've been writing more code with this library and I'm still, largely, happy with it. Making the move to assuming never-allow-null (which is baked into the "CtorSet" and "With" calls) means that the classes that I'm writing are a lot shorter and that type signatures are more descriptive. (I wrote about all this in my post at the end of last year "Friction-less immutable objects in Bridge (C# / JavaScript) applications" if you're curious for more details).

However.. I still don't really like typing out as much code for each class as I have to. Each class has to repeat the property names four times - once in the constructor, twice in the "CtorSet" call and a fourth time in the public property. Similarly, the type name has to be repeated twice - once in the constructor and once in the property.

This is better than the obvious alternative, which is to not bother with immutable types. I will gladly take the extra lines of code (and the effort required to write them) to get the additional confidence that a "stronger" type system offers - I wrote about this recently in my "Writing React with Bridge.NET - The Dan Way" posts; I think that it's really worthwhile to bake assumptions into the type system where possible. For example, the Props types of React components are assumed, by the React library, to be immutable - so having them defined as immutable types represents this requirement in the type system. If the Props types are mutable then it would be possible to write code that tries to change that data, and then bad things could happen (you're doing something that the library expects not to happen). If the Props types are immutable then it's not even possible to write this particular kind of bad-things-might-happen code, which is a positive thing.

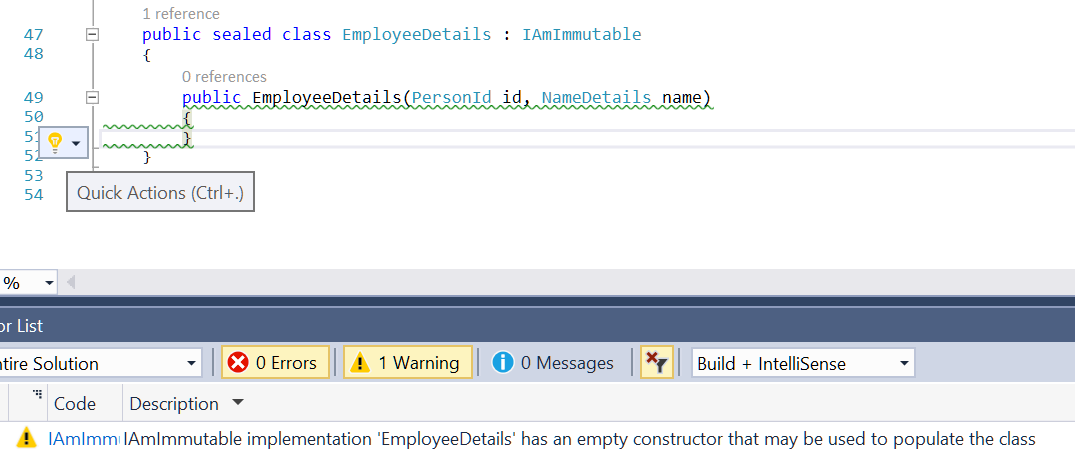

But still I get a niggling feeling that things could be better. And now they are! With Roslyn, you can not only identify particular patterns but you can also offer automatic fixes for them. So, if you were to start writing the EmployeeDetails class from scratch and got this far:

public sealed class EmployeeDetails : IAmImmutable
{
    public EmployeeDetails(PersonId id, NameDetails name)
    {
    }
}

.. then an analyser could identify that you were writing an IAmImmutable implementation and that you have an empty constructor - it could then offer to fix that for you by filling in the rest of the class.

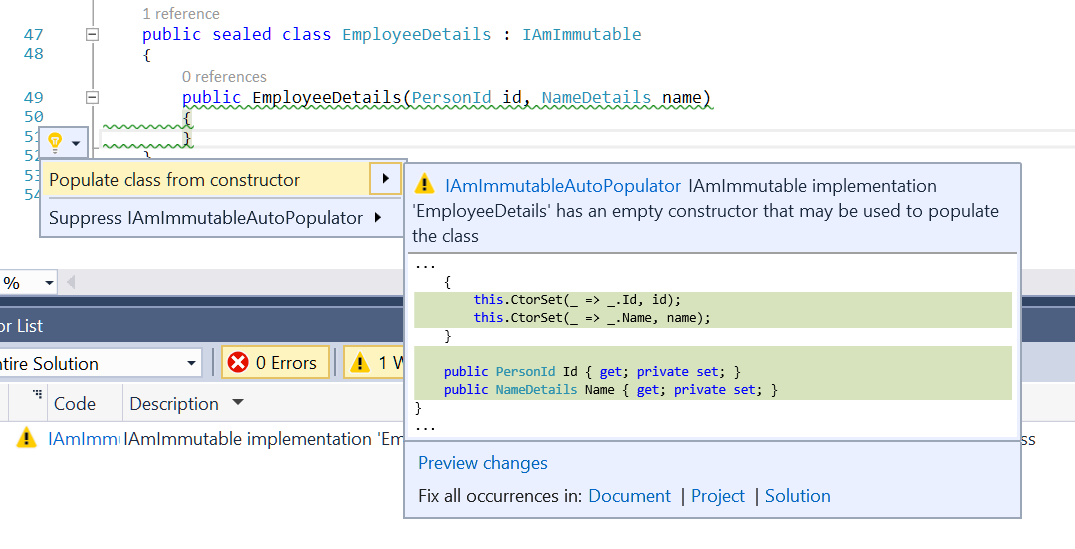

The latest version of the ProductiveRage.Immutable library (1.7.0) does just that. Not only will the empty constructor be identified with a warning, but a light bulb will also appear alongside the code. Clicking this (or pressing [Ctrl]-[.] while within the empty constructor, for fellow keyboard junkies) will present an option to "Populate class from constructor" -

Selecting the "Populate class from constructor" option -

.. will take the constructor arguments and generate the "CtorSet" calls and the public properties automatically. Now you can have all of the safety of the immutable type with no more typing effort than the mutable version!

// This is what you have to type for the immutable version,
// then the code fix will expand it for you
public sealed class EmployeeDetails : IAmImmutable
{
    public EmployeeDetails(PersonId id, NameDetails name)
    {
    }
}

// This is what you would have typed if you were feeling
// lazy and creating mutable types because you couldn't
// be bothered with the typing overhead of immutability
public sealed class EmployeeDetails
{
    public PersonId Id;
    public NameDetails Name;
}
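The transformation that "Populate class from constructor" performs can be illustrated with a very rough, string-based sketch. This is not how the library's code fix works (a real Roslyn code fix manipulates syntax trees and returns an updated document), the PopulateFromConstructorSketch class is a hypothetical name, and it assumes that each property name is simply the constructor argument name with its first letter upper-cased.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;

// Hypothetical, string-based illustration of "Populate class from constructor".
// It is NOT the ProductiveRage.Immutable code fix, which works on Roslyn syntax trees.
public static class PopulateFromConstructorSketch
{
    // Each constructor argument is described by its type name and its argument name
    public static string Populate(string className, IReadOnlyList<(string Type, string Name)> arguments)
    {
        var code = new StringBuilder();
        code.AppendLine($"public sealed class {className} : IAmImmutable");
        code.AppendLine("{");

        // The constructor keeps the arguments that the user already typed
        var argumentList = string.Join(", ", arguments.Select(a => $"{a.Type} {a.Name}"));
        code.AppendLine($"    public {className}({argumentList})");
        code.AppendLine("    {");

        // One CtorSet call per argument, pairing the property with the argument
        foreach (var a in arguments)
            code.AppendLine($"        this.CtorSet(_ => _.{ToPropertyName(a.Name)}, {a.Name});");
        code.AppendLine("    }");

        // One public property per argument, with a private setter for CtorSet to use
        foreach (var a in arguments)
            code.AppendLine($"    public {a.Type} {ToPropertyName(a.Name)} {{ get; private set; }}");

        code.AppendLine("}");
        return code.ToString();
    }

    // Assumed naming convention: camelCase argument "name" becomes PascalCase property "Name"
    // (argument names are assumed to be non-empty)
    private static string ToPropertyName(string argumentName) =>
        char.ToUpperInvariant(argumentName[0]) + argumentName.Substring(1);
}
```

Calling Populate("EmployeeDetails", new[] { ("PersonId", "id"), ("NameDetails", "name") }) produces class text whose constructor contains this.CtorSet(_ => _.Id, id); and this.CtorSet(_ => _.Name, name);, followed by the Id and Name properties, which is the shape of the class that otherwise has to be typed out by hand.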

To summarise

If you're already using the library, then all you need to do to start taking advantage of this code fix is update your NuGet reference* (presuming that you're using VS 2015 or later - analysers weren't supported in previous versions of Visual Studio).

* (Sometimes you have to restart Visual Studio after updating; you will know that this is the case if you get a warning in the Error List about Visual Studio not being able to load the ProductiveRage.Immutable analyser.)

If you're writing your own library that has guidelines or common gotchas that you have to describe in documentation somewhere (documentation that the users of your library may well not read unless they have a problem - at which point, if they're only having an investigative play around with it, they may even abandon the library) then I highly recommend that you consider using analysers to surface some of these assumptions and best practices. I'm aware that I've not offered much concrete advice on how to write these analysers; that's because I'm still very much a beginner at it. But that puts me in a good position to say that it really is fairly easy if you read a few articles about it (such as "Use Roslyn to Write a Live Code Analyzer for Your API") and then just get stuck in. With some judicious Googling, you'll be making progress in no time!

I guess that only time will tell whether library-specific analysers become as prevalent as I imagine. It's very possible that I'm biased because I'm such a believer in static analysis. Let's wait and see*!

* Unless YOU are a library writer that this might apply to - in which case, make it happen rather than just sitting back to see what MIGHT happen! :)

Posted at 22:33

About

Dan is a big geek who likes making stuff with computers! He can be quite outspoken so clearly needs a blog :)

In the last few minutes he seems to have taken to referring to himself in the third person. He's quite enjoying it.
