Cross Browser (Pseudo) Source Mapping with LESS

I have a component that is set up to load LESS stylesheets with what is essentially

    var styleSheetLoader = new CachingLoader(
      new MinifyingLoader(
        new DotLessCompilingLoader(
          new ImportFlatteningLoader(
            new FromDiskLoader()
          )
        )
      )
    );

This works great in terms of efficient delivery of content. The LESS styles are compiled into vanilla CSS and the ImportFlatteningLoader inserts referenced content in place of @import statements to minimise http requests, so long as the referenced files are all in the same folder. This same-folder restriction allows the CachingLoader to compare a cached entry's last-modified date against the most recent last-modified date of any file in the folder to see if the cached data should be expired. A time-based expiration of a few seconds is layered on top so that disk access is constrained during periods of high traffic.
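As a rough illustration of that expiry check, here is a minimal sketch. It is not the CachingLoader's actual code: the class name, method names and parameters are all hypothetical, and it assumes that "most recently modified" means the latest last-write time of any file directly inside the folder.

```csharp
using System;
using System.IO;
using System.Linq;

// Hypothetical helper, not part of the CSSMinifier project
public static class CacheExpiry
{
    // Returns true if the cached entry should be discarded. The folder is only
    // examined when at least minimumCheckInterval has passed since the last
    // check, which keeps disk access down when requests arrive in bursts.
    public static bool IsStale(
        DateTime entryLastModified,
        DateTime lastCheckedAt,
        DateTime now,
        TimeSpan minimumCheckInterval,
        Func<DateTime> getMostRecentWriteTime)
    {
        if (getMostRecentWriteTime == null)
            throw new ArgumentNullException("getMostRecentWriteTime");

        // Inside the time-based window: trust the cache without touching the disk
        if (now - lastCheckedAt < minimumCheckInterval)
            return false;

        // Otherwise the entry is stale if any file is newer than the cached data
        return getMostRecentWriteTime() > entryLastModified;
    }

    // Latest last-write time of any file in the folder (DateTime.MinValue if empty)
    public static DateTime GetMostRecentWriteTime(string folder)
    {
        return new DirectoryInfo(folder)
            .EnumerateFiles()
            .Select(file => file.LastWriteTimeUtc)
            .DefaultIfEmpty(DateTime.MinValue)
            .Max();
    }
}
```

Passing the folder lookup in as a delegate means the disk is only touched when the time window has elapsed, and it makes the logic easy to exercise without real files.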

Side note: Since dotLess can deal with imports it might seem a bit strange that I have ImportFlatteningLoader and FromDiskLoader references in there but that's largely because the component is based on what I wrote about last year; On-the-fly CSS Minification. I just shoved the dotLess processor into the chain.

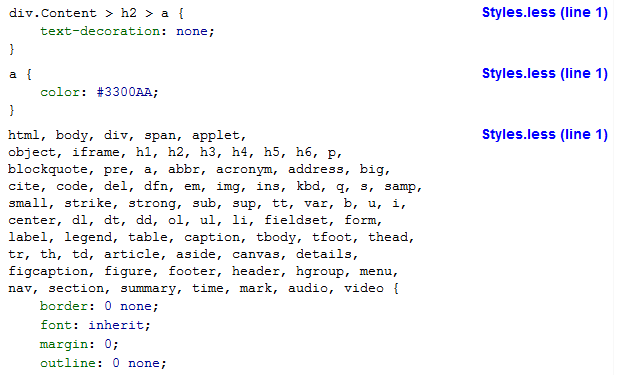

The problem is that when I'm editing styles and relying on web developer tools, everything appears to be in line 1 of "style.less"

The way that I've tried to address this is with a "SourceMappingMarkerInjectingLoader" and an "InjectedIdTidyingLoader". The former will push ids into selectors that indicate where the styles originated in the source - eg. "Content.less_123" (meaning line 123 in the file "Content.less") whilst the latter will tidy up any unnecessary styles that are the result of the LESS compilation.

If, for example, one of the imported stylesheets has the filename "test.less" and the content

    a.test
    {
      color: #00f;
      &:hover { color: #00a; }
    }

then the SourceMappingMarkerInjectingLoader will rewrite this as

    #test.less_1, a.test
    {
      color: #00f;
      #test.less_4, &:hover { color: #00a; }
    }

but when the LESS processing has been applied, this will become

    #test.less_1,a.test{color:#00f}
    #test.less_1 #test.less_4,#test.less_1:hover,a.test #test.less_4,a.test:hover{color:#00a}

On that second line, the fourth selector ("a.test:hover") is the only one that has any direct use; it is what the original source would have been compiled to. The first three selectors ("#test.less_1 #test.less_4", "#test.less_1:hover" and "a.test #test.less_4") are not of direct use but the selector element "#test.less_4" is useful since it indicates where in the source the original selector originated. So most of the content in those first three selectors can be discarded and replaced with just "#test.less_4".
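To make that tidying rule concrete, here is a minimal sketch of one way it could be expressed. This is an illustration only and not the InjectedIdTidyingLoader's implementation: the MarkerIdTidier name is hypothetical, the set of known marker ids is assumed to be available, and selectors are assumed to be separated into segments by single spaces (so child combinators and the like are ignored).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration of the tidying rule, not the real loader
public static class MarkerIdTidier
{
    public static List<string> Tidy(IEnumerable<string> selectors, ISet<string> markerIds)
    {
        var tidied = new List<string>();
        foreach (var selector in selectors)
        {
            var segments = selector.Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries);
            if (segments.Length == 0)
                continue;

            var last = segments[segments.Length - 1];
            string toKeep;
            if (markerIds.Contains(last))
            {
                // The selector ends in a marker id: keep only that marker
                toKeep = last;
            }
            else if (segments.Any(s => markerIds.Any(id => s.Contains(id))))
            {
                // A marker id appears elsewhere (eg. "#test.less_1:hover"): discard
                continue;
            }
            else
            {
                // No marker ids at all: this is a real selector, keep it as-is
                toKeep = string.Join(" ", segments);
            }

            // Several source selectors may collapse to the same marker
            if (!tidied.Contains(toKeep))
                tidied.Add(toKeep);
        }
        return tidied;
    }
}
```

Given the four selectors from the second compiled line above and the marker ids "#test.less_1" and "#test.less_4", this leaves "#test.less_4" and "a.test:hover".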

This is what the InjectedIdTidyingLoader is for. If the component is initialised with

    var styleSheetLoader = new CachingLoader(
      new MinifyingLoader(
        new InjectedIdTidyingLoader(
          new DotLessCompilingLoader(
            new ImportFlatteningLoader(
              new SourceMappingMarkerInjectingLoader(
                new FromDiskLoader()
              )
            )
          )
        )
      )
    );

then the web dev tools show something more like

Much more useful! Each style block still shows "Styles.less (line 1)" in big bold text, but each selector set includes ids to indicate the source filename and line number. While this content will bloat the uncompressed file size of the generated CSS, the filenames will likely be duplicated over and over, which lends itself very well to gzip'ing. (You can inspect the styles on this blog for an example of this process in action).

Performance problems

There is a problem, though. When the LESS source files start to get large and - more significantly - increasingly deeply-nested, the content that the DotLessCompilingLoader generates from the nested "Source Mapping Marker Ids" balloons. In one case, I had 116kb (which, granted, is a lot of rules) explode past 6mb. That's a huge amount of CSS to parse and to trim unnecessary selectors from.

Incidentally, the size of the delivered CSS (with "tidied" marker ids) was 119kb, an overhead of 17%. When gzip'd, the content without marker ids was 15.6kb while the content with marker ids was 18.9kb, an overhead of 20%.

Is this necessary at all?

As an aside, I expect that one day we will have well-integrated cross-browser Source Mapping support that will make these injected markers unnecessary but it still seems to be early days on this front. It seems like the compile-to-JavaScript languages are making a lot of use of the Source Mapping support that some browsers have (CoffeeScript, for example) but for LESS it seems a lot patchier (for the .net component, anyway; less.js has this). SASS seems better still (see Debugging SASS with Source Maps).

But these solutions still need browser support. The recent builds of Chrome and Firefox will be fine. But with IE, even with the just-released IE11, you're going to be out of luck.

So while these "Source Mapping Marker Ids" add overhead to the delivered content (and processing overhead, but I'm about to talk about improving that significantly) they do at least work across all browsers.

Addressing the performance problems

My last post was about my first stabs at optimising the id-tidying process (see Optimising the CSS Processor - ANTS and algorithms). I made some good strides but I wasn't happy with all of the approaches that I took and it still didn't perform as well as I would have liked with source files that contained many deeply-nested selectors.

If the problem I was trying to solve was that the LESS compiler was emitting too much content, maybe what I really needed to do was work with it rather than tidying up after it. The code is on GitHub so I figured I'd dive in and see what I could find!

dotLess Plugins

After downloading the code and building it locally, I found the "Plugins" folder under dotLess / src / dotLess.Core. Seeing this was an indication that the author had developed the project with a view to making it extensible without having to change its own source.

Searching for "dotLess plugins" will first lead you to people writing "function plugins" (a way to declare new functions that the parser will process as if they had been built into the core system) but digging deeper there are mentions of "visitor plugins". I found this article very useful: The world of LESS. The phrase "visitor plugins" refers to the Visitor Design Pattern. In terms of dotLess, it allows you to intercept every instantiation of a LESS structure and either allow it through or replace it with something of your own. You can do this either before or after "evaluation" (where LESS mixins and values are replaced with CSS styles and nested selectors are flattened).

What I wanted to do was write a visitor plugin that would take post-evaluation content and rewrite Ruleset instances whose selector sets needed tidying.

A post-evaluation Ruleset is essentially a set of selectors (such as "#test.less_1 #test.less_4, #test.less_1:hover, a.test #test.less_4, a.test:hover") and a set of rules (such as "color: #00a;").

So I want to grab these Ruleset instances and replace them with instances whose selector sets have been tidied where necessary. So "#test.less_1 #test.less_4, #test.less_1:hover, a.test #test.less_4, a.test:hover" will become "#test.less_4, a.test:hover".

Digging further through the code, it turns out that there are some types that inherit from Ruleset that shouldn't be messed with, such as the top-level "Root" type. So the plugin will need to target the Ruleset type specifically, not just any instance of a type that inherits from it.
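The difference between an exact type match and an "is a" check is the crux here. The following sketch uses stand-in classes (DemoRuleset and DemoRoot are hypothetical and only mirror the inheritance relationship, they are not dotLess types) to show why comparing GetType() is the safer test in this situation.

```csharp
using System;

// Stand-ins for illustration only; the real types live in dotLess
public class DemoRuleset { }
public class DemoRoot : DemoRuleset { }

public static class TypeChecks
{
    // True only when the node's runtime type is exactly DemoRuleset
    public static bool IsExactlyRuleset(object node)
    {
        return node != null && node.GetType() == typeof(DemoRuleset);
    }

    // True for DemoRuleset and for anything derived from it
    public static bool IsRulesetOrDerived(object node)
    {
        return node is DemoRuleset;
    }
}
```

With these, a DemoRoot instance passes IsRulesetOrDerived but not IsExactlyRuleset, which is the behaviour wanted when derived types must be left untouched.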

So what I came up with is

    private class SelectorRewriterVisitorPlugin : VisitorPlugin
    {
      private readonly InsertedMarkerRetriever _markerIdRetriever;
      public SelectorRewriterVisitorPlugin(InsertedMarkerRetriever markerIdRetriever)
      {
        if (markerIdRetriever == null)
          throw new ArgumentNullException("markerIdRetriever");

        _markerIdRetriever = markerIdRetriever;
      }

      public override VisitorPluginType AppliesTo
      {
        get { return VisitorPluginType.AfterEvaluation; }
      }

      public override Node Execute(Node node, out bool visitDeeper)
      {
        visitDeeper = true;

        // Match the exact type only; types derived from Ruleset (such as Root) must be left alone
        if (node.GetType() == typeof(Ruleset))
        {
          var ruleset = (Ruleset)node;
          return new MarkerIdTidyingRuleset(ruleset.Selectors, ruleset.Rules, _markerIdRetriever)
          {
            Location = ruleset.Location
          };
        }
        return node;
      }
    }

    /// <summary>
    /// This should never return null, nor a set containing any null or blank entries - all markers
    /// should be of the format "#id.class"
    /// </summary>
    public delegate IEnumerable<string> InsertedMarkerRetriever();

The MarkerIdTidyingRuleset is a class that inherits from Ruleset and rewrites its own selectors to remove the ones it doesn't need. The code isn't particularly complex or innovative, but it's too long to include here. It is in the CSSMinifier project, though, so if you want to see it then you can find it on Bitbucket here (it's a nested class of the DotLessCssCssLoader so it's in that linked file somewhere!).

The VisitorPlugin class, that the SelectorRewriterVisitorPlugin inherits, is in the dotLess source and makes writing visitor plugins easy.

The only part that isn't as easy is registering the plugin. There isn't a collection that you can add an IPlugin implementation directly to, but LessEngine instances have a "Plugins" set whose elements are of type IPluginConfigurator - these are classes that know how to instantiate particular plugins.

So I had to write:

    private class SelectorRewriterVisitorPluginConfigurator : IPluginConfigurator
    {
      private readonly InsertedMarkerRetriever _markerIdRetriever;
      public SelectorRewriterVisitorPluginConfigurator(InsertedMarkerRetriever markerIdRetriever)
      {
        if (markerIdRetriever == null)
          throw new ArgumentNullException("markerIdRetriever");

        _markerIdRetriever = markerIdRetriever;
      }

      public IPlugin CreatePlugin() { return new SelectorRewriterVisitorPlugin(_markerIdRetriever); }
      public IEnumerable<IPluginParameter> GetParameters() { return new IPluginParameter[0]; }
      public void SetParameterValues(IEnumerable<IPluginParameter> parameters) { }
      public string Name { get { return "SelectorRewriterVisitorPluginConfigurator"; } }
      public string Description { get { return Name; } }
      public Type Configurates { get { return typeof(SelectorRewriterVisitorPlugin); } }
    }

and then instantiate a LessEngine with

    var engine = new LessEngine();
    engine.Plugins = new[] {
      new SelectorRewriterVisitorPluginConfigurator(_markerIdRetriever)
    };

WINNING!

Since I started writing this article, a big project at work has used this component and the final size of the combined output is over 200kb. I said earlier that 116kb of minified content is a lot of styles, well this clearly tops that! In fairness, it's a large and complex site and it's chock full of responsive goodness to make it render beautifully on mobiles tiny and large, tablets and desktop.

Before the id-tidying was handled with a dotLess visitor plugin (where there was an entirely separate processing step to tidy up the unnecessary marker-id selectors) the build process was taking almost 20 seconds. Not acceptable. With the visitor plugin approach, this is now just over 3 seconds. Much more palatable. And, like I found in the last post, it's another example of how changing the algorithm can sometimes bring dramatic improvements compared to trying to micro-optimise the current approach. Or, perhaps, a reminder that the quickest way to do something might be not to do it!

Show me the code..

If you want to get at the code, it's all on Bitbucket: The CSSMinifier. There's a "CSSMinifierDemo" (ASP.net MVC) project in there that has a CSSController class that import-flattens, injects pseudo-source-mapping marker ids, compiles LESS down to vanilla CSS, minifies, caches to memory and disk (invalidating when source files change), deals with 304s and with supporting gzip'ing responses.

The primary project that utilises this at work doesn't use ASP.net but I do use MVC for this blog and it also seemed like a natural way to construct a full demonstration.

Mild disclaimer

I've become a bit of a dotLess advocate over the last year or so and dipping into the code here coincided with a colleague at work complaining about dotLess not working with Bootstrap 3. Finding the code approachable (and not being happy with this bad-mouthing I was hearing of my beloved dotLess!), I've fixed most of the problems and had pull requests merged into the master repository. And now a NuGet package is available (see dotless v1.4 released). This has been my first foray into contributing to an open source project and, especially considering some of the stories I've heard about people being ignored or rejected, it's been an absolute joy. I might have to look for more projects that I care about that I can help!

Posted at 22:41

Optimising the CSS Processor (ANTS and algorithms)

The Production Team at work have started using my best-practices-rules-validating LESS CSS Processor (which utilises dotLess). This is excellent news for me! And frankly, I think it's excellent news for them! :D

This all ties in with a post I wrote at the start of the year (Non-cascading CSS: A revolution!), a set of rules to write maintainable and genuinely reusable stylesheets. I gave a presentation on it at work and one of the Lead Devs on Production recently built the first site using the processor (I've used it for this blog but the styling here is hardly the most complex). Now that it's been proven, it's being used on another build. And a high-profile one, at that. Like I said, exciting times!

However, during the site-build process there are periods where the routine goes tweak styles, refresh, tweak styles, refresh, tweak styles, lather, rinse, repeat. And when the full set of stylesheets starts getting large, the time to regenerate the final output (and perform all of the other processing) was getting greater and making this process painful.

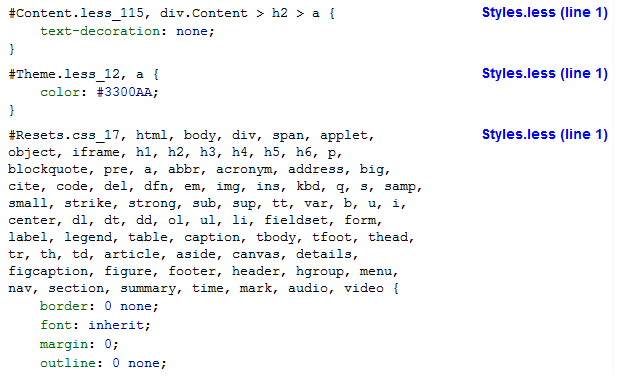

The good news is that now that the project is being used in work, I can use some of work's resources and toys to look into this. We allocated a little time this sprint to profile the component and see if there are any obvious bottlenecks that can be removed. The golden rule is you don't optimise until you have profiled. Since we use ANTS Performance Profiler at work, this seemed like an excellent place to start.

March of the ANTS

The ANTS Performance Profiler is a great bit of kit for this sort of thing. I prepared an executable to test that would compile stylesheets from the new site in its current state. This executable had a dependency on the processor code - the idea is I choose something to optimise, rebuild the processor, rebuild the test executable and re-run the profiler.

Things take much longer to run in the profiler than they would otherwise (which is to be expected, the profiler will be tracking all sorts of metrics as the code runs) but performance improvements should mean that the execution time decreases on subsequent runs. And, correspondingly, the component will run more quickly when not in the profiler, which is the whole point of the exercise!

So I point the profiler at the executable and the results look like the following..

(I shrank the screenshot to get it to fit on the page properly, if you can't read it then don't worry, I'm about to explain the highlights).

What we're looking at is a drilling-down of the most expensive methods. I've changed the Display Options to show "All Methods" (the default is "Only methods with source") so I can investigate as deep as I want to*. This view shows method calls as a hierarchy, so Tester.Program.Main is at the top and that calls other methods, the most expensive of which is the NonCascadingCSSRulesEnforcer.CSSMinifierIntegration.RulesEnforcingCssFileLoader.Load call. While the call to Main accounted for 99.254% of the total work, the call to this Load method accounted for 98.667% (looking at the "Time With Children (%)" column). This means that Main's own work and any other method calls it made account for only 0.587% (99.254% less 98.667%) of the total work done.

* (If the PDB files from a build are included in the executable folder then the profiler can use these to do line-by-line analysis in a window underneath the method call display shown above. To do this, "line-level and method-level timings" have to be selected when starting the profiling and the source code files will have to be available to the profiler - it will prompt for the location when you double-click on a method. If you don't have the PDB files or the source code then methods can be decompiled, but line level details will not be available).

Method calls are ordered to show the most expensive first, the "HOT" method. This is why that Load method appears first inside the results for Main, it accounts for most of the work that Main does.

So the first easy thing to do is to look for methods whose "HOT" method's "Time With Children" is much lower than their own. This could be an indication that the method itself is quite expensive. It could also mean that it calls several methods which are all quite expensive, but this can still often be a good way to find easy wins.

What jumps out at me straight away is the CharacterProcessorResult..ctor (the class constructor) and its children account for 24.005% of the total run time, with Enum.IsDefined (and its children) accounting for 19.776%. The Enum.IsDefined call is to ensure that a valid enum value is passed to the constructor. Enum.IsDefined uses reflection if you look far enough down, I believe. A few calls to this method should be no big deal, but an instance of this class is used for every stylesheet character that is parsed - we can see that the constructor is called 5,772,750 times (the "Hit Count"). So replacing Enum.IsDefined with if statements for all of the possible enum options should speed things up considerably. Incidentally, 5,772,750 seems like a lot of character parse attempts, it's certainly a lot more content than exists in the source stylesheets, I'll address this further down..
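A minimal sketch of that kind of replacement follows. The enum here is a stand-in: only the four member names that appear elsewhere in this post are listed and the real CharacterCategorisationOptions will have more, so treat the member list (and the CategorisationValidator name) as assumptions. A switch is used rather than a chain of if statements, which amounts to the same explicit comparisons.

```csharp
using System;

// Hypothetical subset of the real enum, for illustration only
public enum CharacterCategorisationOptions
{
    CloseBrace,
    OpenBrace,
    SemiColon,
    SelectorOrStyleProperty
}

public static class CategorisationValidator
{
    // Explicit comparisons against the known values, avoiding Enum.IsDefined
    // on a code path that runs once per parsed character
    public static bool IsKnownValue(CharacterCategorisationOptions value)
    {
        switch (value)
        {
            case CharacterCategorisationOptions.CloseBrace:
            case CharacterCategorisationOptions.OpenBrace:
            case CharacterCategorisationOptions.SemiColon:
            case CharacterCategorisationOptions.SelectorOrStyleProperty:
                return true;
            default:
                return false;
        }
    }
}
```

The trade-off is that the switch has to be kept in step with the enum by hand whenever a member is added.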

Looking at the screenshot above, there are two other "jumps" in "Time With Children" - one going from ProcessedCharacterGrouped+

More easy optimisations

After making the change outlined above (removing the Enum.IsDefined call from the CharacterProcessorResult constructor), the profiler is re-run to see if I can find any more small-change / big-payoff alterations to make. I did this a few times and identified (and addressed) the following -

The StringNavigator.CurrentCharacter property was being marked as taking a lot of time. This was surprising to me since it doesn't do much, it only returns a character with a particular index from the content string that is being examined. However, the hit count was enormous as this could be called multiple times for each character being examined - so if there were nearly 6m character results, there were many more StringNavigator.CurrentCharacter requests. I changed the string to a character array internally thinking that access to it might be quicker but it didn't make a lot of difference. What did make a difference was to extract the CurrentCharacter value in the StringNavigator's constructor and return that value directly from the property, reducing the number of character array accesses required. What made it even better was to find any method that requested the CurrentCharacter multiple times and change them to request it once, store it in a local variable and use that for subsequent requests. Although each property access is very cheap individually, significantly reducing the total number of property requests resulted in faster-running code. This is the sort of thing that would have felt like a crazy premature optimisation if I didn't have the profiler highlighting the cost!

The InjectedIdTidyingTextFileLoader's TidySelectorContent method spent a lot of its time in a LINQ "Any" call. The same enumerable data was being looped through many times - changing it to a HashSet (and using the HashSet's Contains method) made this much more efficient. (The HashSet uses the same technique as the Dictionary's key lookup which, as I've written about before in The .Net Dictionary is FAST!, has impressive performance).

The CategorisedCharacterString's constructor also had an Enum.IsDefined call. While this class is instantiated much less often than the CharacterProcessorResult, it was still in the hundreds of thousands range, so it was worth changing too.

The StringNavigator had a method "TryToGetCharacterString" which would be used to determine whether the current selector was a media query (does the current position in the content start with "@media") or whether a colon character was part of a property declaration or part of a pseudo class in a selector (does the current position in the content start with ":hover", ":link", etc..) - but in each case, we didn't really want the next n characters, we just wanted to know whether they were "@media", ":hover" or whatever. So replacing "TryToGetCharacterString" with a "DoesCurrentContentMatch" method meant that less work would be done in the cases where no match was found (this method would exit "early" as soon as it encountered a character that didn't match what it was looking for).

Finally, the ProcessedCharactersGrouper has an array of "CharacterTypesToNotGroup". This class groups adjacent characters that have the same CharacterCategorisationOptions into strings - so if there are characters 'a', '.', 't', 'e', 's', 't' which are all of type "SelectorOrStyleProperty" then these can be grouped into a string "a.test" (with type "SelectorOrStyleProperty"). However, multiple adjacent "}" characters are not combined since they represent the terminations of different style blocks and are not related to each other. So "CloseBrace" is one of the "CharacterTypesToNotGroup" entries. There were only three entries in this array (CloseBrace, OpenBrace and SemiColon). When deciding whether to group characters of the same categorisation together, replacing the LINQ "Contains" method call with three if statements for the particular values improved the performance. I believe that having a named array of values made the code more "self documenting" (it is effectively a label that describes why these three values are being treated differently) but in this case the performance is more important.
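Of the tweaks listed above, the "DoesCurrentContentMatch" early exit is probably the easiest to picture in code. What follows is a minimal sketch of the idea rather than the StringNavigator's real method: the static ContentMatcher class and its parameters are hypothetical, and the content is assumed to be a plain string with an explicit position.

```csharp
using System;

// Hypothetical standalone version of the early-exit comparison
public static class ContentMatcher
{
    // Returns true if "content" contains "value" starting at "position". No
    // substring is allocated and the loop stops at the first mismatch.
    public static bool DoesCurrentContentMatch(string content, int position, string value)
    {
        if (content == null)
            throw new ArgumentNullException("content");
        if (value == null)
            throw new ArgumentNullException("value");
        if (position < 0 || position > content.Length)
            throw new ArgumentOutOfRangeException("position");

        // Not enough characters left to match at all
        if (value.Length > content.Length - position)
            return false;

        for (var index = 0; index < value.Length; index++)
        {
            if (content[position + index] != value[index])
                return false; // early exit on the first difference
        }
        return true;
    }
}
```

For the common case where the current position is not the start of "@media" or ":hover", the loop gives up after a single character comparison.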

The end result of all of these tweaks (all of which were easy to find with ANTS and easy to implement in the code) was a speed improvement of about 3.7 times (measuring the time to process the test content over several runs). Not too shabby!

Algorithms

I still wasn't too happy with the performance yet, it was still taking longer to generate the final rules-validated stylesheet content than I wanted.

Before starting the profiling, I had had a quick think about whether I was doing anything too stupid, like repeating work where I didn't need to. The basic process is that

- Each .less file is loaded (any @imports will be "flattened" later, the referenced content is loaded at this point, though, so that it can be put in the place of the @import later on)

- Each file gets fed through the file-level rules validators

- Source Mapping Marker Ids are inserted

- The "@import flattening" occurs to result in a single combined content string

- This content is fed through the dotLess processor (this translates LESS content into vanilla CSS and minifies the output)

- This is fed through the combined-content-level rules validators

- Any "scope-restricting html tags" are removed

- Source Mapping Marker Ids are tidied

When each file gets fed through the file-level rules validators, the source content is parsed once and the parsed content passed through each validator. So if there are multiple validators that need to be applied to the same content, the content is only parsed once. So there's nothing obvious here.

What is interesting is the "Source Mapping Marker Ids". Since the processor always returns minified content and there is no support for CSS Source Mapping across all browsers (it looks like Chrome 28+ is adding support, see Developing With Sass and Chrome DevTools) I had my processor try to approximate the functionality. Say you have a file "test.css" with the content

    html
    {
      a.test
      {
        color: #00f;
        &:hover { color: #00a; }
      }
    }

the processor rewrites this as

    #test.css_1, html
    {
      #test.css_3, a.test
      {
        color: #00f;
        #test.css_6, &:hover { color: #00a; }
      }
    }

which will eventually result (after all of the steps outlined above have been applied) in

    #test.css_3,a.test{color:#00f}
    #test.css_6,a.test:hover{color:#00a}

This means that when you examine the style in a browser's developer tools, each style can be traced back to where the selector was specified in the source content. A poor man's Source Mapping, if you like.

The problem is that the LESS compiler will actually translate that source-with-marker-ids into

    #test.css_1 #test.css_3,
    #test.css_1 a.test,
    html #test.css_3,
    html a.test { color: #00f; }

    #test.css_1 #test.css_3 #test.css_6,
    #test.css_1 #test.css_3:hover,
    #test.css_1 a.test #test.css_6,
    #test.css_1 a.test:hover,
    html #test.css_3 #test.css_6,
    html #test.css_3:hover,
    html a.test #test.css_6,
    html a.test:hover { color: #00a; }

That's a lot of overhead! There are many selectors here that aren't required in the final content (such as "html #test.css_3", that is neither specific enough to be helpful in the developer tools nor a real style that applies to actual elements). This is what the "Source Mapping Marker Ids are tidied" step deals with.

And this explains why there were nearly 6 million characters being parsed in the stylesheets I've been testing! The real content is getting bloated by all of these Source Mapping Marker Ids. And every level of selector nesting makes it significantly worse.
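To see why each level of nesting hurts so much: when every level carries a marker id alongside its real selector, each level doubles the number of combined selectors, so a rule nested n levels deep ends up with 2^n of them. The sketch below mimics that expansion for simple cases. It is not how dotLess works internally; the NestedSelectorExpander name is hypothetical and only the space-joined and "&"-prefixed forms used in the example above are handled.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration of how nested selector lists multiply
public static class NestedSelectorExpander
{
    // Each entry in "levels" is the comma-separated selector list of one
    // nesting level, outermost first
    public static List<string> Expand(IEnumerable<string[]> levels)
    {
        var current = new List<string> { "" };
        foreach (var level in levels)
        {
            var next = new List<string>();
            foreach (var parent in current)
            {
                foreach (var child in level)
                {
                    if (child.StartsWith("&", StringComparison.Ordinal))
                        next.Add(parent + child.Substring(1)); // "&:hover" attaches to the parent
                    else if (parent == "")
                        next.Add(child);
                    else
                        next.Add(parent + " " + child);
                }
            }
            current = next;
        }
        return current;
    }
}
```

Feeding in the three levels from the example ("#test.css_1, html", then "#test.css_3, a.test", then "#test.css_6, &:hover") produces the same eight selectors shown above for the hover rule, of which only "html a.test:hover" is a real style.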

(To convince myself that I was thinking along the right lines, I ran some timed tests with variations of the processor; disabling the rules validation, disabling the source mapping marker id injection, disabling the marker id tidying.. Whether or not the rules validation was enabled made very little difference. Disabling the marker id injection and tidying made an enormous difference. Disabling just the tidying accounted for most of that difference but if marker ids were inserted and not tidied then the content was huge and full of unhelpful selectors).

Addressing the problems: Limiting Nesting

Since nesting selectors makes things much worse, any way to limit the nesting of selectors could be a significant improvement. But this would largely involve pushing back the "blame" onto users of the processor, something I want to avoid. There is one obvious way, though. One of the rules is that all stylesheets (other than Resets and Themes sheets) must be wrapped in a "scope-restricting html tag". This means that any LESS mixins or values that are defined within a given file only exist within the scope of the current file, keeping everything self-contained (and so enabling an entire file to be shared between projects, if that file contains the styling for a particular common component, for example). Any values or mixins that should be shared across files should be declared in the Themes sheet. This "html" selector would result in styles that are functionally equivalent (eg. "html a.test:hover" is the same as "a.test:hover" as far as the browser is concerned) but the processor actually has a step to remove these from the final content entirely (so only "a.test:hover" is present in the final content instead of "html a.test:hover").

So if these "html" wrappers will never contribute to the final content, having marker ids for them is a waste of time. And since they should be present in nearly every file, not rewriting them with marker ids should significantly reduce the size of the final content.

Result: The test content is fully processed about 1.8x as fast (times averaged over multiple runs).

Addressing the problems: Shorter Marker Ids

Things are really improving now, but they can be better. There are no more easy ways to restrict the nesting that I can see, but if the marker ids themselves were shorter then the selectors that result from their combination would be shorter, meaning that less content would have to be parsed.

However, the marker ids are the length they are for a reason; they need to include the filename and the line number of the source code. The only way that they could be reduced would be if the shortened value was temporary - the short ids could be used until the id tidying has been performed and then a replacement step could be applied to replace the short ids with the full length ids.

To do this, I changed the marker id generator to generate the "real marker id" and stash it away and to instead return a "short marker id" based on the number of unique marker ids already generated. This short id was a base 63* representation of the number, with a "1" prefix. The reason for the "1" is that before HTML5, it was not valid for an id to begin with a number so I'm hoping that we won't have any pages that have real ids that these "short ids" would accidentally target - otherwise the replacement that swaps out the short ids for real ids on the stylesheets might mess up styles targeting real elements!

* (Base 63 means that each digit of the number may be represented by any character from the range A-Z, a-z, 0-9 or by an underscore. This ensures that valid ids are generated and that no characters are pushed through the LESS compiler that would confuse it).
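A minimal sketch of a short id generator along these lines is shown below. The ordering of the 63 characters is an assumption (upper case first, so that the first two ids come out as "1A" and "1B"), and the ShortMarkerIds class is hypothetical rather than lifted from the processor.

```csharp
using System;
using System.Text;

// Hypothetical short marker id generator, for illustration only
public static class ShortMarkerIds
{
    // 26 + 26 + 10 + 1 = 63 characters; the ordering is an assumption
    private const string Alphabet =
        "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789_";

    // "index" is the number of unique marker ids generated before this one
    public static string ToShortId(int index)
    {
        if (index < 0)
            throw new ArgumentOutOfRangeException("index");

        var digits = new StringBuilder();
        do
        {
            // Prepend the least significant base 63 digit and move on
            digits.Insert(0, Alphabet[index % Alphabet.Length]);
            index /= Alphabet.Length;
        } while (index > 0);

        // The "1" prefix keeps the ids away from pre-HTML5-valid element ids
        return "1" + digits;
    }
}
```

The generator would also need to remember which real marker id each short id stands for (a dictionary keyed on the short id would do) so that the final replacement step can swap them back.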

The earlier example

    html
    {
      a.test
      {
        color: #00f;
        &:hover { color: #00a; }
      }
    }

now gets rewritten as

    html
    {
      #1A, a.test
      {
        color: #00f;
        #1B, &:hover { color: #00a; }
      }
    }

which is transformed into

    html #1A,
    html a.test {
      color: #00f;
    }

    html #1A #1B,
    html #1A:hover,
    html a.test #1B,
    html a.test:hover {
      color: #00a;
    }

This is a lot better (combining the no-markers on the "html" wrapper and the shorter ids). There's still duplication present (which will get worse as styles are more deeply nested) but the size of the content is growing much less with the duplication.

Result: The test content is fully processed about 1.2x faster after the above optimisation, including the time to replace the shortened ids with the real marker ids.

Conclusion

With the ANTS-identified optimisations and the two changes to the algorithm that processes the content, a total speed-up of about 7.9x has been achieved (the gains of roughly 3.7x, 1.8x and 1.2x compound multiplicatively). This is almost an order of magnitude for not much effort!

In real-world terms, the site style content that I was using as the basis of this test can be fully rebuilt from source in just under 2 seconds, rather than the almost 15 seconds it was taking before. When in the tweak / refresh / tweak / refresh cycle, this makes a huge difference.

And it was interesting to see the "don't optimise before profiling" advice in action once more, along with "avoid premature optimisation". The places to optimise were not where I would have expected (well, certainly not in some of the cases) and the last two changes were not the micro-optimisations that profilers lead you directly to at all; if you blindly follow the profiler then you miss out on the "big picture" changes that the profiler is unaware of.

Posted at 20:37

Non-cascading CSS: A revolution!

("Barely-cascading CSS: A revolution!", "Barely-cascading CSS: A revolution??")

This post was inspired by what I read at www.lispcast.com/cascading-separation-abstraction who in turn took some inspiration from 37signals.com/svn/posts/3003-css-taking-control-of-the-cascade.

Part of what really clicked for me was this

The value of a CSS property is determined by these factors:

- The order of CSS imports

- The number of classes mentioned in the CSS rule

- The order of rules in a particular file

- Any inherits settings

- The tag name of the element

- The class of the element

- The id of the element

- All of the element's ancestors in the HTML tree

- The default styles for the browser

combined with

By using nested LESS rules and child selectors, we can avoid much of the pain of cascading rules. Combining with everything else, we define our styles as mixins (and mixins of mixins)..

and then the guidelines

- Only bare (classless + non-nested) selectors may occur in the reset.

- No bare selectors may occur in the LESS rules.

- No selector may be repeated in the LESS rules.

along with

The box model sucks.. And what happens when I set the width to 100%? What if a padding is cascaded in? Oops.

I propose to boycott the following properties: ..

I must admit that when I first read the whole article I thought it was quite interesting but it didn't fully grab me immediately. But it lingered in the back of my mind for a day or two and the more I thought about it, the more it took hold and I started to think this could offer a potentially huge step forward for styling. Now, I wish I'd put it all together!

What I really think it could possibly offer as the holy grail is the ability to re-use entire chunks of styling across sites. At work this is something that has been posited many times in the past but it's never seemed realistic because the styles are so intermingled between layout specific to one site and the various elements of a control. So even in cases where the basic control and its elements are similar between sites, it's seemed almost implausible to consistently swap style chunks back and forth and to expect them to render correctly, no matter how careful and thoughtful you are with constructing the styles. But I realise now that this is really due almost entirely to the complex cascading rules and their many knock-on effects!

So I'm going to present my interpretation of the guidelines. Now, these aren't fully road-tested yet; I intend to put them to use in a non-trivial project as soon as I get the chance, so this is just my initial plan-of-attack..

My interpretation

I'm going to stick with LESS as the CSS processor since I've used it in the past.

1. A standard (html5-element-supporting) reset sheet is compulsory, only bare selectors may be specified in it

2. A single "common" or "theme" sheet will be included with a minimum of default styles, only bare selectors may be specified in it

3. No bare selectors may occur in the non-reset-or-theme rules (a bare selector may occur within a nested selector so long as child selectors are strictly used)

4. Stylesheets are broken out into separate files, each with a well-defined purpose

5. All files other than the reset and theme sheets should be wrapped in a body "scope"

6. No selector may be repeated in the rules

7. All measurements are described in pixels

8. Margins, where specified, must always be fully-defined

9. Border and Padding may not be combined with Width

(Above) This is what I'm trying to avoid!

A standard (html5-element-supporting) reset sheet is compulsory

This is really to take any browser variation out of the equation. You can start by thinking it's something along the lines of "* { margin: 0; padding: 0; }" and then think some more about it and then just go for Eric Meyer's html5-supporting reset. That's my plan at least! :)

A single "common" or "theme" sheet will be included with a minimum of default styles, only bare selectors may be specified in it

I was almost in two minds about this because it feels like it is inserting a layer of cascading back in, but I think there should be one place to declare the background colour and margin for the body, to set the default font colour, to set "font-weight: bold" on strong tags and to set shared variables and mixins.

No bare selectors may occur in the non-reset-or-theme rules (a bare selector may occur within a nested selector so long as child selectors are strictly used)

Bare selectors (eg. "div" rather than "div.AboutMe", or even "div.AboutMe div") are too generic and will almost certainly end up polluting another element's style cascade, which we're trying to avoid.

The reason for the exception around child selectors is that this may be used in places where classes don't exist on all of the elements to be targeted. Or if the bullet point type for a particular list is to be altered, we wouldn't expect to have to rely upon classes - eg.

<div class="ListContainer">

<ul>

<li>Item</li>

<li>Another Item</li>

</ul>

</div>

may be styled with

div.ListContainer

{

> ul > li { list-style-type: square; }

}

This would not affect the second level of items. So if the markup was

<div class="ListContainer">

<ul>

<li>

Item

<ul>

<li>Sub Item</li>

</ul>

</li>

<li>Another Item</li>

</ul>

</div>

then "Sub Item" will not get the "square" bullet point from the above styles. And this is progress! This is what we want, for styles to have to be explicit and not possibly get picked up from layer after layer of possible style matches. If we didn't use child selectors and we didn't want the nested list to have square markers then we'd have to add another style to undo the square setting.

Or, more to the point (no pun intended!), if we want to specify a point style for the nested list then adding this style will not be overriding the point style from the outer list, it will be specifying it for the first time (on top of the reset style only), thus limiting the number of places where the style may have come from.

In order for the inner list to have circle markers we need to extend the style block to

div.ListContainer

{

> ul > li

{

list-style-type: square;

> ul > li

{

list-style-type: circle;

}

}

}

Stylesheets are broken out into separate files, each with a well-defined purpose

This is for organisational purposes and I imagine that it's a fairly common practice to an extent, but I think it's worth taking a step further in terms of granularity and having each "chunk" or control in its own file. Since pre-processing is going to be applied to combine all of the files together, why not have loads of them if it makes styles easier to organise and find!

I've just had a quick look at Amazon to pick out examples. I would go so far as to have one file NavTopBar.less for the navigation strip across the top, distinct from the NavShopByDepartment.less file. Then a LayoutHomePage file would arrange these elements, along with all of the other elements on that page.

The more segregated styles are, the more chance (I hope!) that these file-sized chunks could be shared between projects.

One trick I've been toying with at work is to inject "source ids" into the generated css in the pre-processing step so that when the style content is all combined, LESS-compiled and minified, it's possible to determine where the style originally came from.

For example, I might end up with style in the final output like

#AboutMe.css_6,div.ListContainer>ul>li{list-style-type:square}

where the "#AboutMe.css_6" id relates not to an element but line 6 of the "AboutMe.css" file. It's a sort of poor man's version of Source Mapping which I don't believe is currently available for CSS (which is a pity.. there's a Mozilla feature request for it, though: CSSSourceMap).
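As a rough illustration of the marker format described above, the following is a minimal sketch in C#. The class and method names (SourceIdMarkers, BuildMarker, InjectMarker) are hypothetical and are not taken from any actual processing code; the sketch only shows how a filename and line number could be combined into a marker id and prepended to a selector.

```csharp
using System;

public static class SourceIdMarkers
{
    // Hypothetical helper: builds a marker id such as "#AboutMe.css_6" from a
    // source filename and a 1-based line number
    public static string BuildMarker(string filename, int lineNumber)
    {
        if (string.IsNullOrWhiteSpace(filename))
            throw new ArgumentException("Null/blank filename specified");
        if (lineNumber < 1)
            throw new ArgumentOutOfRangeException("lineNumber");

        return "#" + filename.Trim() + "_" + lineNumber;
    }

    // Hypothetical helper: prepends the marker to a selector so that the marker
    // appears as an additional (never-matching) selector in the compiled output
    public static string InjectMarker(string selector, string filename, int lineNumber)
    {
        if (string.IsNullOrWhiteSpace(selector))
            throw new ArgumentException("Null/blank selector specified");

        return BuildMarker(filename, lineNumber) + "," + selector.Trim();
    }
}
```

Calling InjectMarker("div.ListContainer>ul>li", "AboutMe.css", 6) returns the selector string shown in the example output above.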

I haven't decided yet whether this should be some sort of debug option or whether the overhead of the additional bytes in the final payload is worth the benefit for debugging. There's an argument that if the styles are broken into files and all have tightly targeted selectors then it should be relatively easy to find the source file and line, but sometimes this doesn't feel like the case when you go back to a project six months after you last looked at it!

Another example of a file with a "well-defined purpose" might be to apply a particular grid layout to a particular template, say "CheckoutPageLayout". This would arrange the various elements, but each of the elements within the page would have their own files.

All files other than the reset and theme sheets should be wrapped in a body scope

This is inspired by the Immediately Invoked Function Expression pattern used in Javascript to forcibly contain the scope of variables and functions within. Since we can do the same thing with LESS values and mixins, I thought it made sense to keep them segregated; this way, if the same name is used for values or mixins in different files, they won't trample on each other's toes.

The idea is simply to nest all statements within a file inside a "body { .. }" declaration - ie.

body

{

// All the content of the file goes here..

}

This will mean that the resulting styles will all be preceded with the "body" tag which adds some bloat to the final content, but I think it's worth it to enable this clean scoping of values and mixins. Any values and mixins that should be shared across files should be defined in the "common" / "theme" sheet.

Just as a quick reminder, the following LESS

@c: red;

.e1 { color: @c; }

body

{

@c: blue;

.e2 { color: @c; }

}

.e3 { color: @c; }

will generate this CSS

.e1 { color: red; }

body .e2 { color: blue; }

.e3 { color: red; }

The @c value inside the body scope applies within that scope and overrides the @c value outside of the scope while inside the scope. Once back out of the scope (after the "body { .. }" content), @c still has the value "red".

It's up for debate whether this definitely turns out to be a good idea. Interestingly, so far I've not come across it anywhere else, but it really seems like it would increase the potential for sharing files (that are tightly scoped to particular controls, or other unit of layout / presentation) between projects.

Update (4th June 2013): I've since updated this rule to specify the use of the html tag rather than body as I've written a processor that will strip out the additional tags injected (see Extending the CSS Minifier) and stripping out body tags would mean that selectors could not be written that target elements in a page where a particular class is specified on the body tag.

No selector may be repeated in the rules

In order to try to ensure that no element gets styles specified in more places than it should (that means it should be set no more frequently than in the resets, in the "common" / "theme" sheet and then at most by one other specific selector), class names should not be repeated in the rules.

With segregation of styles in various files, it should be obvious which single file any given style belongs in. To avoid duplicating a class name, LESS nesting should be taken advantage of, so the earlier example of

div.ListContainer

{

border: 1px solid black;

> ul > li { list-style-type: square; }

}

is used instead of

div.ListContainer { border: 1px solid black; }

div.ListContainer > ul > li { list-style-type: square; }

and where previously class names might have been repeated in a CSS stylesheet because similar properties were being set for multiple elements, this should now be done with mixins - eg. instead of

div.Outer, div.Inner

{

float: left;

width: 100%;

}

div.Inner { border: 1px solid black; }

we can use

.FullWidthFloat ()

{

float: left;

width: 100%;

}

div.Outer

{

.FullWidthFloat;

}

div.Inner

{

.FullWidthFloat;

border: 1px solid black;

}

This is clearly straight from lispcast's post, it's a direct copy-and-paste of their guideline 2!

We have to accept that this still can't prevent a given element from having styles set in multiple places, because it's feasible that multiple selectors could point to the same element (if an element has multiple classes then there could easily be multiple selectors that all target it) but this is definitely a good way to move towards the minimum number of rules affecting any one thing.

All measurements are described in pixels

I've been all round the houses in the last few years with measurement units. Back before browsers all handled content-resizing with zoom functionality (before Chrome was even released by Google to shake up the web world) I did at least one full site where every single measurement was in em's - not just the font sizes, but the layout measurements, the borders, the image dimensions, everything! This meant that when the font was sized up or down by the browser, the current zooming effect we all have now was delivered. In all browsers. Aside from having to do lots of divisions to work out image dimensions (and remembering what the equivalent of 1px was for the borders!), this wasn't all that difficult most of the time.. but where it would become awkward was if a containing element had a font-size which would affect the effective size of an em such that the calculations from pixel to em changed. In other words, cascading styles bit me again!

In more common cases, I've combined pixels and ems - using ems in most places but pixels for particular layout arrangements and images.

But with the current state of things, with the browsers handling zooming of the content regardless of the units specified, I'm seriously suggesting using pixels exclusively. For one thing, it will make width calculations, where required, much much simpler; there can't be any confusion when trying to reason about distances in mixed units!

I'm going to try pixels-only and see how it goes!

One pattern that I've seen used is to combine "float: left;" and "width: 100%;" when an element has children that are floated, but the element must expand to fully contain all of those children. In the majority of cases I see it used in site builds where I work, the elements that have been floated could have had "display: inline-block" applied instead to achieve the same layout - the use of floats is left over from having to fully support IE6 which wouldn't respect "inline-block" (it was less than a year ago that we managed to finally shake off those IE6 chains! Now it's a graceful-degradation-only option, as I think it should be).

So there's a chance that some allowances will have to be made for 100% widths, but I'm going to start off optimistic and hope that it's not the case until I'm proven wrong (either by myself or someone else trying these rules!).

Update (22nd January 2013): Although I'm currently still aiming for all-pixel measurements for elements, after reading this article The EMs have it: Proportional Media Queries FTW! I think I might get sold on the idea of using em's in media queries.. it seems like it would be an ideal way to work with browsers that have been zoomed in sufficiently that they'd look better moving to the next layout break point. I need to do some testing with mobile or tablet devices, but it sounds intriguing!

Margins, where specified, must always be fully-defined

This is straight from the lispcast post; it just means that if you specify a margin property that you should use the "margin" property and not "margin-left", "margin-top", etc.. The logic being that if you specify only "margin-left", for example, then the other margin sizes are inherited from the previous declaration that was applied (even if that's only the reset files). The point is that the style is split over multiple declarations and this is what we're trying to avoid.

So instead of

margin-left: 4px;

specify

margin: 0 0 0 4px;

If you require a 4px margin top, bottom, left and right then

margin: 4px;

is fine, the point is to ensure that every dimension is specified at once (which the above will do), specifying the dimensions individually within a single margin value is only required if different sides need different margin sizes!

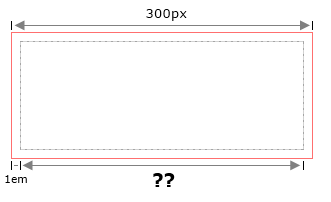

Border and Padding may not be combined with Width

This is also heavily inspired by the lispcast post, it's intended to avoid box model confusion. When I first read it, I had a blast-from-the-past shiver go through me from the dark days where we had to deal with different box models between IE and other browsers but this hasn't been an issue with the proper doc types since IE...6?? So what really concerns us is if we have

<div class="outer">

<div class="inner">

</div>

</div>

and we have the styles

.outer { width: 300px; margin: 0; border: 1px solid black; padding: 0; }

.inner { width: 300px; margin: 0; border: 1px solid black; padding: 10px; }

then we could be forgiven for wanting both elements to be rendered with the same width, but we'll find the "inner" element to appear wider than the "outer" since the width within the border is the content width (as specified by the css style) and then the padding width on top of that.

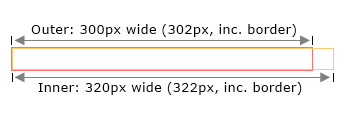

Another way to illustrate this would be with the styles

.outer { width: 300px; margin: 0; border: 1px solid black; padding: 0; }

.inner { width: 100%; margin: 0; border: 1px solid black; padding: 10px; }

Again, the "inner" element juts out of the "outer" since the padding is applied around the 100% width (in this example, the 100% equates to the 300px content width of the "outer" element) which seems counter-intuitive.
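To put numbers on the two examples above, here is a small sketch of the arithmetic in C#. It assumes the default "content-box" sizing (where the css width describes only the content area) and that padding and border are the same on the left and right; the BoxModel class and GetRenderedWidth method are hypothetical names used purely for illustration.

```csharp
public static class BoxModel
{
    // Rendered width under the default "content-box" sizing: the css width is the
    // content width, with padding and border added on both the left and the right
    public static int GetRenderedWidth(int contentWidth, int padding, int border)
    {
        return contentWidth + (2 * padding) + (2 * border);
    }
}
```

For the "outer" element, GetRenderedWidth(300, 0, 1) gives 302px, while for the "inner" element GetRenderedWidth(300, 10, 1) gives 322px. In the "width: 100%" case the "inner" content width still resolves to 300px, so it is rendered 322px wide inside a 300px content area and overflows by 22px.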

So what if we don't use padding at all?

In an ideal world - in terms of the application of styling rules - where an element requires both an explicit width and a padding, this could be achieved with two elements; where the outer element has the width assigned and the inner element has the padding. But, depending upon how we're building content, we mightn't have the ability to insert extra elements. There's also a fair argument that we'd be adding elements solely for presentation rather than semantic purpose. So, say we have something like

<div class="AboutMe">

<h3>About</h3>

<p>Content</p>

</div>

instead of specifying

div.AboutMe { width: 200px; padding: 8px; }

maybe we could consider

div.AboutMe { width: 200px; }

div.AboutMe > h3 { margin: 8px 8px 0 8px; }

div.AboutMe > p { margin: 0 8px 8px 8px; }

This would achieve a similar effect but there is a certain complexity overhead. I've used child selectors to indicate the approach that might be used if there were more complicated content; if one of the child elements was a div then we would want to be certain that this pseudo-padding margin only applied to the child div and not one of its descendent divs (this ties in with rule 3's "a bare selector may occur within a nested selector so long as child selectors are strictly used" proviso).

And the border issue hasn't been addressed; setting a border can extend the total rendered width beyond the width specified by css as well! (Also indicated in the example image above).

So I'm tentatively suggesting just not having any styles apply to any element that specifies width and padding and/or border.

This is one of the rules that I feel least confident about and will be considering retracting when I manage to get these into use in a few projects. If the benefit isn't sufficient to outweigh the cost of thinking around it, then it may have to be put out to pasture.

Linting

As I've said, I'm yet to fully use all of these rules in anger on a real, sizable project. But it's something I intend to do very soon, largely to see how well they do or don't all work together.

Something I'm contemplating looking into is adding a pre-processing "lint" step that will try to confirm that all of these rules have been followed, inspired by Douglas Crockford's JSLint (and I've only just found out that "lint" is a generic term, I didn't know until now where it came from! See "Lint" on Wikipedia).

It could be configured such that if a rule was identified to have not been followed, then an error would be returned in place of any style content - that would ensure that I didn't ignore it!

I'm not sure yet how easy it will or won't be to check for all of these rules, but I'm definitely interested in finding out!
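To give a feel for what one such check might look like, here is a minimal sketch in C# that looks for violations of the "Margins, where specified, must always be fully-defined" rule. It is only an assumption about how a lint step could start out, not an actual implementation: the MarginLint class and FindPartialMargins method are hypothetical names, the check is line-based, it only looks at the first occurrence of each property on a line and it makes no attempt to ignore comments.

```csharp
using System;
using System.Collections.Generic;

public static class MarginLint
{
    private static readonly string[] PartialMarginProperties =
        { "margin-top", "margin-right", "margin-bottom", "margin-left" };

    // Returns the 1-based numbers of the lines that declare a partial margin property
    public static IEnumerable<int> FindPartialMargins(string content)
    {
        if (content == null)
            throw new ArgumentNullException("content");

        var lines = content.Replace("\r\n", "\n").Split('\n');
        var lineNumbers = new List<int>();
        for (var index = 0; index < lines.Length; index++)
        {
            var line = lines[index].ToLowerInvariant();
            foreach (var property in PartialMarginProperties)
            {
                var position = line.IndexOf(property, StringComparison.Ordinal);
                if (position == -1)
                    continue;

                // Skip matches that are the tail of a longer name (eg. "x-margin-left")
                if (position > 0)
                {
                    var previous = line[position - 1];
                    if (char.IsLetterOrDigit(previous) || (previous == '-'))
                        continue;
                }

                // Only report it if a colon follows the name, indicating a declaration
                var remainder = line.Substring(position + property.Length).TrimStart();
                if ((remainder.Length > 0) && (remainder[0] == ':'))
                {
                    lineNumbers.Add(index + 1);
                    break;
                }
            }
        }
        return lineNumbers;
    }
}
```

A fuller lint step would presumably work from properly parsed content rather than raw lines, but even something this simple could be wired in so that any reported line numbers cause an error to be returned in place of the style content.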

Hopefully I'll post back with a follow-up at some point, possibly with any interesting pre-processing code to go along with it. I think the only way to determine the validity of these rules is with the eating of a whole lot of my own dog food :)

Update (4th June 2013): I've written a couple of related posts - see the Non-cascading CSS: The follow-up and Extending the CSS Minifier. The first has a couple of amendments I've made to these rules after putting them into practice and the second has information about how my CSS Minifier project now has support for injecting into the compiled output the "source ids" mentioned in this post, along with a way to implement rule 5 (html-tag-scoping). I also intend to write a few posts about the mechanisms used to implement these processing steps starting with Parsing CSS.

Posted at 23:04

The Full Text Indexer Post Round-up

This is a compilation of links to articles outlining some of the details of the Full Text Indexer project I put together, just so I could point a link to everything all in one place (like from the BitBucket ReadMe!)

I wrote about the basic building blocks of the Index Generator, went off on a few tangents about how using different key types could allow for searches over data with multi-lingual content (or support Product data that has different descriptions for different web sites, for example) and then came back round to illustrate how I've used the code for this blog's search functionality.

- The Full Text Indexer

- The Full Text Indexer - Adding and Subtracting

- The Full Text Indexer - Going International!

- The Full Text Indexer - Token Breaker and String Normaliser variations (+AutoComplete)

- The Full Text Indexer - Automating Index Generation

- The Full Text Indexer - Structured Queries

- The Full Text Indexer: Source Locations

- Search Term Highlighting with Source Locations

- The Full Text Indexer goes Client-side!

Along the journey, I got to learn a few new things, take advantage of others' research and have fun trying to improve the performance of some of the bottlenecks in the index generation process.

- The .Net Dictionary is FAST!

- An English-language Plurality-handling String Normaliser

- Optimising the Plurality-Handling Normaliser

- A Plurality-Handling Normaliser Correction

- Compiled LINQ Expressions don't serialise :(

- JavaScript Compression (Putting my JSON Search Indexes on a diet)

I also had a chance to revisit the basic immutable list structure that I used from the get-go in this project and improve its performance characteristics as well (again, taking a lot of inspiration from cleverer people who've tackled the same problems before me! :)

The code can be found in the Full Text Indexer BitBucket Repository. I've still got a few ideas I'm contemplating toying with - but I've also got other projects I want to investigate! So we'll just have to see what happens with this next..

Update (5th March 2013): I just can't seem to let this lie! :) I've added another post The Full Text Indexer - Automating Index Generation which demonstrates some new code that will examine your source data type and generate an index for you, all on its own! Easy! (Added to the list above).

Update (14th March 2013): And another! This time about support for structured querying, a way to combine terms with AND, OR, NOT operators. See The Full Text Indexer - Structured Queries. (Added to the list above).

Update (28th March 2013): Documenting an extension to the index data that allows for more performant consecutive term matching: The Full Text Indexer: Source Locations. Followed by a way to utilise this information for Search Term Highlighting with Source Locations. (Added to the list above).

Update (25th July 2013): Inspired by the "The 10 Megabyte Manifesto" and NeoCities, I've developed a way to consume search index data with JavaScript to enable a copy of this blog to be hosted where the searching is done entirely client-side. Read about it at The Full Text Indexer goes client-side! and see it in action live at productiverage.neocities.org! (Added to the list above).

Update (30th July 2013): A follow-up to the "The Full Text Indexer goes client-side" describing how the search index data can be compressed to take up less space on the host: JavaScript Compression (Putting my JSON Search Indexes on a diet). (Added to the list above).

Posted at 18:06

Optimising the Plurality-Handling Normaliser

In the last post I took a first stab at an English-language Plurality-handling String Normaliser to work with a Full Text Indexer research project I'm playing with. I've been using it in conjunction with a normaliser that strips out punctuation from words, replaces accented characters with latin versions (eg. é replaced with e) and lower-cases the content. This originally used a few regular expressions along with some character replacement but I wasn't delighted with the performance, so that was re-written to do one pass through the characters and do it all at once and is now running much better. Whether that ties back into my I don't like regular expressions ramble well or not I'm not sure :)
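As an illustration of the "one pass through the characters" idea, a single-pass normaliser might look something like the sketch below. This is an assumption about the general shape rather than the actual code from the project: the SinglePassNormaliser class name is hypothetical and the replacement table is deliberately tiny, just enough to show the technique.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class SinglePassNormaliser
{
    // Illustrative (and far from complete) set of accented character replacements
    private static readonly Dictionary<char, char> Replacements = new Dictionary<char, char>
    {
        { 'é', 'e' }, { 'è', 'e' }, { 'ê', 'e' }, { 'á', 'a' }, { 'à', 'a' },
        { 'ö', 'o' }, { 'ü', 'u' }, { 'ñ', 'n' }, { 'ç', 'c' }
    };

    public static string Normalise(string value)
    {
        if (value == null)
            throw new ArgumentNullException("value");

        // Each character is visited once: punctuation is dropped, everything else is
        // lower-cased and then swapped for its latin equivalent if one is known
        var content = new StringBuilder(value.Length);
        foreach (var character in value)
        {
            if (char.IsPunctuation(character))
                continue;

            var lowered = char.ToLowerInvariant(character);
            char replacement;
            content.Append(Replacements.TryGetValue(lowered, out replacement) ? replacement : lowered);
        }
        return content.ToString();
    }
}
```

With this sketch, Normalise("Café!") returns "cafe" without any regular expressions being involved.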

The point at which it does the most work is here:

public bool TryToTransform(string value, out string valueTransformed)

{

if (value == null)

throw new ArgumentNullException("value");

if (_matchType == MatchTypeOptions.SuffixOnly)

{

var matchedSuffix = _values.FirstOrDefault(

suffix => (value.Length > suffix.Length) && value.EndsWith(suffix)

);

if (matchedSuffix != null)

{

valueTransformed =

value.Substring(0, value.Length - matchedSuffix.Length) + _combinedValues;

return true;

}

}

else

{

if (_values.Contains(value))

{

valueTransformed = _combinedValues;

return true;

}

}

valueTransformed = null;

return false;

}

The first approach I took was to take out some of the LINQ - it makes it easy to read but I don't actually know for sure what it's doing! I thought it was worth checking if being more explicit about what I want to do would reap any performance benefit.. So the lengths are explicitly checked first (if matching WholeWord then the value length must match the suffix length, if matching SuffixOnly then the value length must be greater than the suffix length) and then count back through the last characters of the input value and ensure that each one matches the suffix. Not quite as readable but no big deal.

public bool TryToTransform(string value, out string valueTransformed)

{

if (value == null)

throw new ArgumentNullException("value");

foreach (var suffix in _values)

{

if (_matchType == MatchTypeOptions.WholeWord)

{

if (value.Length != suffix.Length)

continue;

}

else if (!(value.Length > suffix.Length))

continue;

var matchedSuffixLength = 0;

for (var index = 0; index < suffix.Length; index++)

{

if (value[value.Length - (index + 1)] != suffix[suffix.Length - (index + 1)])

{

matchedSuffixLength = 0;

break;

}

matchedSuffixLength++;

}

if (matchedSuffixLength == 0)

continue;

valueTransformed =

value.Substring(0, value.Length - matchedSuffixLength) + _combinedValues;

return true;

}

valueTransformed = null;

return false;

}

Running a few loops of the All English Words data I mentioned in the last post saw a performance improvement (when run in release mode) of over 3x - success!

But with what I've been learning about LINQ Expressions over the last year or so (culminating in The artist previously known as the AutoMapper-By-Constructor and The CompilableTypeConverter BitBucket repository) I couldn't help wondering if writing code that would generate expressions that unrolled the comparison loop and pre-generated the combined suffix extensions might not be faster. The only way to find out is to try!

The idea is that it would effectively generate code along the lines of:

if ((value.Length > 1)

&& (value[value.Length - 1] == 'y'))

return value.Substring(0, value.Length - 1) + "[y][ies]";

if ((value.Length > 3)

&& (value[value.Length - 3] == 'i')

&& (value[value.Length - 2] == 'e')

&& (value[value.Length - 1] == 's'))

return value.Substring(0, value.Length - 3) + "[y][ies]";

for all of the various plurality suffixes but while still maintaining the ability to easily define new suffix sets. And so, without further ado, I ended up with this:

/// <summary>

/// This will match common strings where one is the plural and the other the singular version

/// of the same word. It is not intended to be perfect and may match a few false positives, but

/// it should catch most of the most common cases.

/// </summary>

[Serializable]

public class EnglishPluralityStringNormaliser : IStringNormaliser

{

private Func<string, string> _normaliser;

private IStringNormaliser _optionalPreNormaliser;

private PreNormaliserWorkOptions _preNormaliserWork;

public EnglishPluralityStringNormaliser(

IEnumerable<PluralEntry> plurals,

IEnumerable<string> fallbackSuffixes,

IStringNormaliser optionalPreNormaliser,

PreNormaliserWorkOptions preNormaliserWork)

{

if (plurals == null)

throw new ArgumentNullException("plurals");

if (fallbackSuffixes == null)

throw new ArgumentNullException("fallbackSuffixes");

var allPreNormaliserOptions = (PreNormaliserWorkOptions)0;

foreach (PreNormaliserWorkOptions option in

Enum.GetValues(typeof(PreNormaliserWorkOptions)))

{

allPreNormaliserOptions = allPreNormaliserOptions | option;

}

if ((preNormaliserWork & allPreNormaliserOptions) != preNormaliserWork)

throw new ArgumentOutOfRangeException("preNormaliserWork");

_normaliser = GenerateNormaliser(plurals, fallbackSuffixes);

_optionalPreNormaliser = optionalPreNormaliser;

_preNormaliserWork = preNormaliserWork;

}

public EnglishPluralityStringNormaliser(

IStringNormaliser optionalPreNormaliser,

PreNormaliserWorkOptions preNormaliserWork

) : this(DefaultPlurals, DefaultFallback, optionalPreNormaliser, preNormaliserWork) { }

public EnglishPluralityStringNormaliser()

: this(null, PreNormaliserWorkOptions.PreNormaliserDoesNothing) { }

public string GetNormalisedString(string value)

{

if (value == null)

throw new ArgumentNullException("value");

// If an additional normaliser was specified in the constructor then process the

// string with that first (eg. a normaliser that removes punctuation from values

// may be beneficial depending upon the content that may be passed in)

if (_optionalPreNormaliser != null)

value = _optionalPreNormaliser.GetNormalisedString(value);

if ((_preNormaliserWork & PreNormaliserWorkOptions.PreNormaliserTrims)

!= PreNormaliserWorkOptions.PreNormaliserTrims)

value = value.Trim();

if (value == "")

return "";

// We have to lower case the trimmed value since the suffixes are all stored as

// lower case values

if ((_preNormaliserWork & PreNormaliserWorkOptions.PreNormaliserLowerCases)

!= PreNormaliserWorkOptions.PreNormaliserLowerCases)

value = value.ToLower();

return _normaliser(value);

}

public bool Equals(string x, string y)

{

if (x == null)

throw new ArgumentNullException("x");

if (y == null)

throw new ArgumentNullException("y");

return GetNormalisedString(x) == GetNormalisedString(y);

}

public int GetHashCode(string obj)

{

if (obj == null)

throw new ArgumentNullException("obj");

return GetNormalisedString(obj).GetHashCode();

}

private static Func<string, string> GenerateNormaliser(

IEnumerable<PluralEntry> plurals,

IEnumerable<string> fallbackSuffixes)

{

if (plurals == null)

throw new ArgumentNullException("plurals");

if (fallbackSuffixes == null)

throw new ArgumentNullException("fallbackSuffixes");

// Build up if statements for each suffix - if a match is found, return the input

// value with the matched suffix replaced with a combination of all the other

// suffixes in PluralEntry

var result = Expression.Parameter(typeof(string), "result");

var endLabel = Expression.Label(typeof(string));

var valueTrimmed = Expression.Parameter(typeof(string), "valueTrimmed");

var expressions = new List<Expression>();

foreach (var plural in plurals)

{

if (plural == null)

throw new ArgumentException("Null reference encountered in plurals set");

foreach (var suffix in plural.Values)

{

var assignNormalisedValueToResult = Expression.Assign(

result,

GenerateStringConcatExpression(

GenerateRemoveLastCharactersExpression(valueTrimmed, suffix.Length),

Expression.Constant(

CreateSuffixExtension(plural.Values),

typeof(string)

)

)

);

expressions.Add(

Expression.IfThen(

GeneratePredicate(suffix, valueTrimmed, plural.MatchType),

Expression.Block(

assignNormalisedValueToResult,

Expression.Return(endLabel, result)

)

)

);

}

}

// If any fallback suffixes are specified, add a statement to append them if none

// of the PluralEntry matches are made

fallbackSuffixes = TidyStringList(fallbackSuffixes, v => v.Trim().ToLower());

if (fallbackSuffixes.Any())

{

expressions.Add(

Expression.Assign(

result,

GenerateStringConcatExpression(

valueTrimmed,

Expression.Constant(

CreateSuffixExtension(fallbackSuffixes),

typeof(string)

)

)

)

);

}

else

expressions.Add(Expression.Assign(result, valueTrimmed));

// Add the return-point label, configured to return the string value in "result"

expressions.Add(Expression.Label(endLabel, result));

return Expression.Lambda<Func<string, string>>(

Expression.Block(

new[] { result },

expressions

),

valueTrimmed

).Compile();

}

/// <summary>

/// Generate an expression that determines whether a string parameter matches a specified

/// suffix / matchType combination

/// </summary>

private static Expression GeneratePredicate(

string suffix,

ParameterExpression valueTrimmed,

MatchTypeOptions matchType)

{

if (string.IsNullOrWhiteSpace(suffix))

throw new ArgumentException("Null/blank suffix specified");

if (valueTrimmed == null)

throw new ArgumentNullException("valueTrimmed");

if (!Enum.IsDefined(typeof(MatchTypeOptions), matchType))

throw new ArgumentOutOfRangeException("matchType");

suffix = suffix.Trim();

var conditionElements = new List<Expression>();

var lengthProperty = typeof(string).GetProperty("Length");

var indexedProperty = typeof(string).GetProperties().First(

p => (p.GetIndexParameters() ?? new ParameterInfo[0]).Any()

);

if (matchType == MatchTypeOptions.SuffixOnly)

{

conditionElements.Add(

Expression.GreaterThan(

Expression.Property(valueTrimmed, lengthProperty),

Expression.Constant(suffix.Length, typeof(int))

)

);

}

else

{

conditionElements.Add(

Expression.Equal(

Expression.Property(valueTrimmed, lengthProperty),

Expression.Constant(suffix.Length, typeof(int))

)

);

}

for (var index = 0; index < suffix.Length; index++)

{

conditionElements.Add(

Expression.Equal(

Expression.Constant(suffix[index], typeof(char)),

Expression.Property(

valueTrimmed,

indexedProperty,

Expression.Subtract(

Expression.Property(valueTrimmed, lengthProperty),

Expression.Constant(suffix.Length - index, typeof(int))

)

)

)

);

}

return CombineExpressionsWithAndAlso(conditionElements);

}

private static Expression CombineExpressionsWithAndAlso(

IEnumerable<Expression> expressions)

{

if (expressions == null)

throw new ArgumentNullException("expressions");

var expressionsTidied = new List<Expression>();

foreach (var expression in expressions)

{

if (expression == null)

throw new ArgumentException("Null reference encountered in expressions set");

expressionsTidied.Add(expression);

}

if (!expressionsTidied.Any())

throw new ArgumentException("No entries in expressions set");

else if (expressionsTidied.Count == 1)

return expressionsTidied[0];

var reducedExpressions = new List<Expression>();

for (var index = 0; index < expressionsTidied.Count; index += 2)

{

var expression = expressionsTidied[index];

if (index < (expressionsTidied.Count - 1))

{

var expressionNext = expressionsTidied[index + 1];

reducedExpressions.Add(Expression.AndAlso(expression, expressionNext));

}

else

reducedExpressions.Add(expression);

}

return (reducedExpressions.Count == 1)

? reducedExpressions[0]

: CombineExpressionsWithAndAlso(reducedExpressions);

}

/// <summary>

/// The value Expression must represent a non-null string that is at least as long as

/// the specified length or an exception will be thrown upon execution

/// </summary>

private static Expression GenerateRemoveLastCharactersExpression(

Expression value,

int length)

{

if (value == null)

throw new ArgumentNullException("value");

if (length < 0)

throw new ArgumentOutOfRangeException("length");

return Expression.Call(

value,

typeof(string).GetMethod("Substring", new[] { typeof(int), typeof(int) }),

Expression.Constant(0),

Expression.Subtract(

Expression.Property(value, typeof(string).GetProperty("Length")),

Expression.Constant(length, typeof(int))

)

);

}

/// <summary>

/// The values Expressions must represent strings otherwise the expression will fail when

/// executed

/// </summary>

private static Expression GenerateStringConcatExpression(params Expression[] values)

{

if (values == null)

throw new ArgumentNullException("values");

var valuesTidied = values.ToList();

if (!valuesTidied.Any())

throw new ArgumentException("No entries in values set");

if (valuesTidied.Any(v => v == null))

throw new ArgumentException("Null reference encountered in values set");

return Expression.Call(

typeof(string).GetMethod("Concat", new[] { typeof(string[]) }),

Expression.NewArrayInit(

typeof(string),

valuesTidied

)

);

}

private static string CreateSuffixExtension(IEnumerable<string> suffixes)

{

if (suffixes == null)

throw new ArgumentNullException("suffixes");

var suffixesTidied = suffixes.ToList();

if (!suffixesTidied.Any())

throw new ArgumentException("No entries in suffixes set");

if (suffixesTidied.Any(s => string.IsNullOrWhiteSpace(s)))

throw new ArgumentException("Null/blank entry encountered in suffixes set");

return "|" + string.Join("|", suffixesTidied.Select(s => s.Trim()));

}

/// <summary>

/// Given a set of values, ensure that none are null and return them de-duplicated after

/// having been pushed through a string manipulation. This will throw an exception for

/// null arguments or if any null value is encountered in the values set.

/// </summary>

private static IEnumerable<string> TidyStringList(

IEnumerable<string> values,

Func<string, string> transformer)

{

if (values == null)

throw new ArgumentNullException("values");

if (transformer == null)

throw new ArgumentNullException("transformer");

var valuesTidied = new List<string>();

foreach (var value in values)

{

if (value == null)

throw new ArgumentException("Null entry encountered in values");

var valueToStore = transformer(value);

if (!valuesTidied.Contains(valueToStore))

valuesTidied.Add(valueToStore);

}

return valuesTidied.Distinct();

}

public readonly static IEnumerable<string> DefaultFallback = new[] { "ses", "es", "s" };

public readonly static PluralEntry[] DefaultPlurals = new[]

{

// eg. formula / formulae / formulas

new PluralEntry(new[] { "ula", "ulae", "ulas" }, MatchTypeOptions.SuffixOnly),

// eg. category / categories

new PluralEntry(new[] { "y", "ies" }, MatchTypeOptions.SuffixOnly),

// eg. cactus / cactii

new PluralEntry(new[] { "us", "ii" }, MatchTypeOptions.SuffixOnly),

// eg. child / children

new PluralEntry(new[] { "ld", "ldren" }, MatchTypeOptions.SuffixOnly),

// eg. medium / media

new PluralEntry(new[] { "ium", "ia" }, MatchTypeOptions.SuffixOnly),

// Common special cases that have to come before the "ses", "es", "s" form

new PluralEntry(new[] { "index", "indexes", "indices" }, MatchTypeOptions.WholeWord),

new PluralEntry(new[] { "matrix", "matrices" }, MatchTypeOptions.WholeWord),

new PluralEntry(new[] { "vertex", "vertices" }, MatchTypeOptions.WholeWord),

// eg. Abacuses, matching "s" here means we must use "ses", "es" AND "s" as fallbacks

new PluralEntry(new[] { "ses", "es", "s" }, MatchTypeOptions.SuffixOnly),

// Other common special cases

new PluralEntry(new[] { "datum", "data" }, MatchTypeOptions.WholeWord),

new PluralEntry(new[] { "man", "men" }, MatchTypeOptions.WholeWord),

new PluralEntry(new[] { "woman", "women" }, MatchTypeOptions.WholeWord)

};

[Serializable]

public class PluralEntry

{

public PluralEntry(IEnumerable<string> values, MatchTypeOptions matchType)

{

if (values == null)

throw new ArgumentNullException("values");

if (!Enum.IsDefined(typeof(MatchTypeOptions), matchType))

throw new ArgumentOutOfRangeException("matchType");

var valuesTidied = TidyStringList(values, v => v.Trim().ToLower());

if (!valuesTidied.Any())

throw new ArgumentException("No entries in values set");

Values = valuesTidied.Distinct().ToList().AsReadOnly();

MatchType = matchType;

}

/// <summary>

/// This will never be null or an empty set, nor will it contain any null, empty or

/// duplicate values (all values are lower-cased and trimmed)

/// </summary>

public ReadOnlyCollection<string> Values { get; private set; }

public MatchTypeOptions MatchType { get; private set; }

}

[Serializable]

public enum MatchTypeOptions

{

SuffixOnly,

WholeWord

}

}

It still takes me a fair while to craft the LINQ Expressions that generate this code, but I do think that once written the result is actually fairly easy to follow. For each suffix in a PluralEntry (where the PluralEntry might describe the group y, ies as a SuffixOnly extension - as seen in the last post) a predicate is generated with LINQ Expressions that compares the input string's length and each of the characters that could correlate with the suffix string. This is very similar to the inside of the loop in the first optimisation at the top of this post. An IfThen Expression will consider this predicate and - if matched - generate a result that removes the suffix from the input value and appends a combined string consisting of the suffix values in the group, before jumping to the end of the Expression block (effectively "returning" out of the block). Again, this is just like the setting of the valueTransformed string in the earlier code. If none of the suffix groups are found to match then it will append a default set of suffixes, so that cat is transformed to cat|s|es|ses in order to match cats, which would also be transformed to cat|s|es|ses, for example.
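To make the shape of the generated code a little more concrete, here is a rough hand-written sketch of what the compiled Expression block does for just two of the suffix groups plus the fallback. The class name HandWrittenNormaliserSketch is made up for illustration, the loop stands in for what is really an unrolled run of if statements, and the combined suffix strings are written in the order that the arrays appear in the listing above, so treat it as an approximation rather than the real output of GenerateNormaliser.

```csharp
using System;

public static class HandWrittenNormaliserSketch
{
    // Hypothetical hand-written equivalent of the compiled block for two SuffixOnly
    // groups plus the fallback. Assumes the input is already trimmed and lower-cased.
    public static string Normalise(string valueTrimmed)
    {
        if (valueTrimmed == null)
            throw new ArgumentNullException("valueTrimmed");

        // Each entry pairs a suffix to look for with the combined string for its group
        var suffixes = new[]
        {
            Tuple.Create("y", "|y|ies"),
            Tuple.Create("ies", "|y|ies"),
            Tuple.Create("ses", "|ses|es|s"),
            Tuple.Create("es", "|ses|es|s"),
            Tuple.Create("s", "|ses|es|s")
        };
        foreach (var entry in suffixes)
        {
            var suffix = entry.Item1;

            // SuffixOnly: the value must be longer than the suffix that it ends with
            if ((valueTrimmed.Length > suffix.Length)
            && valueTrimmed.EndsWith(suffix, StringComparison.Ordinal))
            {
                // The equivalent of Expression.Return(endLabel, result)
                return valueTrimmed.Substring(0, valueTrimmed.Length - suffix.Length)
                    + entry.Item2;
            }
        }

        // No group matched: append the fallback suffixes to the unaltered value
        return valueTrimmed + "|ses|es|s";
    }
}
```

With this sketch, "category" and "categories" both come out as "categor|y|ies", while "cat" and "cats" both end up with the same fallback suffix string appended to "cat", which is what allows the singular and plural forms to be treated as equal.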

There are a couple of oddities in the code. I struggled for a while to find a nice way to access characters by index in a string, since the ArrayAccess Expressions can't be used (a string isn't technically an array of characters). You have to first use reflection to get hold of the indexed property of the string type; there's only one, so that must be the property we want to access! When comparing the string length and the individual characters, the Expressions are combined with the AndAlso Expression as this ensures that short-circuiting of the conditions is utilised - as soon as one condition is not met, any further ones are ignored.
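As a cut-down illustration of those two points, the sketch below builds a SuffixOnly-style predicate on its own. SuffixPredicateSketch and BuildEndsWith are names invented for this example, and the conditions are chained left-to-right with AndAlso rather than paired up recursively as in CombineExpressionsWithAndAlso, so it is a simplification and not a copy of the code above.

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;

public static class SuffixPredicateSketch
{
    // Hypothetical helper: compiles a predicate that is true when the value is
    // longer than the suffix and ends with it
    public static Func<string, bool> BuildEndsWith(string suffix)
    {
        if (string.IsNullOrEmpty(suffix))
            throw new ArgumentException("Null/blank suffix specified");

        var value = Expression.Parameter(typeof(string), "value");
        var lengthProperty = typeof(string).GetProperty("Length");

        // A string has a single indexed property, so the first one found is the
        // character-by-index lookup
        var indexedProperty = typeof(string).GetProperties()
            .First(p => p.GetIndexParameters().Length > 0);

        // The length check goes first so that the character lookups which follow
        // are never evaluated with an out-of-range index
        Expression condition = Expression.GreaterThan(
            Expression.Property(value, lengthProperty),
            Expression.Constant(suffix.Length, typeof(int))
        );
        for (var index = 0; index < suffix.Length; index++)
        {
            var characterMatches = Expression.Equal(
                Expression.Constant(suffix[index], typeof(char)),
                Expression.Property(
                    value,
                    indexedProperty,
                    Expression.Subtract(
                        Expression.Property(value, lengthProperty),
                        Expression.Constant(suffix.Length - index, typeof(int))
                    )
                )
            );

            // AndAlso short-circuits: once one condition fails, the rest are skipped
            condition = Expression.AndAlso(condition, characterMatches);
        }
        return Expression.Lambda<Func<string, bool>>(condition, value).Compile();
    }
}
```

Calling BuildEndsWith("ies") and passing the result a one-character string such as "a" returns false rather than throwing, because the failed length check means that none of the character lookups (which would be at negative indexes) are ever executed.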

This brought on another performance improvement of over 3x - success again!

Additional tweaks